Content-Based Haptic Texture Retrieval

| Funding Agency: | DFG |
|---|---|
| Duration: | 2 years, 01.03.2016 – 28.02.2018 |
| Contact Persons: | Matti Strese |

Scope of the project

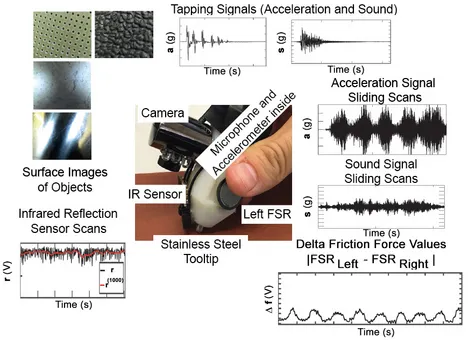

While it is straightforward for humans to describe and characterize the properties of object surfaces after interacting with them [1], technical systems still largely lack such capabilities. Recently, sensorized tools have been proposed that record tool-surface interaction data in order to characterize and classify surface materials automatically. In this project, we have developed a tool-mediated haptic texture classification and retrieval system that currently distinguishes among 108 different materials and ranks them according to their perceptual similarity. The recorded data can also be used to build a parametric representation of each material for artificial display on haptic devices.

Scan-invariant and meaningful perceptual tactile features

Audio signals and images offer a wide variety of robust and perceptually relevant features that serve as highly distinctive fingerprints in audio and image recognition and retrieval systems. For texture recognition and retrieval based on contact acceleration signals, however, scan-invariant features, i.e., features that are robust against external influences such as the applied force and the scan speed, are still lacking. When a human performs the surface exploration, the well-defined exploration strategies typically used in robotic texture recognition are not applicable. Humans constantly vary the applied force and scan speed [2] while stroking a surface texture, which leads to a large variability in the recorded acceleration signals. Scan-invariant features are therefore especially important when such free-hand recordings are used for recognition or retrieval.

Hence, the first objective of our research is the development of scan-invariant features for texture recognition and retrieval using unconstrained surface exploration. We plan to focus first on the acceleration signals representing the surface texture, because commonly deployed vibrotactile displays are also based on acceleration signals [3]. We therefore hypothesize that acceleration signals are also the most suitable basis for determining whether two displayed surface textures feel similar. In a second step, audio and visual information can additionally be extracted to support the retrieval of similar textures. To this end, we aim to evaluate how the information extracted from texture signals, audio signals and surface images can be fused into highly distinctive features for texture recognition and retrieval.
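To make the notion of robustness against the applied force more concrete, the following Python sketch shows one simple way to remove the dependence on signal amplitude. It assumes, as a simplification, that a larger applied force mainly scales the amplitude of the acceleration signal; under that assumption, the relative energy in each frequency band does not change. The function names and the choice of eight equal-width bands are hypothetical and are not the features developed in this project. The sketch does not compensate for scan speed, which stretches or compresses the spectrum along the frequency axis and would need additional treatment.

```python
import cmath
import math
from typing import List, Sequence


def magnitude_spectrum(signal: Sequence[float]) -> List[float]:
    """Magnitude of the one-sided DFT (naive O(N^2), fine for short frames)."""
    n = len(signal)
    mean = sum(signal) / n  # remove DC offset (e.g. gravity or sensor bias)
    centered = [s - mean for s in signal]
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(
            x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centered)
        )
        spectrum.append(abs(acc))
    return spectrum


def band_energy_features(signal: Sequence[float], n_bands: int = 8) -> List[float]:
    """Relative energy per frequency band (hypothetical feature).

    The bands sum to 1, so multiplying the signal by a constant gain
    (our stand-in for a change in applied force) leaves them unchanged.
    Bins that do not fill a complete band at the top are ignored.
    """
    power = [m * m for m in magnitude_spectrum(signal)]
    bins = power[1:]  # skip the DC bin
    band_size = max(1, len(bins) // n_bands)
    bands = [sum(bins[i * band_size:(i + 1) * band_size]) for i in range(n_bands)]
    total = sum(bands)
    if total == 0.0:
        return [0.0] * n_bands
    return [b / total for b in bands]
```

For example, `band_energy_features(x)` and `band_energy_features([3.5 * s for s in x])` yield the same vector up to floating-point rounding, whereas recording the same surface at a different scan speed would shift energy between bands.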

Development and evaluation of a texture retrieval system

Our second research objective is to develop and evaluate a novel texture retrieval framework. Given a texture signal recorded during free-hand exploration as the query, the retrieval system returns a list of textures that “feel” similar. Scan-invariant features are essential to build such a system. Furthermore, the features need to capture the perceptual characteristics of the surface textures. The features defined in our previous work must therefore be evaluated regarding their validity for texture retrieval and, if necessary, redesigned accordingly. This requires knowledge about the perceptual similarity and dissimilarity between the textures included in our dataset, which can only be gathered through extensive subjective user experiments. Hence, statistically significant similarity data between the textures needs to be established first. With this ground-truth data available, the retrieval system can be evaluated for different features and machine learning approaches. The goal is to develop a system that achieves high retrieval performance while keeping the complexity as low as possible. In this context, complexity comprises the sensing effort, the computational effort and, as a consequence, the hardware cost.
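A minimal Python sketch of the retrieval step and one possible evaluation metric is given below. It assumes that each texture is already described by a fixed-length feature vector, that perceptual similarity is approximated by Euclidean distance in feature space, and that the ground truth from the user experiments is available as a set of textures judged similar to the query. The texture names, feature values and helper functions are hypothetical; the project's actual features, distance measure and evaluation metric may differ.

```python
import math
from typing import Dict, List, Sequence, Set


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def retrieve(query: Sequence[float], database: Dict[str, Sequence[float]], k: int) -> List[str]:
    """Return the names of the k textures whose features are closest to the query."""
    ranked = sorted(database, key=lambda name: euclidean(query, database[name]))
    return ranked[:k]


def precision_at_k(retrieved: List[str], relevant: Set[str]) -> float:
    """Fraction of retrieved textures that the ground truth marks as similar."""
    if not retrieved:
        return 0.0
    hits = sum(1 for name in retrieved if name in relevant)
    return hits / len(retrieved)


# Hypothetical feature database (values invented for illustration only).
database = {
    "cork": [0.10, 0.20],
    "felt": [0.15, 0.25],
    "steel": [0.90, 0.80],
    "glass": [0.95, 0.90],
}
query = [0.12, 0.22]
top = retrieve(query, database, k=2)
print(top)  # ['cork', 'felt']
print(precision_at_k(top, relevant={"cork", "felt"}))  # 1.0
```

Precision at k is only one option; if the user experiments yield graded similarity ratings, a rank correlation between feature distances and perceptual dissimilarities would be an alternative way to score the ranking.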

[1] K. J. Kuchenbecker, J. Romano, and W. McMahan, “Haptography: Capturing and Recreating the Rich Feel of Real Surfaces,” in Proc. 14th International Symposium on Robotics Research (ISRR), 2009.

[2] S. J. Lederman and R. L. Klatzky, “Haptic perception: A tutorial,” Attention, Perception, & Psychophysics, vol. 71, no. 7, pp. 1439–1459, Oct. 2009.

[3] H. Culbertson, J. Unwin, and K. J. Kuchenbecker, “Modeling and Rendering Realistic Textures from Unconstrained Tool-Surface Interactions,” IEEE Transactions on Haptics, vol. 7, no. 3, pp. 381–393, July 2014.