A Camera-based Tactile Sensor for Sorting Tasks

Autonomous robots require sophisticated perception systems to manipulate objects. In this context, cameras are a rich source of information and are also precise and inexpensive. Our novel sensor approach uses cameras together with robust image processing algorithms to measure forces and other haptic data.

The sensor system is based on flexible rubber foam without any internal electronics, which is attached to the fingers of a gripper. This flexible material deforms in a characteristic way during contact. The camera observes the foam, and image processing algorithms detect the foam’s deformation from the camera images. Finally, our sensor software calculates haptic contact data during the grasp procedure.

This video shows the capabilities of this so-called visuo-haptic sensor in a sorting task. A linear robot with a two-finger gripper sorts plastic bottles based on their compliance. During the grasp procedure, the visuo-haptic sensor measures the contact forces, the pressure distribution along the gripper finger, the finger position, and the object deformation, and also estimates the object compliance as well as object properties such as shape and size. The novelty lies in the fact that all of this data is extracted from camera images, i.e., the robot is “feeling by seeing”.
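As a rough illustration of sorting by compliance, the sketch below fits a single compliance value (deformation per unit force) to a series of force and deformation readings and assigns a bin. The function names, the units and the threshold are hypothetical and are not taken from the sensor software.

```python
def estimate_compliance(forces_n, deformations_mm):
    """Least-squares slope through the origin of deformation over force (mm/N)."""
    numerator = sum(f * d for f, d in zip(forces_n, deformations_mm))
    denominator = sum(f * f for f in forces_n)
    return numerator / denominator


def sort_bin(compliance_mm_per_n, threshold=0.5):
    """Assign a bin; the threshold is an arbitrary placeholder value."""
    return "soft" if compliance_mm_per_n > threshold else "hard"


# Readings collected while the gripper closes (made-up numbers).
soft_bottle = estimate_compliance([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
hard_bottle = estimate_compliance([1.0, 2.0, 3.0], [0.1, 0.2, 0.3])
print(sort_bin(soft_bottle), sort_bin(hard_bottle))
```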

More information: https://www.ei.tum.de/lmt/forschung/ausgewaehlte-projekte/bmwi-exist-rovi/

Reproduction of Textures based on Electrovibration

This demonstration presents a novel approach to display textures via electrovibration. We collect acceleration data from real textures using a sensorized tool-tip during controlled scans and capture images of the textures. We display these acceleration data using a common electrovibration setup.

If a single sine wave is displayed, spectral shifts can be observed. This effect originates from the electrostatic force between the finger pad and the touchscreen. According to our previous observations, when multiple sine waves are displayed, interference occurs and acceleration signals from real textures may not feel perceptually realistic. To mitigate this, we propose to display only the dominant frequencies of the real texture signal, taking the just-noticeable difference (JND) of frequencies into account. During the demo session, attendees can feel the differences between previously recorded texture signals and our proposed frequency-reduced texture signals. Moreover, the effect of different numbers of dominant frequencies will be shown to the attendees.
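The idea of keeping only dominant frequencies can be sketched as follows. This is a minimal illustration, not the method used in the demo: it ranks the bins of a plain DFT by magnitude and skips any component that lies within one JND of a component already kept. The function names and the JND fraction of 0.2 are assumptions chosen for the example.

```python
import cmath
import math


def magnitude_spectrum(signal, sample_rate):
    """Return (frequency_hz, magnitude) pairs for the positive-frequency DFT bins."""
    n = len(signal)
    spectrum = []
    for k in range(1, n // 2):
        acc = sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        spectrum.append((k * sample_rate / n, abs(acc) / n))
    return spectrum


def dominant_frequencies(signal, sample_rate, count, jnd_fraction=0.2):
    """Keep up to `count` strongest components, skipping those within one JND of a kept one.

    jnd_fraction is a placeholder value, not a measured JND.
    """
    ranked = sorted(magnitude_spectrum(signal, sample_rate),
                    key=lambda fm: fm[1], reverse=True)
    kept = []
    for freq, mag in ranked:
        if all(abs(freq - f) > jnd_fraction * f for f, _ in kept):
            kept.append((freq, mag))
        if len(kept) == count:
            break
    return kept
```

With a test signal containing components at 50 Hz, 55 Hz and 120 Hz, asking for two dominant frequencies keeps 50 Hz and 120 Hz, because 55 Hz falls within the assumed JND of the stronger 50 Hz component.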

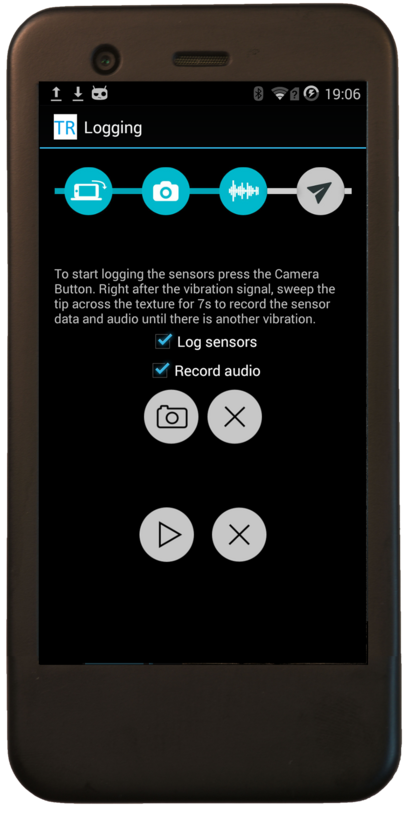

Handheld Surface Material Classification Systems

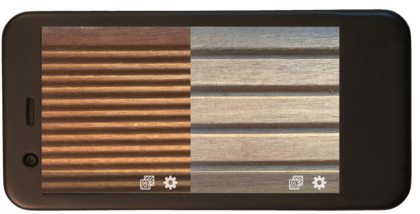

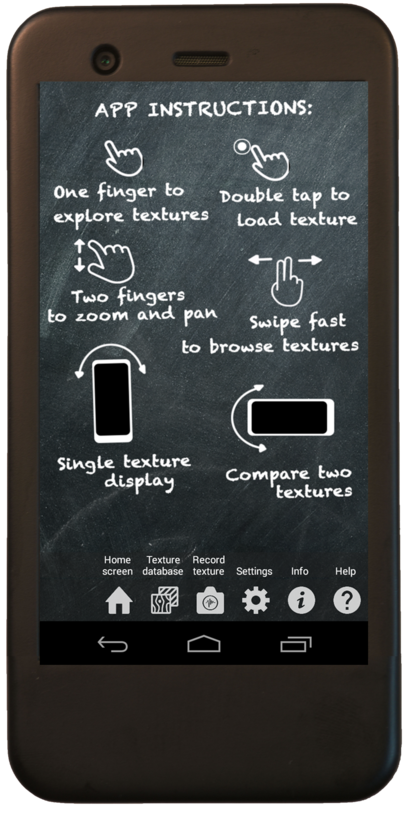

This video presents a content-based surface material retrieval (CBSMR) system for tool-mediated freehand exploration of surface materials. We demonstrate a custom 3D-printed sensorized tool and a common smartphone running an Android-based surface classification app. Both are able to classify among a set of surface materials without relying on explicit scan force and scan velocity measurements.

The systems rely on features from our prior work that represent the main psychophysical dimensions of tactile surface material perception, namely friction, hardness, macroscopic roughness, microscopic roughness and warmth. We use a haptic database consisting of 108 surface materials as a reference for a live demonstration of both devices for the proposed task of surface material classification during the conference.

Tactile Computer Mouse for Interactive Surface Material Exploration

This demo presents the prototype of a novel tactile input/output device for the exploration of object surface materials on a computer. Our proposed tactile computer mouse is equipped with a broad range of actuators to present various tactile cues to a user, but preserves the input capabilities of a common computer mouse.

The design and implementation of our device are motivated by related work, which identifies five major tactile dimensions relevant to human haptic perception of textures and surface materials: microscopic roughness, macroscopic roughness, friction, hardness and warmth. The device provides tactile feedback for each of these dimensions. We use a haptic database from our prior work to provide the necessary signals (e.g., acceleration, image or sound data) for recreating surface materials and display them using our surface material rendering application.

Ultra-Low Delay Video Transmission

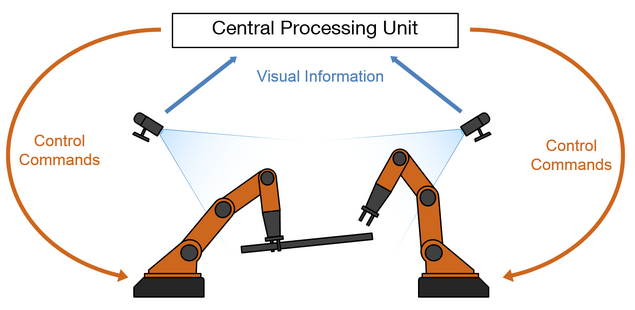

Low-delay video transmission is becoming increasingly important. Delay-critical, video-enabled applications range from teleoperation scenarios such as controlling drones or telesurgery to autonomous control through computer vision algorithms applied to real-time video. Two examples of this are autonomous driving and visual servoing, as depicted in the images below.

For such highly dynamic applications, the end-to-end (E2E) latency of the involved video transmission is critical. Therefore, we analyze the latency contributors in video transmission and research approaches to reduce latency. In our project, we first needed to measure the E2E latency with sub-millisecond precision. We define E2E latency as the time between an event first visibly taking place in the field of view of the camera and the time when this event is first visibly represented on a display. We developed a system that can perform such a precise measurement in an automated, non-intrusive, portable and inexpensive manner, which no previous system could deliver.

Current work investigates improving the delay measurement system, simulating the delay of video transmission, analyzing the Rate-Distortion-Delay tradeoff in video coding, and a number of measures to reduce delay in video coding. Some of these methods are the subject of patent applications, which is why we cannot provide further details at this time.

Compensating the Effect of Communication Delay in Client-Server-based Shared Haptic Virtual Environments

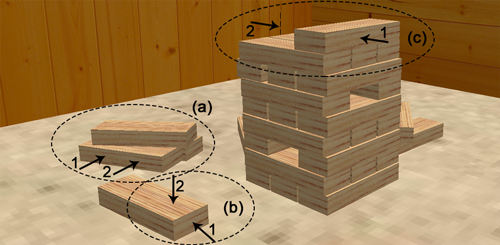

Shared Haptic Virtual Environments can be realized using a client-server architecture. In this architecture, each client maintains a local copy of the virtual environment (VE). A centralized physics simulation running on a server calculates the object states based on haptic device position information received from the clients. The object states are sent back to the clients to update the local copies of the VE, which are used to render interaction forces displayed to the user through a haptic device.

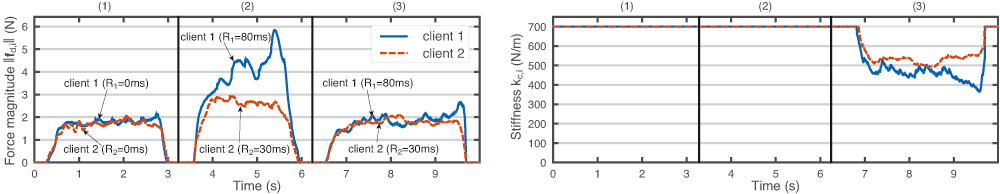

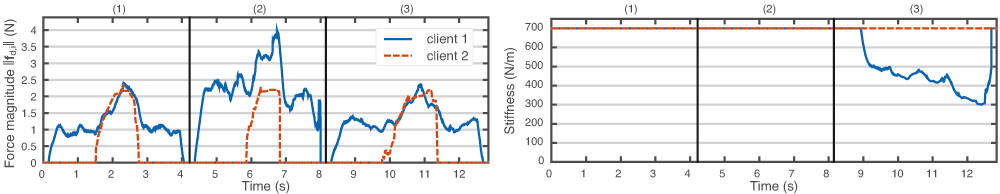

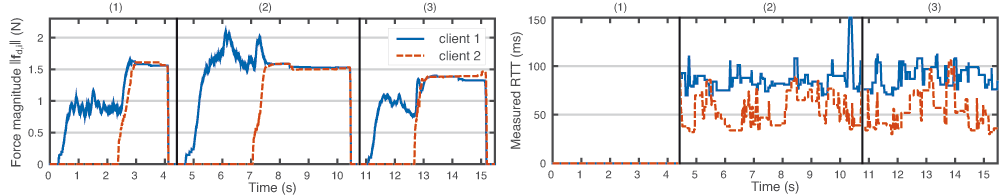

Communication delay leads to delayed object state updates and increased force feedback rendered at the clients. In the work presented in [1], we analyze the effect of communication delay on the magnitude of the rendered forces at the clients for cooperative multi-user interactions with rigid objects. The analysis yields guidelines on the tolerable communication delay. If this delay is exceeded, the increased force magnitude becomes haptically perceivable. We propose an adaptive force rendering scheme to compensate for this effect, which dynamically changes the stiffness used in the force rendering at the clients. Our experimental results, including a subjective user study, verify the applicability of the analysis and of the proposed scheme for compensating the effect of time-varying communication delay in a multi-user shared haptic virtual environment (SHVE).
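To make the effect concrete, the following sketch assumes a simple penalty-based force model (force proportional to penetration depth) and assumes that a stale object state adds a penetration of roughly push velocity times delay. Under these assumptions, lowering the stiffness can bring the rendered force back to its undelayed value. This is an illustrative simplification with hypothetical names, not the adaptive scheme from [1].

```python
def rendered_force(stiffness_n_per_m, penetration_m):
    """Penalty-based force: proportional to how far the device penetrates the object."""
    return stiffness_n_per_m * max(penetration_m, 0.0)


def adapted_stiffness(nominal_stiffness, nominal_penetration_m, push_velocity_mps, delay_s):
    """Scale stiffness so the force with a stale object state matches the undelayed force.

    Assumes the stale state adds |velocity| * delay of extra penetration.
    """
    extra_penetration = abs(push_velocity_mps) * delay_s
    return nominal_stiffness * nominal_penetration_m / (nominal_penetration_m + extra_penetration)


# Example values (made up): 1000 N/m, 2 mm penetration, 5 cm/s push, 40 ms delay.
k = adapted_stiffness(1000.0, 0.002, 0.05, 0.04)
undelayed = rendered_force(1000.0, 0.002)
delayed_unadapted = rendered_force(1000.0, 0.002 + 0.05 * 0.04)
delayed_adapted = rendered_force(k, 0.002 + 0.05 * 0.04)
print(undelayed, delayed_unadapted, delayed_adapted)
```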

Publications

C. Schuwerk, X. Xu, R. Chaudhari, E. Steinbach,

Compensating the Effect of Communication Delay in Client-Server-Based Shared Haptic Virtual Environments,

ACM Transactions on Applied Perception, vol. 13, no. 1, pp. 1-22, December 2015.

The following short video gives some details about the application used to evaluate the proposed schemes and the experimental setup used for the subjective evaluation.

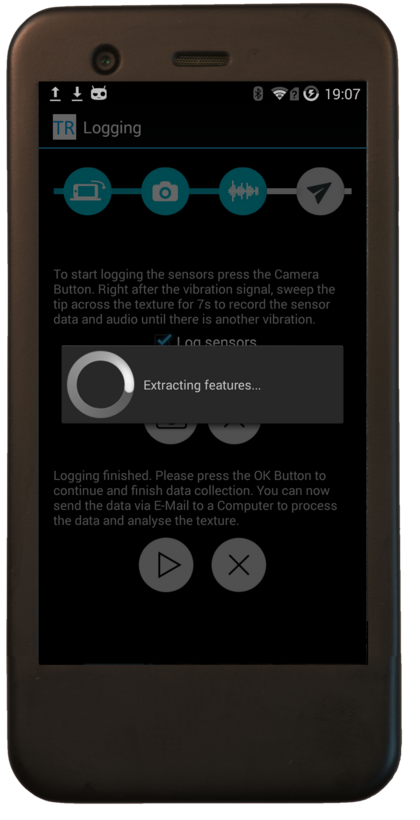

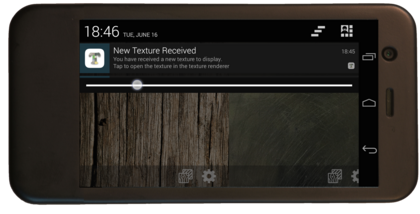

Remote Texture Exploration

Imagine you are using your mobile device to browse the Internet for new furniture, home decoration or clothes. Today’s systems provide us only with information about the look of the products, but how does their surface feel when touched? For the future, we imagine systems that allow us to remotely enjoy the look and feel of products. The impact of such technology could be enormous, especially for E-Commerce. Tangible example applications are product customization, selection of materials, product browsing or virtual product showcases.

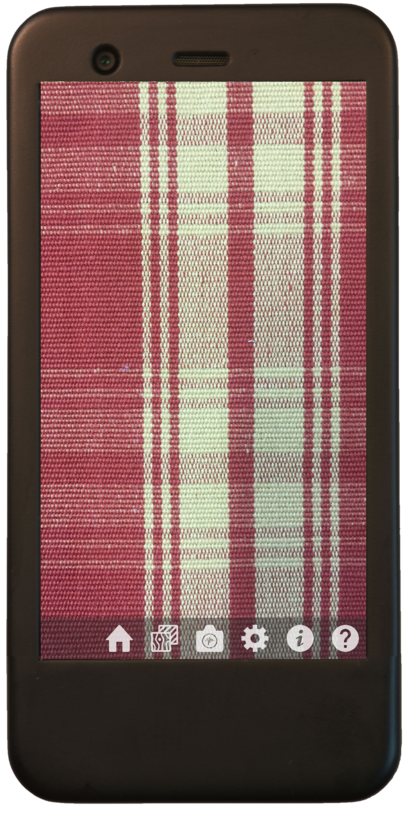

The "Remote Texture Exploration" app displays surface textures using the TPad Phone. The textures can be received from a texture database or from remote smartphones. Vibration and audio feedback are included to enrich the user experience. The texture models used to display textures recreate important dimensions of human tactile perception, such as roughness or friction. New texture models are created using live recordings from the smartphone sensors (IMU, camera, microphone).

Poster

Video

The following short video introduces the Remote Texture Exploration App:

Computational Haptics Lab - Final Lab Projects 2014

This video is a compilation of the final lab projects implemented by the students during the Computational Haptics Lab in the summer semester 2014.

The Computational Haptics Lab consists of theoretical background and weekly programming tasks. In the last weeks of the lab, the students implement small applications or games in groups of up to two students.

LMT Haptic Texture Database

While stroking a rigid tool over an object surface, vibrations induced on the tool, which represent the interaction between the tool and the surface texture, can be measured by means of an accelerometer. Such acceleration signals can be used to recognize or to classify object surface textures. The temporal and spectral properties of the acquired signals, however, heavily depend on different parameters like the applied force on the surface or the lateral velocity during the exploration. Robust features that are invariant against such scan-time parameters are currently lacking, but would enable texture classification and recognition using uncontrolled human exploratory movements. We introduce a haptic texture database which allows for a systematic analysis of feature candidates. The database includes recorded accelerations measured during controlled and well-defined texture scans, as well as uncontrolled human free hand texture explorations for 69 different textures.

A Visual-Haptic Multiplexing Scheme for Teleoperation over Constant-Bitrate Communication Links

We developed a novel multiplexing scheme for teleoperation over constant bitrate (CBR) communication links. The approach uniformly divides the channel into 1 ms resource buckets and controls the size of the transmitted video packets as a function of the irregular haptic transmission events. The performance of the proposed multiplexing scheme was measured objectively in terms of delay jitter and packet rates. The results were achieved with acceptable multiplexing delay on both the visual and haptic streams. Our evaluation shows that the proposed approach can provide a guaranteed constant delay for the time-critical force signal, while introducing acceptable video delay.
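The bucket idea can be sketched in a few lines. The example below assumes that each 1 ms bucket carries a fixed number of bytes determined by the link bitrate, that a haptic packet (if one is due) is served first, and that the video packet fills the remainder. The function names and the fixed haptic packet size are assumptions made for illustration.

```python
def bucket_capacity_bytes(link_bitrate_bps, bucket_ms=1):
    """Bytes that fit into one resource bucket on a constant-bitrate link."""
    return link_bitrate_bps * bucket_ms // 8000


def schedule(link_bitrate_bps, haptic_buckets, haptic_packet_bytes, num_buckets):
    """Return (haptic_bytes, video_bytes) per bucket.

    haptic_buckets: set of bucket indices in which a haptic packet must be sent.
    Assumes a haptic packet always fits into a single bucket.
    """
    capacity = bucket_capacity_bytes(link_bitrate_bps)
    plan = []
    for index in range(num_buckets):
        haptic = haptic_packet_bytes if index in haptic_buckets else 0
        plan.append((haptic, capacity - haptic))  # video shrinks when haptics are sent
    return plan


# 1.2 Mbit/s link: 150 bytes per 1 ms bucket; haptic events in buckets 1 and 4.
for haptic, video in schedule(1_200_000, {1, 4}, 30, 5):
    print(haptic, video)
```

Because the haptic packet is always sent in the bucket in which it arrives, its multiplexing delay stays bounded by the bucket duration, while the video stream absorbs the variation.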

Computational Haptics Lab - Final Lab Projects (Summer Semester 2013)

This video is a compilation of the final lab projects implemented by the students during the Computational Haptics Lab in the summer semester 2013.

The Computational Haptics Lab consists of theoretical background and weekly programming tasks. In the last weeks of the lab, the students implement small applications or games in groups of up to two students.

Visual Indoor Navigation: Vision-based Localization for Smartphones

This is a live demonstration of the visual indoor localization and navigation system developed for the project NAVVIS at the Institute for Media Technology at the Technical University of Munich.

The video shows our lab demonstrator running on an Android phone. By analyzing the camera images for distinctive visual features, the position and orientation of the smartphone are recognized and displayed on the map. For this demonstration, only visual information has been used; no other localization sources have been used to improve the vision-based results. To allow a comparison of localization accuracy, Android's network-based position estimate (Wi-Fi and cellular networks) is also displayed.

The approach used in this demonstration of a vision-based localization system employs a novel combination of a content-based image retrieval engine and a method to generate virtual viewpoints for the reference database. In a preparation phase, virtual views are computed by transforming the viewpoint of images that were captured during the mapping run. The virtual views are represented by their respective bag-of-features vectors and image retrieval techniques are applied to determine the most likely pose of query images. As virtual image locations and orientations are decoupled from actual image locations, the system is able to work with sparse reference imagery and copes well with perspective distortion.
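The retrieval step described above can be illustrated with a small sketch: every (virtual) reference view is stored with its pose and its bag-of-features vector, and a query is assigned the pose of the most similar view. Cosine similarity and the data layout are assumptions made for this example; the actual retrieval engine may use a different scoring function and index structure.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-features histograms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def most_likely_pose(query_bof, reference_views):
    """reference_views: list of (pose, bof_vector); returns the pose of the best match."""
    best = max(reference_views, key=lambda view: cosine_similarity(query_bof, view[1]))
    return best[0]


# Poses are (x_m, y_m, heading_deg); vectors count visual-word occurrences (made-up data).
views = [
    ((0.0, 0.0, 0.0), [3, 0, 1, 0]),
    ((2.0, 1.0, 90.0), [0, 2, 0, 4]),
]
print(most_likely_pose([0, 1, 0, 3], views))
```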

Publications

R. Huitl, G. Schroth, S. Hilsenbeck, F. Schweiger, E. Steinbach,

Virtual Reference View Generation for CBIR-based Visual Pose Estimation,

ACM Multimedia 2012, Nara, Japan, November 2012.

Video Synchronization in a Cooperative Mobile Media Scenario

Within the scope of a joint research project with Docomo Eurolabs, we have developed and optimized methods for the synchronization of videos in a multi-perspective UGC scenario [1][2][3][4]. The video below presents the problem and explains the solution. It also shows screen casts from the CoopMedia portal, the demonstration platform of the CoopMedia project.
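The publication titles below indicate that synchronization operates on bitrate sequences and cross-correlation. As a simplified illustration of that idea, the sketch below slides one per-frame bitrate sequence against another and returns the shift with the highest mean-centered correlation. It is a plain cross-correlation with hypothetical names, not the consensus-based variant from the publications.

```python
def best_offset(bitrate_a, bitrate_b, max_shift):
    """Return the shift s (in frames) for which bitrate_b[i] best matches bitrate_a[i + s]."""
    def centered(seq):
        mean = sum(seq) / len(seq)
        return [x - mean for x in seq]

    a, b = centered(bitrate_a), centered(bitrate_b)
    best_shift, best_score = 0, float("-inf")
    for shift in range(-max_shift, max_shift + 1):
        pairs = [(a[i + shift], b[i]) for i in range(len(b)) if 0 <= i + shift < len(a)]
        if not pairs:
            continue
        score = sum(x * y for x, y in pairs) / len(pairs)  # average over the overlap
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift


# Video B starts three frames after video A and shows the same bitrate pattern (made-up data).
video_a = [10, 12, 11, 40, 15, 9, 30, 12, 11, 10, 25, 13]
video_b = video_a[3:9]
print(best_offset(video_a, video_b, 5))
```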

Publications

- F. Schweiger, G. Schroth, M. Eichhorn, A. Al-Nuaimi, B. Cizmeci, M. Fahrmair, E. Steinbach,

Fully Automatic and Frame-accurate Video Synchronization using Bitrate Sequences,

IEEE Transactions on Multimedia, vol. 15, no. 1, pp. 1-14, 2013.

- A. Al-Nuaimi, B. Cizmeci, F. Schweiger, R. Katz, S. Taifour, E. Steinbach, M. Fahrmair,

ConCor+: Robust and Confident Video Synchronization using Consensus-based Cross-Correlation,

IEEE International Workshop on Multimedia Signal Processing, Banff, AB, Canada, September 2012.

- F. Schweiger, G. Schroth, M. Eichhorn, E. Steinbach, M. Fahrmair,

Consensus-based Cross-correlation,

ACM Multimedia, Scottsdale, AZ, November 2011.

- G. Schroth, F. Schweiger, M. Eichhorn, E. Steinbach, M. Fahrmair, W. Kellerer,

Video Synchronization using Bit Rate Profiles,

IEEE International Conference on Image Processing (ICIP), Hong Kong, September 2010.

TUM Indoor Viewer

This video shows the TUM Indoor Viewer, a web-based panorama image and pointcloud viewer, developed for the NAVVIS project at the Institute for Media Technology at TUM. The viewer provides interactive browsing of more than 40,000 images from the TUMindoor dataset, recorded at the main site of Technische Universität München. The floor plans created by our mapping trolley are displayed on top of a regular maps layer (Google Maps, Satellite or Hybrid). Colored circles on the map and in the 3D view indicate locations where images are available for browsing.

The first part of the video shows views rendered from the panorama images recorded by the Ladybug3 camera system. The following part also shows the pointclouds that have been constructed from laser scanner data, overlaying them on top of the panorama images. Note that alignment between the image and the pointcloud is accurate only when the viewpoint is exactly at the location where the panorama images have been recorded.

Publications

R. Huitl, G. Schroth, S. Hilsenbeck, F. Schweiger, E. Steinbach,

TUMindoor: an extensive image and point cloud dataset for visual indoor localization and mapping,

IEEE International Conference on Image Processing (ICIP 2012), Orlando, FL, USA, September 2012.

Surprise Detection in Cognitive Mobile Robots

Our probabilistic environment representation in [1] enables a cognitive mobile robot to reason about the uncertainty of the environment's appearance and provides a framework for the computation of surprise. The robot is equipped with cameras and captures images along its way through the environment. At a dense series of viewpoints, the parameters of probability distributions are inferred from the robot's observations. The probability distributions treat the luminance and chrominance as random variables and provide a prior for future observations of the robot. If a perceived luminance or chrominance value changes the prior, surprise is generated.

This video illustrates the acquisition of an image sequence by a mobile robot. The first part of the sequence is used in order to train the prior distributions along the robot's trajectory. In the second part a human puts objects on the table in a certain time interval. The robot computes surprise maps from the captured images and the prior distributions from the internal environment representation and updates the environment model.
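One common way to quantify how strongly an observation changes a prior is the Kullback-Leibler divergence between posterior and prior. The sketch below applies this to a single pixel whose luminance is modeled by a Gaussian prior with known observation noise. The Gaussian model, the noise variance and the function names are assumptions made for illustration; the probabilistic model in [1] may differ.

```python
import math


def gaussian_update(prior_mean, prior_var, observation, noise_var):
    """Bayesian update of a Gaussian prior with one noisy observation."""
    post_var = 1.0 / (1.0 / prior_var + 1.0 / noise_var)
    post_mean = post_var * (prior_mean / prior_var + observation / noise_var)
    return post_mean, post_var


def surprise(prior_mean, prior_var, observation, noise_var):
    """KL divergence from the posterior to the prior (in nats)."""
    post_mean, post_var = gaussian_update(prior_mean, prior_var, observation, noise_var)
    return 0.5 * (math.log(prior_var / post_var)
                  + (post_var + (post_mean - prior_mean) ** 2) / prior_var
                  - 1.0)


# Prior luminance around 120; an expected and an unexpected observation (made-up values).
expected = surprise(120.0, 25.0, 121.0, 25.0)
unexpected = surprise(120.0, 25.0, 200.0, 25.0)
print(expected < unexpected)
```

Evaluating this per pixel would yield a surprise map, and the posterior can serve as the prior for the next observation.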

This work is supported, in part, within the DFG excellence initiative research cluster Cognition for Technical Systems - CoTeSys.

Publications

W. Maier, E. Steinbach,

A Probabilistic Appearance Representation and its Application to Surprise Detection in Cognitive Robots,

IEEE Transactions on Autonomous Mental Development, vol. 2, no. 4, pp. 267-281, December 2010.

Content Based Image Retrieval: CD cover search

This video demonstrates the capabilities of content-based image retrieval (CBIR) algorithms when applied to mobile multimedia search. In this scenario, we identify various CDs in real time using low-resolution video in a database of more than 390,000 CD covers.

To achieve the low query times required for instant identification of the CD, transmission delay is minimized by performing the feature quantization on the mobile device and sending compressed Bag-of-Features (BoF) vectors. To cope with the limited processing capabilities of mobile devices, we introduced the Multiple Hypothesis Vocabulary Tree [1], which allows us to perform the feature quantization at very low complexity. A further increase in retrieval performance is accomplished by integrating the probability of correct quantization into the distance calculation. With a speedup of at least a factor of 10 over the state of the art, resulting in 12 ms for 1000 descriptors on a Nexus One with a 1 GHz CPU, mobile vision-based real-time localization becomes feasible. In combination with the feature extraction proposed by Takacs et al. [2], which takes 27 ms per frame on a mobile device, extraction and quantization of 500 features can be performed at 30 fps.
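The 30 fps figure follows from the two timings given above if quantization time is assumed to scale linearly with the number of descriptors. The short calculation below makes that assumption explicit; the function name is hypothetical.

```python
def per_frame_time_ms(num_features, extraction_ms=27.0, quantization_ms_per_1000=12.0):
    """Extraction time plus quantization time, assuming linear scaling in the feature count."""
    return extraction_ms + quantization_ms_per_1000 * num_features / 1000.0


frame_ms = per_frame_time_ms(500)      # 27 ms + 12 ms * 0.5 = 33 ms
frames_per_second = 1000.0 / frame_ms  # slightly above 30 fps
print(frame_ms, frames_per_second)
```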

Publications

G. Schroth, A. Al-Nuaimi, R. Huitl, F. Schweiger, E. Steinbach,

Rapid Image Retrieval for Mobile Location Recognition,

IEEE International Conference on Acoustics, Speech and Signal Processing, Prague, Czech Republic, May 2011.

Mobile Visual Localization

Recognizing the location and orientation of a mobile device from captured images is a promising application of image retrieval algorithms. Matching the query images to an existing georeferenced database like Google Street View enables mobile search for location. Because the field of view of the mobile device changes rapidly as the user's attention shifts, very low retrieval times are essential. These can be significantly reduced by performing the feature quantization on the handheld and transferring compressed Bag-of-Features vectors to the server. To cope with the limited processing capabilities of handhelds, the quantization of high-dimensional feature descriptors has to be performed at very low complexity. To this end, we introduced the Multiple Hypothesis Vocabulary Tree (MHVT) as a step towards real-time mobile location recognition. Our experiments demonstrate that our approach reduces query times by up to a factor of 10 compared to the state of the art.

This algorithm is part of the visual location recognition framework developed at the Institute for Media Technology, which is demonstrated in the video.

Publications

G. Schroth, A. Al-Nuaimi, R. Huitl, F. Schweiger, E. Steinbach,

Rapid Image Retrieval for Mobile Location Recognition,

IEEE International Conference on Acoustics, Speech and Signal Processing, Prague, Czech Republic, May 2011.

Web-based Human Machine Interface

We work on a flexible in-vehicle Human-Machine Interface (HMI) that is based on a service-oriented architecture (SOA) enriched by graphical information. Our approach takes advantage of standard web technologies and aims at a solution that can be flexibly deployed in various application scenarios, e.g., in-vehicle driver-assistance systems, in-flight entertainment systems or, generally speaking, systems that allow multiple persons to interact with an HMI.

Furthermore, we consider the integration of consumer electronic devices. The proposed system benefits from its SOA-driven, generic approach and offers advantages such as platform independence, scalability, faster time to market and lower cost compared to application-specific approaches.

In this video, we show an excerpt of our latest improvements in the area of HMI generation.

This work is supported, in part, by the BMBF-funded research project SEIS.

Projektpraktikum Multimedia - Cooperative Haptic Games - Results Winter Semester 2009/2010

This video is a compilation of the games developed during the course "Projektpraktikum Multimedia" in the winter term 2009/10.

Besides the haptic input and force feedback, an important aspect of the developed games is the need for cooperation among the participating players in order to achieve a common goal.

The haptic controller used in this video is the Phantom Omni by Sensable Technologies.

Establishing Multimodal Telepresence Sessions with Session Initiation Protocol (SIP) and Advanced Haptic Codecs

With recent advances in telerobotics and the widespread use of Internet communication, a flexible framework for initiating, handling and terminating Internet-based telerobotic sessions becomes necessary. In our work, we explore the use of standard Internet session and transport protocols in the context of telerobotic applications. The Session Initiation Protocol (SIP) is widely used to handle multimedia teleconference sessions with audio, video or text, and provides many services that are advantageous for establishing flexible dynamic connections between heterogeneous haptic interfaces and telerobotic systems. We apply this paradigm to the creation and negotiation of haptic telepresence sessions and propose to extend this framework to work with the haptic modality. We introduce the notion of a "haptic codec" for transforming haptic data into a common, exchangeable data format and for easily integrating haptic telepresence data reduction and control approaches. We further explore using the Real-Time Transport Protocol (RTP), a standard protocol for audio/video streaming media, for the transport of teleoperation data. To demonstrate the proposed session framework, a proof-of-concept system was implemented. The software for the prototype is based on the Open Phone Abstraction Library (OPAL). OPAL's media handling routines were extended to include a haptics media type alongside the audio and video media types. A haptic device interface wrapper was developed using the OpenHaptics API, and the "haptic raw" media format was linked to the device with OPAL's device interface API. The demonstrator successfully established haptics, audio, and video media streams, transmitting haptics data at close to 1 kHz.
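To give an impression of what carrying haptic samples over RTP could look like, the sketch below builds the 12-byte fixed RTP header followed by a raw force sample. The payload layout (three 32-bit floats), the payload type 96 from the dynamic range, and the function name are assumptions made for this example; they do not describe the "haptic raw" format of the demonstrator, and the code does not use OPAL.

```python
import struct

RTP_VERSION = 2
HAPTIC_PAYLOAD_TYPE = 96  # hypothetical choice from the dynamic payload type range


def pack_haptic_rtp(sequence_number, timestamp, ssrc, force_xyz):
    """Build an RTP packet: 12-byte fixed header plus three big-endian float32 force values."""
    first_byte = RTP_VERSION << 6              # no padding, no extension, zero CSRC entries
    second_byte = HAPTIC_PAYLOAD_TYPE & 0x7F   # marker bit cleared
    header = struct.pack("!BBHII", first_byte, second_byte,
                         sequence_number & 0xFFFF, timestamp & 0xFFFFFFFF, ssrc)
    payload = struct.pack("!3f", *force_xyz)   # assumed payload layout
    return header + payload


packet = pack_haptic_rtp(1, 1000, 0x1234, (0.5, 0.0, -1.0))
print(len(packet))
```

At a 1 kHz sample rate, one such packet per sample would be sent every millisecond, which is why compact payloads and haptic data reduction matter for this media type.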

IT_Motive 2020 Demonstrators

A few decades ago, technical innovation in the automotive industry was generally based on mechanical improvements; today’s car innovations are mainly (about 90 %) driven by electronics and software. Since software and electronics have much faster innovation cycles than a car’s typical lifecycle, there is an increasing span of a car’s life during which the built-in electronic and software components no longer meet the owner’s requirements. Additionally, it takes a significant amount of time for car manufacturers to test innovations that are new to the market and integrate them into their new products. To make things worse, today’s car IT architectures are hard to extend, since they are built upon many different custom products (e.g., up to seven different buses and about 50 to 70 different electronic control units (ECUs)). In our work, we introduced a novel car IT architecture based on general-purpose hardware and an Ethernet-based communication network.

The architecture has been developed within the three-year interdisciplinary research project "IT_Motive 2020", based on the Car@TUM cooperation between the Technische Universität München, the BMW Group and the BMW Group Forschung und Technik GmbH. TUM was represented by the Lehrstuhl für Kommunikationsnetze, Lehrstuhl für Medientechnik, Lehrstuhl für Integrierte Systeme, Lehrstuhl für Datenverarbeitung, Lehrstuhl für Realzeit-Computersysteme and Fachgebiet Höchstfrequenztechnik.

Image-based Environment Modeling at AUTOMATICA 2008

In 2008, at AUTOMATICA, the international trade fair for automation and mechatronics, we demonstrated the acquisition of image-based environment representations using a mobile robot platform. A Pioneer 3-DX equipped with two cameras captured a dense set of images of a typical household scene that contained two glasses. Due to the refraction of light and specular highlights, it is usually tedious to acquire acceptable virtual representations of glasses. This video shows that our representation, which stores the densely captured images and, in addition, a depth map and the camera pose for each image, allows for the computation of realistic virtual images in a continuous viewpoint space. The positions where the robot captured the images are illustrated by white and colored squares in the sequence of virtual images. At the end of the video, the application of virtual view interpolation to surprise detection is shown.

The visual localization of the camera images was done by our project partners Elmar Mair and Darius Burschka from Informatik VI, Faculty of Informatics, TU München.

This work is supported, in part, within the DFG excellence initiative research cluster Cognition for Technical Systems - CoTeSys.