Open Theses

Important remark on this page

The following list is by no means exhaustive or complete. There is always some student work to be done in various research projects, and many of these projects are not listed here.

Don't hesitate to email any member of the chair to ask about currently available topics in their field of research. Alternatively, you can write to this email address, which automatically forwards your message to all members of the chair.

You can also subscribe to the chair's open thesis topics list to get a notification whenever a new topic is posted. Click here to subscribe.

Abbreviations:

- PhD = PhD Dissertation

- BA = Bachelorarbeit, Bachelor's Thesis

- MA = Masterarbeit, Master's Thesis

- GR = Guided Research

- CSE = Computational Science and Engineering

Cloud Computing / Edge Computing / IoT / Distributed Systems

Summary:

In the current technological landscape, Generative AI (GenAI) workloads and models have gained widespread attention and popularity. Large Language Models (LLMs) have emerged as the dominant models driving these GenAI applications. Most LLMs are GPT-like architectures consisting of multiple decoder layers. In this project, we study the performance and sustainability gains that LLMs can achieve by using the Advanced Matrix Extensions (AMX) CPU technology. AMX is a recent CPU accelerator technology aimed at improving the performance of AI workloads.

Testing Environment:

Intel Labs in Bangalore

Project Period:

6 to 9 months

Contact:

TUM: Prof. Michael Gerndt

Intel Labs: Mohamed Elsaid, Ph.D (mohamed.elsaid@intel.com)

Serverless computing (FaaS, Function as a Service) is emerging as a new paradigm and execution model for the next generation of cloud-native computing due to its many advantages, such as cost-effective pay-per-use billing, high scalability, and ease of deployment. Serverless functions are designed to be fine-grained and event-driven so that they can scale elastically to accommodate workload changes. However, current public serverless computing platforms only support CPUs, not accelerators such as GPUs and TPUs. With the increasing number of deep learning applications in the cloud, it is imperative to offer GPU support.

Moreover, GPU utilization within many current Kubernetes-based serverless computing platforms is suboptimal. This is primarily because most functions perform deep learning inference, which often fails to harness the full capacity of a GPU. As a result, there is a pressing need for more fine-grained GPU-sharing mechanisms for serverless functions. Various GPU-sharing approaches exist, such as rCUDA [1], cGPU [2], qGPU [3], vCUDA [4], MIG [5], and FaST-GShare [6]. Each of these mechanisms has distinct advantages for GPU sharing, and without a proper understanding of them, it is difficult for users to select an appropriate sharing solution.

We aim to study these advantages comprehensively and to design a unified platform that integrates these mechanisms and automatically selects a suitable mechanism for each function according to its characteristics, achieving high GPU utilization while guaranteeing function SLOs.

Similar platform: https://github.com/elastic-ai/elastic-gpu

[2] https://github.com/lvmxh/cgpu

https://www.alibabacloud.com/zh/solutions/cgpu

[3] https://www.tencentcloud.com/document/product/457/42973?lang=en&pg=

https://github.com/elastic-ai/elastic-gpu

[4] https://github.com/tkestack/gpu-manager

Lin Shi, Hao Chen and Jianhua Sun, "vCUDA: GPU accelerated high performance computing in virtual machines," 2009 IEEE International Symposium on Parallel & Distributed Processing, Rome, 2009, pp. 1-11, doi: 10.1109/IPDPS.2009.5161020.

[5] https://docs.nvidia.com/datacenter/cloud-native/kubernetes/latest/index.html#

https://www.nvidia.com/en-us/technologies/multi-instance-gpu/

https://docs.nvidia.com/datacenter/tesla/mig-user-guide/index.html

Goals:

1. Choose any two mechanisms and integrate them into a serverless computing platform based on the elastic-gpu framework.

2. Prototype the solution on GCP.

Requirements

- Basic knowledge of FaaS platforms. Knowledge of Knative/OpenFaaS is beneficial.

- Knowledge of Docker and K8s.

- TensorFlow or PyTorch.

- Basic knowledge of CUDA and NVIDIA GPUs.

We offer:

- A thesis in an area that is in high demand in industry

- Our expertise in data science and systems areas

- Supervision and support during the thesis

- Access to different systems required for the work

- Opportunity to publish a research paper with your name on it

What we expect from you:

- Devotion and persistence (= full-time thesis)

- Critical thinking and initiative

- Attendance of feedback discussions on the progress of your thesis

Apply now by submitting your CV and grade report to Jianfeng Gu (jianfeng.gu@tum.de).

The topic focuses on vertical and horizontal scaling for deep learning inference applications in serverless computing platforms. Currently, scaling in Kubernetes (K8s) and serverless frameworks is mainly horizontal. However, deep learning (DL) applications usually have models with a large number of parameters that consume significant amounts of GPU memory. Horizontal scaling of DL applications means that each replica must load its own copy of the model parameters, which exacerbates memory consumption. Meanwhile, most dedicated inference engines and systems in the cloud, such as NVIDIA Triton, KServe, and KubeRay, use vertical scaling, i.e., they allocate more GPU resources to a replica to meet an increasing request load. Both types of scaling have their own advantages. The topic revolves around designing an auto-scaling system for deep learning applications that supports hybrid auto-scaling in serverless computing platforms, enabling SLO-aware and seamless scaling. The relevant techniques include batching systems, tensor migration, auto-scaling algorithms (vertical and horizontal) in K8s, and tensor storage.
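To make the memory argument concrete, here is a back-of-envelope sketch that compares serving the same number of concurrent requests with several replicas (horizontal) versus one larger replica (vertical). It assumes a hypothetical constant per-request memory cost (activations/KV cache) and ignores framework overhead and fragmentation; the class, function names, and numbers are illustrative, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    weights_gib: float      # model parameters, loaded once per replica
    per_request_gib: float  # assumed constant activation/KV-cache cost per in-flight request
    replicas: int
    batch_per_replica: int

    def gpu_memory_gib(self) -> float:
        # Every replica holds its own copy of the weights plus memory for its batch.
        per_replica = self.weights_gib + self.per_request_gib * self.batch_per_replica
        return self.replicas * per_replica


def compare(weights_gib: float, per_request_gib: float, concurrent: int, replicas: int):
    """Serve `concurrent` requests with `replicas` copies (horizontal) or one copy (vertical)."""
    if concurrent % replicas:
        raise ValueError("this toy model requires requests to split evenly across replicas")
    horizontal = Deployment(weights_gib, per_request_gib, replicas, concurrent // replicas)
    vertical = Deployment(weights_gib, per_request_gib, 1, concurrent)
    return horizontal.gpu_memory_gib(), vertical.gpu_memory_gib()


# Hypothetical numbers, for illustration only.
h, v = compare(weights_gib=14.0, per_request_gib=0.5, concurrent=32, replicas=4)
print(f"horizontal: {h:.1f} GiB, vertical: {v:.1f} GiB")  # horizontal: 72.0 GiB, vertical: 30.0 GiB
```

In this toy model the gap is exactly (replicas - 1) extra copies of the weights, which is the overhead a hybrid auto-scaler would try to avoid when GPU memory is the bottleneck. Vertical scaling has its own limits (a single GPU's capacity, batching latency), which is why the topic targets a combination of both.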

Goals:

1. Propose a vertical scaling/batching mechanism on top of horizontal scaling in serverless computing/K8s for more efficient and SLO-aware deep learning inference and higher GPU utilization.

2. Experiments and analysis.

Requirements

- Familiarity with C++, Python, Linux shell, K8s, and containers.

- Familiarity with TensorFlow or PyTorch.

- Basic knowledge of CUDA and NVIDIA GPUs.

We offer:

- A thesis in an area that is in high demand in industry

- Our expertise in data science and systems areas

- Supervision and support during the thesis

- Access to different systems required for the work

- Opportunity to publish a research paper with your name on it

What we expect from you:

- Devotion and persistence (= full-time thesis)

- Critical thinking and initiative

- Attendance of feedback discussions on the progress of your thesis

Apply now by submitting your CV and grade report to Jianfeng Gu (jianfeng.gu@tum.de).

Currently, more and more autonomous driving systems use end-to-end model frameworks [1] [2] [3], and these models keep growing in size. However, the GPU resources available in vehicles are very limited. Efficiently deploying large end-to-end models on these GPUs is highly challenging and requires more principled, finer-grained resource allocation to improve GPU utilization and task throughput. This research will investigate how to design and implement an efficient and general mechanism for automatic GPU resource allocation, based on end-to-end model frameworks and fine-grained GPU resource reuse.

[1] https://github.com/OpenDriveLab/End-to-end-Autonomous-Driving

[2] https://developer.nvidia.com/blog/end-to-end-driving-at-scale-with-hydra-mdp/

https://github.com/NVlabs/Hydra-MDP

[3] https://github.com/OpenDriveLab/UniAD

Goals:

1. Deploy an end-to-end autonomous driving framework on a resource-limited GPU device.

2. Design an automatic fine-grained GPU allocation mechanism/optimization method for end-to-end autonomous driving frameworks.

3. Experiments and performance analysis.

Requirements

- Familiarity with C++, Python, and Linux shell.

- TensorFlow or PyTorch.

- Basic knowledge of CUDA and NVIDIA GPUs.

We offer:

- A thesis in an area that is in high demand in industry

- Our expertise in data science and systems areas

- Supervision and support during the thesis

- Access to different systems required for the work

- Opportunity to publish a research paper with your name on it

What we expect from you:

- Devotion and persistence (= full-time thesis)

- Critical thinking and initiative

- Attendance of feedback discussions on the progress of your thesis

Apply now by submitting your CV and grade report to Jianfeng Gu (jianfeng.gu@tum.de).

Background

With the rapid development of Cloud computing in recent years, attempts have been made to bridge the widening gap between the escalating demand for complex simulations that must meet tight deadlines and the constraints of a static HPC infrastructure. The approach is a Hybrid Infrastructure that combines the current HPC clusters with the seemingly infinite resources of the Cloud. The Cloud is flexible and elastic and can be scaled as required.

The BMW Group, which runs thousands of compute-intensive CAE (Computer-Aided Engineering) simulations every day, needs to leverage the various offerings of the Cloud along with optimal utilization of its current HPC clusters to meet dynamic market demands. As such, research is being carried out on scheduling moldable CAE workflows in a hybrid setup to find optimal solutions for different objectives under various constraints.

Goals

- The aim of this work is to develop and implement scheduling algorithms for CAE workflows on a Hybrid Cloud in an existing simulator, using meta-heuristic approaches such as Ant Colony Optimization or Particle Swarm Optimization. These algorithms need to be compared against baseline algorithms, some of which have already been implemented using non-meta-heuristic approaches.

- The scheduling algorithms should be based on multi-objective optimization methods and be able to handle multiple objectives under strict constraints.

- The effects of workflow moldability, with regard to the type and number of required resources and the extent to which a workflow is moldable, are to be studied and analyzed to find optimal solutions in the solution space.

- Various Cloud offerings should be studied, and the scheduling algorithms should take their different billing and infrastructure models into account when making decisions about resource provisioning and scheduling.
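As a starting point, the sketch below shows the basic Ant Colony Optimization loop for a much simpler problem than the one described above: assigning independent tasks to resources so that the makespan is minimal. Moldability, multiple objectives, billing models, and constraints are deliberately left out, and the runtimes are hypothetical. This is a generic textbook ACO under those assumptions, not the existing simulator's algorithm or interface.

```python
import itertools
import random


def makespan(assignment, durations):
    """durations[t][r] is the runtime of task t on resource r; returns the busiest resource's load."""
    loads = [0.0] * len(durations[0])
    for task, res in enumerate(assignment):
        loads[res] += durations[task][res]
    return max(loads)


def aco_schedule(durations, ants=20, iterations=50, alpha=1.0, beta=1.0, rho=0.1, seed=0):
    """Ant Colony Optimization for assigning independent tasks to resources (min. makespan)."""
    rng = random.Random(seed)
    n_tasks, n_res = len(durations), len(durations[0])
    tau = [[1.0] * n_res for _ in range(n_tasks)]  # pheromone for each (task, resource) pair
    best, best_cost = None, float("inf")
    for _ in range(iterations):
        colony = []
        for _ in range(ants):
            # Each ant picks a resource per task, biased by pheromone and by 1/runtime.
            assignment = [
                rng.choices(range(n_res),
                            weights=[tau[t][r] ** alpha * (1.0 / durations[t][r]) ** beta
                                     for r in range(n_res)])[0]
                for t in range(n_tasks)
            ]
            cost = makespan(assignment, durations)
            colony.append((cost, assignment))
            if cost < best_cost:
                best, best_cost = assignment, cost
        # Evaporation, then deposits inversely proportional to each ant's makespan.
        for t in range(n_tasks):
            for r in range(n_res):
                tau[t][r] *= 1.0 - rho
        for cost, assignment in colony:
            for t, r in enumerate(assignment):
                tau[t][r] += 1.0 / cost
    return best, best_cost


# Hypothetical runtimes of 4 tasks on an HPC partition (column 0) and a cloud pool (column 1).
durations = [[2.0, 3.0], [4.0, 6.0], [3.0, 5.0], [1.0, 2.0]]
best, cost = aco_schedule(durations)
# Brute force is only feasible for toy instances; it serves as a reference here.
optimum = min(makespan(a, durations) for a in itertools.product(range(2), repeat=len(durations)))
print(best, cost, optimum)
```

The same skeleton (construct solutions probabilistically, evaluate, evaporate, deposit) carries over to richer encodings, e.g. choosing a resource type and a degree of parallelism per moldable task, with a multi-objective evaluation instead of a single makespan.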

Requirements

- Experience or knowledge in Scheduling algorithms

- Experience or knowledge in the principles of Cloud Computing

- Knowledge of or interest in heuristic and meta-heuristic approaches

- Knowledge of algorithmic analysis

- Good knowledge of Python

We offer:

- Collaboration with BMW and its researchers

- Work with industry partners and giants of Cloud Computing such as AWS

- Solve tangible industry-specific problems

- Opportunity to publish a paper with your name on it

What we expect from you:

- Devotion and persistence (= full-time thesis)

- Critical thinking and initiative

- Attendance of feedback discussions on the progress of your thesis

The work is a collaboration between TUM and BMW

Apply now by submitting your CV and grade report to Srishti Dasgupta (srishti.dasgupta@bmw.de)

Background: Social good applications such as environmental monitoring require several technologies, including federated learning. Implementing federated learning requires a careful balance between the communication and computation costs involved in the hidden layers. Identifying the optimal values for such learning architectures remains a challenge.

Keywords: Edge, Federated Learning, Optimization, Social Good

Research Questions:

1. How can a decentralized federated learning framework for social good applications be designed?

2. Which optimization parameters need to be considered to address this issue efficiently?

3. Are there optimization algorithms that can deliver a trade-off between the communication and computation parameters?

Goals: The major goals of the proposed research are given below:

1. To develop a framework that delivers a decentralized federated learning platform for social good applications.

2. To develop at least one optimization strategy that addresses the existing tradeoffs in hidden neural network layers.

3. To compare the efficiency of the algorithms with respect to the identified optimization parameters.
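Decentralized federated learning replaces the central aggregation server with peer-to-peer exchange; a common building block for this is gossip averaging, where each node repeatedly averages its parameters with its neighbours. The sketch below shows only that communication step on a hypothetical ring of four nodes with made-up parameter vectors. Local training, which would run between rounds, is omitted; how many local steps to run per gossip round is one concrete knob in the communication/computation trade-off raised in the research questions.

```python
def gossip_round(models, neighbours):
    """One synchronous gossip-averaging round.

    models[i] is node i's parameter vector; neighbours[i] lists the peers node i talks to.
    Every node replaces its parameters by the plain average over itself and its peers.
    """
    updated = []
    for i, own in enumerate(models):
        group = [own] + [models[j] for j in neighbours[i]]
        updated.append([sum(vals) / len(group) for vals in zip(*group)])
    return updated


def ring(n):
    """Each node exchanges parameters with its left and right neighbour."""
    return [[(i - 1) % n, (i + 1) % n] for i in range(n)]


# Hypothetical locally trained 2-parameter models on 4 edge nodes.
models = [[0.0, 1.0], [4.0, 1.0], [8.0, 1.0], [12.0, 1.0]]
for _ in range(30):
    models = gossip_round(models, ring(4))
print(models)  # every node ends up (numerically) at the global average [6.0, 1.0]
```

Plain averaging preserves the global mean only on regular graphs such as this ring; on irregular topologies, doubly stochastic weights (e.g. Metropolis-Hastings weights) are commonly used instead.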

Expectations: Students are expected to be interested in developing frameworks with an emphasis on federated learning, to work with commitment, to participate in the upcoming discussions and feedback sessions (mostly online), and to meet the deadlines specified in the meetings.

For more information, contact: Prof. Michael Gerndt (gerndt@tum.de) and Shajulin Benedict (shajulin@iiitkottayam.ac.in)

Modeling and Analysis of HPC Systems/Applications

Background:

The end of Dennard scaling, the slowdown of Moore's law, and emerging applications such as LLMs have caused considerable changes in HPC hardware architectures. One such example can be seen in FPUs: modern HPC processors now offer a variety of arithmetic operations with different precisions. This is because (1) arithmetic precision trades off against performance (or energy efficiency), and (2) it is well known that emerging ML applications generally do not need higher precisions such as the traditional FP32/FP64.

Goal and Approach:

You will explore the use of lower-precision arithmetic in scientific computing, with a particular focus on FFTs (Fast Fourier Transforms). To this end, you will look into FFT kernels used in HPC applications developed in the Plasma-PEBS project (https://cordis.europa.eu/project/id/101093261) or the DaREXA-F project (https://gauss-allianz.de/de/project/title/DaREXA-F) and explore the use of lower-precision arithmetic in them. More specifically, you will modify those kernels to test various precision combinations for the variables used in them (per loop or at their definition) and observe performance, energy, and the error of the simulation output (compared with FP64) for various inputs. You will select several hardware platforms available in CAPS Cloud (https://www.ce.cit.tum.de/caps/hw/caps-cloud/) or LRZ Beast machines (https://www.lrz.de/presse/ereignisse/2020-11-06_BEAST/) to test your code.
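The error measurement described above (output of a reduced-precision kernel compared with an FP64 reference) can be sketched in a few lines. Python's standard library has no single-precision type, so this sketch emulates FP32 by rounding every stored intermediate to the nearest IEEE-754 single via `struct`; because each complex multiply is evaluated in double before rounding, it only approximates real FP32 hardware. The radix-2 FFT and the random input are illustrative stand-ins, not the project kernels, which you would modify directly in C/Fortran.

```python
import cmath
import math
import random
import struct


def to_fp32(x: float) -> float:
    """Round a Python float (IEEE-754 double) to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def rnd(z: complex, fp32: bool) -> complex:
    """Emulate FP32 storage of a complex value by rounding its real and imaginary parts."""
    return complex(to_fp32(z.real), to_fp32(z.imag)) if fp32 else z


def fft(x, fp32=False):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [rnd(x[0], fp32)]
    even, odd = fft(x[0::2], fp32), fft(x[1::2], fp32)
    out = [0j] * n
    for k in range(n // 2):
        w = rnd(cmath.exp(-2j * math.pi * k / n), fp32)  # twiddle factor
        t = rnd(w * odd[k], fp32)
        out[k] = rnd(even[k] + t, fp32)
        out[k + n // 2] = rnd(even[k] - t, fp32)
    return out


def rel_l2_error(approx, ref):
    num = math.sqrt(sum(abs(a - r) ** 2 for a, r in zip(approx, ref)))
    return num / math.sqrt(sum(abs(r) ** 2 for r in ref))


random.seed(0)
signal = [complex(random.uniform(-1.0, 1.0), 0.0) for _ in range(256)]
err = rel_l2_error(fft(signal, fp32=True), fft(signal))  # FP32-emulated vs. FP64 baseline
print(f"relative L2 error, FP32 vs FP64: {err:.1e}")
```

Mixed-precision variants (e.g. FP32 data with FP64 twiddle factors) can be tried by passing a different flag per operation, which mirrors the per-variable precision combinations described above.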

Requirements:

- C/Fortran programming experience

- In general, we are happy to guide anyone who is self-motivated, capable of critical thinking, and curious about computer science.

- We don't want you to be too passive: you are expected to think and try things yourself to some extent, instead of following our instructions step by step.

- If your main goal is passing with any grade (e.g., 2.3), we'd suggest you look into a different topic.

- If you are interested in getting a PhD degree in HPC, this will be a good topic.

Contact:

Dr. Eishi Arima, eishi.arima@tum.de, https://www.ce.cit.tum.de/caps/mitarbeiter/eishi-arima/

Prof. Dr. Martin Schulz

Background:

HPC systems are becoming increasingly heterogeneous as a consequence of the end of Dennard scaling, the slowdown of Moore's law, and various emerging applications, including LLMs, HPDAs, and others. At the same time, HPC systems consume a tremendous amount of power (over 20 MW in some cases), which requires sophisticated power management schemes at different levels, from individual node components to the entire system. Driven by these trends, we are studying sophisticated resource and power management techniques specifically tailored to modern HPC systems, as part of the Regale project (https://regale-project.eu/).

Research Summary:

In this work, we will focus on co-scheduling (co-locating multiple jobs on a node to minimize resource waste) and/or power management on HPC systems, with a particular focus on heterogeneous computing systems consisting of multiple different processors (CPU, GPU, etc.) or memory technologies (DRAM, NVRAM, etc.). Recent hardware components generally support a variety of resource partitioning and power control features, such as cache/bandwidth partitioning, compute resource partitioning, clock scaling, power/temperature capping, and others, controllable via privileged software. You will first select some of them and investigate their impact on HPC applications in terms of performance, power, energy, etc. You will then build an analytical or empirical model to predict this impact and develop a control scheme that optimizes the knob settings using your model. You will use hardware available in CAPS Cloud (https://www.ce.cit.tum.de/caps/hw/caps-cloud/) or LRZ Beast machines (https://www.lrz.de/presse/ereignisse/2020-11-06_BEAST/) to conduct your study.
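The "model, then optimize the knob" workflow can be illustrated with a deliberately simple analytical model for a single knob, a power cap. The model below is hypothetical: it assumes that only the compute-bound phase of a job slows down, with speed proportional to the dynamic power share, and that the device draws exactly its cap. A real study would replace `toy_model` with a model fitted to measurements. The control step picks the cap with minimal predicted energy whose predicted slowdown stays within a bound.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    runtime_s: float
    power_w: float

    @property
    def energy_j(self) -> float:
        return self.runtime_s * self.power_w


def toy_model(cap_w, compute_s=60.0, memory_s=40.0, tdp_w=300.0, idle_w=50.0):
    """Hypothetical analytical model of one job under a power cap.

    Assumes only the compute-bound phase slows down, with speed proportional to the
    dynamic power share (cap - idle) / (TDP - idle), and that the device draws its cap.
    """
    speed = (cap_w - idle_w) / (tdp_w - idle_w)
    return Prediction(runtime_s=compute_s / speed + memory_s, power_w=cap_w)


def choose_cap(model, caps, max_slowdown=1.2):
    """Control step: minimal predicted energy subject to a bound on predicted slowdown."""
    baseline = model(max(caps)).runtime_s
    feasible = [c for c in caps if model(c).runtime_s <= max_slowdown * baseline]
    return min(feasible, key=lambda c: model(c).energy_j)


caps = [150.0, 200.0, 250.0, 300.0]
print(choose_cap(toy_model, caps))                    # 250.0
print(choose_cap(toy_model, caps, max_slowdown=1.5))  # 200.0
```

With several knobs (e.g. partition sizes of co-located jobs plus per-device caps), the same structure applies, but the search space grows combinatorially, which is where learned models and smarter search strategies come in.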

Requirements:

- Basic knowledge/skills in computer architecture, high performance computing, and statistics

- Basic knowledge/skills in related areas would also help (e.g., machine learning, control theory, etc.).

- In general, we are happy to guide anyone who is self-motivated, capable of critical thinking, and curious about computer science.

- We don't want you to be too passive: you are expected to think and try things yourself to some extent, instead of following our instructions step by step.

- If your main goal is passing with any grade (e.g., 2.3), we'd suggest you look into a different topic.

See also our former studies:

- Urvij Saroliya, Eishi Arima, Dai Liu, Martin Schulz "Hierarchical Resource Partitioning on Modern GPUs: A Reinforcement Learning Approach" In Proceedings of IEEE International Conference on Cluster Computing (CLUSTER), pp.185-196, Nov. (2023)

- Issa Saba, Eishi Arima, Dai Liu, Martin Schulz "Orchestrated Co-Scheduling, Resource Partitioning, and Power Capping on CPU-GPU Heterogeneous Systems via Machine Learning" In Proceedings of 35th International Conference on Architecture of Computing Systems (ARCS), pp.51-67, Sep. (2022)

- Eishi Arima, Minjoon Kang, Issa Saba, Josef Weidendorfer, Carsten Trinitis, Martin Schulz "Optimizing Hardware Resource Partitioning and Job Allocations on Modern GPUs under Power Caps" In Proceedings of International Conference on Parallel Processing Workshops, no. 9, pp.1-10, Aug. (2022)

- Eishi Arima, Toshihiro Hanawa, Carsten Trinitis, Martin Schulz "Footprint-Aware Power Capping for Hybrid Memory Based Systems" In Proceedings of the 35th International Conference on High Performance Computing, ISC High Performance (ISC), pp.347-369, Jun. (2020)

Contact:

Dr. Eishi Arima, eishi.arima@tum.de, https://www.ce.cit.tum.de/caps/mitarbeiter/eishi-arima/

Prof. Dr. Martin Schulz

Description:

Benchmarks are an essential tool for performance assessment of HPC systems. During the procurement process of HPC systems, both benchmarks and proxy applications are used to assess the system to be procured. New generations of HPC systems often serve the current and evolving needs of the applications for which the system is procured. Therefore, with each new generation of HPC systems, the proxy applications and benchmarks used to assess the systems' performance are also selected for the specific needs of that system. Only a few of these have persisted over longer periods of time. At the same time, the quality of benchmarks is typically not questioned, as they are seen merely as representatives of specific performance indicators.

This work aims to provide a more systematic approach to evaluating benchmarks that target the memory subsystem, looking at capacity, latency, and bandwidth.

Problem statement:

How can benchmarks used to assess memory performance, including cache usage, be systematically compared with each other?

Description:

Benchmarks are an essential tool for performance assessment of HPC systems. During the procurement process of HPC systems, both benchmarks and proxy applications are used to assess the system to be procured. With new generations of HPC systems, the selected proxy applications and benchmarks are often exchanged, and benchmarks for the specific needs of the system are selected. Only a few of these have persisted over longer periods of time. At the same time, the quality of benchmarks is typically not questioned, as they are seen merely as representatives of specific performance indicators.

This work aims to provide a more systematic approach to evaluating benchmarks that target network performance, namely MPI (Message Passing Interface), both in functional tests and in benchmark applications.

Problem statement:

How can benchmarks used to assess network performance with MPI routines be systematically compared with each other?

Description:

Benchmarks are an essential tool for performance assessment of HPC systems. During the procurement process of HPC systems, both benchmarks and proxy applications are used to assess the system to be procured. New generations of HPC systems often serve the current and evolving needs of the applications for which the system is procured. Therefore, with each new generation of HPC systems, the proxy applications and benchmarks used to assess the systems' performance are also selected for the specific needs of that system. Only a few of these have persisted over longer periods of time. At the same time, the quality of benchmarks is typically not questioned, as they are seen merely as representatives of specific performance indicators.

This work aims to evaluate benchmarks for input and output (I/O) performance, providing a systematic approach to evaluating benchmarks that target read and write performance with the different characteristics seen in application behavior and mimicked by benchmarks.

Problem statement:

How can benchmarks used to assess I/O performance be systematically compared with each other?

Memory Management and Optimizations on Heterogeneous HPC Architectures

GPUScout is a performance analysis tool developed at TUM that analyzes NVIDIA CUDA kernels with the aim of identifying common pitfalls related to data movement on a GPU. It combines static SASS code analysis, PC stall sampling, and NCU metrics collection to identify bottlenecks, assess their severity, and provide additional information about the identified code section. Currently, it presents its findings in textual form, printed in the terminal.

The goal of this thesis is to explore options for extending GPUScout to support AMD GPUs. This includes evaluating strategies for interacting with the APIs and debug interfaces available on AMD GPUs and for using them to provide insight into the source code: static code analysis combined with some kind of metrics or sampling mechanism, with the goal of reporting the bottlenecks in a kernel, their location in the source code, and their severity as indicated by the metrics. Depending on the profiling and debugging tools and interfaces that AMD supports, the solution may be more or less similar to the current GPUScout logic. As a GR, this work can focus on researching the functionality and remain in the design/PoC phase.

Contact:

In case of interest, please contact Stepan Vanecek (stepan.vanecek@tum.de) at the Chair for Computer Architecture and Parallel Systems (Prof. Schulz) and attach your CV & transcript of records.

Published on 25.1.2024 (30)

GPUScout is a performance analysis tool developed at TUM that analyzes NVIDIA CUDA kernels with the aim of identifying common pitfalls related to data movement on a GPU. It combines static SASS code analysis, PC stall sampling, and NCU metrics collection to identify bottlenecks, assess their severity, and provide additional information about the identified code section. Currently, it presents its findings in textual form, printed in the terminal. While this output provides all the necessary information, it should be presented in a more user-friendly way.

The goal of this work is to design a user interface that offers users greater support in identifying GPU memory-related bottlenecks and helps them mitigate these bottlenecks. We will develop a concept of what information should be presented and in which form, and will implement a prototype to verify the concept. An example optimization procedure will be conducted to showcase the effectiveness of the implemented frontend.

Contact:

In case of interest, please contact Stepan Vanecek (stepan.vanecek@tum.de) at the Chair for Computer Architecture and Parallel Systems (Prof. Schulz) and attach your CV & transcript of records.

Updated on 25.1.2024 (24)

Background:

The DEEP-SEA (https://www.deep-projects.eu) project is a joint European effort of about a dozen leading universities and research institutions to develop software for upcoming Exascale supercomputing architectures. CAPS TUM, as a member of the project, is responsible for developing an environment for analyzing application and system performance in terms of data movement. Data movement is very costly compared to computation. Therefore, suboptimal memory access patterns in an application can have a huge negative impact on overall performance. Conversely, analyzing and optimizing data movement can increase the overall performance of parallel applications massively.

We develop a toolchain with the goal of creating a full, in-depth analysis of memory-related application behaviour. It consists of the tools Mitos (https://github.com/caps-tum/mitos), sys-sage (https://github.com/caps-tum/sys-sage), and MemAxes (https://github.com/caps-tum/MemAxes). Mitos collects information about memory accesses; sys-sage captures the memory and compute topology and capabilities of a system and links the hardware to the performance data; and finally, MemAxes analyzes and visualizes the outputs of the aforementioned projects.

There is an existing PoC of these tools, and we plan to extend and improve the projects substantially to fit the needs of state-of-the-art and future HPC systems, which are expected to form the core of upcoming Exascale supercomputers. Our work and research touch on modern heterogeneous architectures, patterns, and designs, and aim to enable users to run extreme-scale applications while utilizing as much of the underlying hardware as possible.

Context:

- The current implementation of Mitos/MemAxes collects PEBS samples of memory accesses (via perf), i.e. every n-th memory operation is measured and stored.

- Collecting aggregate data alongside PEBS samples could help improve the overall understanding of system and application behaviour.

Tasks/Goals:

- Analyse which aggregate data are meaningful and possible to collect (total traffic, BW utilization, number of LD/ST, ...?) and how to collect them (PAPI? LIKWID? perf?)

- Ensure that these measurements don't interfere with the existing collection of PEBS samples.

- Design and implement a low-overhead solution.

- Find a way to visualise/present the data in the MemAxes tool (or in a different visualisation tool if MemAxes is not suitable).

- Finally, show how the newly collected data help users understand the system, or hint to users whether and how to optimize.

Contact:

In case of interest, please contact Stepan Vanecek (stepan.vanecek@tum.de) at the Chair for Computer Architecture and Parallel Systems (Prof. Schulz).

Updated on 12.09.2022

Modern server-grade CPUs often exceed 100 cores per chip, which necessitates a fast inter-core network. The ARM Coherent Mesh Network (CMN) is a mesh-based on-chip network used in a 128-core ARM processor (Ampere Altra Max). In previous work at CAPS, the initial topology and component placement (CPU cores, memory controllers, L3 cache controllers, etc.) were determined, and it was shown, for example, that latency-sensitive applications benefit from being scheduled topologically close to the L3 cache controllers.

The goal of this Guided Research (potentially also Master Thesis) is to further explore the CMN and its impact on workload performance. Specifically, this work includes two steps:

1. Determine Mapping between L3 Cache Controllers and Physical Memory Addresses: Any memory access that reaches the L3 cache is handled by one of the CMN cache controllers. As these are distributed across the CMN, some CPU cores have a longer routing path than others to the cache controller handling the current cache line. Knowing the mapping between physical memory addresses and L3 cache controllers could allow applications to minimise L3 cache routing.

2. Use Mapping to Optimise Core-to-Core Communication: Multi-core applications often communicate via shared memory, for example using OpenMP, and therefore utilise the L3 cache. For latency-sensitive code, such as a hot loop containing a synchronisation call, minimising L3 cache-line exchange could measurably improve performance. Initially, this mapping should be exploited using a synthetic benchmark. Later on, the goal is to implement a CMN-aware OpenMP barrier, although this step is optional.
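Once a mapping is known, step 2 essentially becomes a placement problem: among candidate cache lines for a shared synchronisation variable, pick the one whose home L3 controller is closest to the cores that use it. The sketch below assumes hop count equals Manhattan distance on the mesh (dimension-ordered routing), a hypothetical 4x4 mesh with a controller at each corner, and a purely hypothetical XOR-fold hash standing in for the real address-to-controller mapping, which is exactly what step 1 has to determine.

```python
from typing import Callable, Dict, List, Tuple

Coord = Tuple[int, int]


def hops(a: Coord, b: Coord) -> int:
    """Hop count between two mesh crosspoints, assuming dimension-ordered (XY) routing."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def best_line(candidates: List[int], home_of: Callable[[int], int],
              ctrl_pos: Dict[int, Coord], cores: List[Coord]) -> int:
    """Pick the candidate cache-line address whose home L3 controller is closest (total hops)."""
    return min(candidates, key=lambda addr: sum(hops(c, ctrl_pos[home_of(addr)]) for c in cores))


def toy_home_of(addr: int, n_ctrl: int = 4, line: int = 64) -> int:
    """HYPOTHETICAL address-to-controller hash (XOR-fold of line-index digits), not the CMN's.

    n_ctrl must be a power of two so that the XOR result stays in range.
    """
    idx, h = addr // line, 0
    while idx:
        h ^= idx % n_ctrl
        idx //= n_ctrl
    return h


# Hypothetical 4x4 mesh with one L3 controller per corner and two communicating cores.
ctrl_pos = {0: (0, 0), 1: (3, 0), 2: (0, 3), 3: (3, 3)}
cores = [(3, 3), (3, 2)]
candidates = [0, 64, 128, 192]  # four adjacent cache lines of a candidate buffer
print(best_line(candidates, toy_home_of, ctrl_pos, cores))  # 192
```

A CMN-aware barrier could apply the same idea by allocating its flag(s) on a cache line homed near the participating cores; whether hop count is the right cost metric on the real mesh would itself be part of the evaluation.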

Contact:

If you are interested, please contact Philipp Friese (<firstname>.<lastname>@tum.de) at the Chair of Computer Architecture and Parallel Systems (Prof. Schulz). Please also attach your transcript of records and CV.

Published on 29.05.2024

Context:

- hwloc gives a good overview of the CPU memory hierarchy. This information is used by the sys-sage library to represent the CPU.

- However, it provides very little information about the memory and compute unit hierarchy/grouping on GPUs. Therefore, we need a different way to gather these data.

- We have developed a first version of a microbenchmark-based approach to obtain the compute and memory hierarchy information on NVIDIA GPUs (https://github.com/caps-tum/mt4g).

Tasks/Goals:

- The goal of this work is to extend the existing approach, which works for NVIDIA GPUs, to AMD GPUs (or to come up with your own approach).

- As part of this work, you will have to make sure the architectural differences between the vendors are covered.

- (optional) Make sure your data can get uploaded to sys-sage (i.e. update the existing data parser), so that the users can have a view of the whole heterogeneous node.

- For an MA, the work will also include comparing the obtained information about AMD and NVIDIA GPUs, i.e., providing an analysis of the architectural differences between the GPU vendors.
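Microbenchmarks that probe cache and memory hierarchies commonly rely on pointer chasing: each load's address depends on the previous load's result, so the measured time per step approximates the load latency, and latency jumps as the working set grows reveal capacity boundaries. Whether mt4g builds its chains exactly this way is not claimed here. The sketch below only constructs such a chain on the host (a random single-cycle permutation via Sattolo's algorithm, so hardware prefetchers cannot follow it easily); on a GPU, the chain would be copied to device memory and traversed inside a timed kernel. The function names and parameters are illustrative.

```python
import random


def pointer_chase_chain(n_slots: int, stride: int = 1, seed: int = 0) -> list:
    """Build a pointer-chasing array in which chain[i] is the index to load after i.

    Sattolo's algorithm produces a random permutation that is a single cycle, so chasing
    from any slot visits all n_slots slots before returning. Slots are `stride` elements
    apart, e.g. one per cache line.
    """
    rng = random.Random(seed)
    succ = list(range(n_slots))
    for i in range(n_slots - 1, 0, -1):
        j = rng.randrange(i)  # 0 <= j < i; drawing from [0, i] instead would give Fisher-Yates
        succ[i], succ[j] = succ[j], succ[i]
    chain = [0] * (n_slots * stride)
    for i in range(n_slots):
        chain[i * stride] = succ[i] * stride
    return chain


def cycle_length(chain: list, start: int = 0) -> int:
    """Number of dependent loads until the chase returns to `start`."""
    idx, steps = chain[start], 1
    while idx != start:
        idx, steps = chain[idx], steps + 1
    return steps


chain = pointer_chase_chain(n_slots=1024, stride=16)
print(cycle_length(chain))  # 1024
```

Since the chase always covers every slot, the working-set size is simply n_slots * stride elements, which is the variable swept when searching for cache capacity boundaries.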

Contact:

In case of interest, please contact Stepan Vanecek (stepan.vanecek@tum.de) at the Chair for Computer Architecture and Parallel Systems (Prof. Schulz).

Updated on 6.6.2024 (21)

Various MPI-Related Topics

Please Note: MPI is a high-performance programming model and communication library designed for HPC applications. It is designed and standardised by the members of the MPI Forum, which includes various research, academic, and industrial institutions. The current chair of the MPI Forum is Prof. Dr. Martin Schulz. The following topics are all available as Master's Thesis and Guided Research. They will be advised and supervised by Prof. Dr. Martin Schulz himself, with help from researchers of the chair. If you are very familiar with MPI and parallel programming, please don't hesitate to send an email to Prof. Dr. Martin Schulz. These topics are mostly related to current research and active discussions in the MPI Forum, which are subject to standardisation in the coming years. Your contributions in these topics may make you a contributor to the MPI Standard, and your implementation may become part of the Open MPI code base. Many of these topics require collaboration with other MPI research bodies, such as Lawrence Livermore National Laboratory and the Innovative Computing Laboratory. Some of these topics may require you to attend MPI Forum meetings, which take place in the late afternoon (due to worldwide time synchronisation). Generally, these advanced topics may require more effort to understand and may be more time-consuming, but they are also more prestigious.

LAIK is a new programming abstraction developed at LRR-TUM

- Decouple data decomposition and computation, while hiding communication

- Applications work on index spaces

- Mapping of index spaces to nodes can be adaptive at runtime

- Goal: dynamic process management and fault tolerance

- Current status: works on standard MPI, but no dynamic support
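As an illustration of what "applications work on index spaces" and adaptive remapping can mean, here is a toy Python sketch that block-partitions a 1D index space across processes and computes which indices change owner when the process count changes (for example, after a process is removed). It does not use or reflect LAIK's actual API; all function names are hypothetical, and it assumes processes keep their IDs 0..p-1 across the change.

```python
def block_partition(n, nprocs):
    """Split the index space [0, n) into nprocs contiguous blocks.

    Returns a list of half-open (start, end) ranges, one per process.
    The first (n % nprocs) processes receive one extra index.
    """
    base, extra = divmod(n, nprocs)
    ranges = []
    start = 0
    for p in range(nprocs):
        size = base + (1 if p < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def owner_map(ranges):
    """Map each index to the process that owns it under a given partition."""
    owners = {}
    for p, (lo, hi) in enumerate(ranges):
        for i in range(lo, hi):
            owners[i] = p
    return owners


def moved_indices(n, old_nprocs, new_nprocs):
    """Indices whose owner changes when the process count changes.

    Assumption: process IDs are compared positionally, i.e. the
    processes that remain keep their IDs.
    """
    old = owner_map(block_partition(n, old_nprocs))
    new = owner_map(block_partition(n, new_nprocs))
    return [i for i in range(n) if old[i] != new[i]]


if __name__ == "__main__":
    print(block_partition(10, 3))
    print(moved_indices(10, 3, 2))
```

Only the moved indices would need to be communicated during a repartitioning, which is the kind of data movement a runtime can hide from the application.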

Task 1: Port LAIK to Elastic MPI

- New model developed locally that allows process additions and removal

- Should be very straightforward

Task 2: Port LAIK to ULFM

- Proposed MPI FT Standard for “shrinking” recovery, prototype available

- Requires refactoring of code and evaluation of ULFM

Task 3: Compare performance with direct implementations of the same models on MLEM

- Medical image reconstruction code

- Requires porting MLEM to both Elastic MPI and ULFM

Task 4: Comprehensive Evaluation

ULFM (User Level Failure Mitigation) is the current proposal for MPI fault tolerance

- Failures make communicators unusable

- Once detected, communicators can be “shrunk”

- Detection is active and synchronous by capturing error codes

- Shrinking is collective, typically after a global agreement

- Problem: can lead to deadlocks
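As a rough mental model of the shrinking semantics described above, the following toy Python simulation (single process, no MPI) shows what a shrink produces: the agreed-upon failed ranks are removed, and survivors are renumbered contiguously while keeping their relative order. The function names are hypothetical. In ULFM itself the corresponding operation is `MPIX_Comm_shrink`, and the agreement is a distributed protocol; modelling it as a local set union is an assumption made only for illustration.

```python
def agree_on_failures(local_views):
    """Simulated agreement: combine the failures each survivor detected locally."""
    agreed = set()
    for view in local_views:
        agreed |= set(view)
    return agreed


def shrink(size, failed):
    """Simulate shrinking a communicator of `size` ranks.

    Survivors keep their relative order and are renumbered 0..k-1, so the
    new communicator has no holes in its rank space.
    Returns a dict mapping old rank -> new rank for every survivor.
    """
    failed = set(failed)
    survivors = [r for r in range(size) if r not in failed]
    return {old: new for new, old in enumerate(survivors)}


if __name__ == "__main__":
    # Three survivors each noticed a different subset of the failures.
    failed = agree_on_failures([{1}, {3}, {1, 3}])
    print(shrink(5, failed))
```

Because every survivor must take part in the agreement before the new communicator exists, a process that never reaches the shrink call blocks the others, which is the deadlock risk noted above.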

Alternative idea

- Make shrinking lazy and thereby non-collective

- New, smaller communicators are created on the fly

Tasks:

- Formalize non-collective shrinking idea

- Propose API modifications to ULFM

- Implement prototype in Open MPI

- Evaluate performance

- Create proposal that can be discussed in the MPI forum

ULFM works on the classic MPI assumptions

- Complete communicator must be working

- No holes in the rank space are allowed

- Collectives always work on all processes

Alternative: break these assumptions

- A failure creates a communicator with a hole

- Point to point operations work as usual

- Collectives work (after acknowledgement) on reduced process set
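The "hole-y" alternative can be contrasted with shrinking in a similar toy model. The class below is purely hypothetical and is not a proposed API. A failed rank leaves a hole, and survivors keep their original rank numbers for point-to-point communication. A collective is only permitted after the failure has been acknowledged, and it then runs over the reduced process set.

```python
class HoleyComm:
    """Toy model of a communicator that keeps its rank space after failures.

    Hypothetical illustration only: failed ranks leave holes, surviving
    ranks keep their original numbers, and collectives run on the
    remaining process set once failures have been acknowledged.
    """

    def __init__(self, size):
        self.size = size
        self.failed = set()
        self.acknowledged = True

    def fail(self, rank):
        self.failed.add(rank)
        self.acknowledged = False  # a new failure must be acknowledged first

    def acknowledge(self):
        self.acknowledged = True

    def can_send_to(self, rank):
        # Point-to-point works as usual for any live rank; no renumbering.
        return 0 <= rank < self.size and rank not in self.failed

    def allreduce_sum(self, values):
        """Sum values[rank] over live ranks; values maps rank -> contribution."""
        if not self.acknowledged:
            raise RuntimeError("unacknowledged failure: collective not permitted")
        return sum(v for r, v in values.items() if r not in self.failed)


if __name__ == "__main__":
    comm = HoleyComm(4)
    comm.fail(2)
    comm.acknowledge()
    print(comm.can_send_to(3), comm.allreduce_sum({0: 1, 1: 2, 3: 4}))
```

Unlike the shrinking model, no new communicator is created and no rank is renumbered, so existing point-to-point code that addresses live ranks keeps working unchanged.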

Tasks:

- Formalize “hole-y” shrinking

- Propose a new API

- Implement prototype in Open MPI

- Evaluate performance

- Create proposal that can be discussed in the MPI Forum

With MPI 3.0, MPI added a second tools interface (besides the PMPI profiling interface): MPI_T

- Access to internal variables

- Query, read, write

- Performance and configuration information

- Missing: event information using callbacks

- New proposal in the MPI Forum (driven by RWTH Aachen)

- Add event support to MPI_T

- Proposal is rather complete

Tasks:

- Implement prototype in either Open MPI or MVAPICH

- Identify a series of events that are of interest

- Message queuing, memory allocation, transient faults, …

- Implement events for these through MPI_T

- Develop tool using MPI_T to write events into a common trace format

- Performance evaluation

Possible collaboration with RWTH Aachen

PMIx is a proposed resource management layer for runtimes (for Exascale)

- Enables MPI runtime to communicate with resource managers

- Emerged from previous PMI efforts as well as from the Open MPI community

- Under active development; a prototype is available in Open MPI

Tasks:

- Implement PMIx on top of MPICH or MVAPICH

- Integrate PMIx into SLURM

- Evaluate implementation and compare to Open MPI implementation

- Assess and possibly extend interfaces for tools

- Query process sets

MPI was originally intended as runtime support, not as an end-user API

- Several other programming models use it that way

- However, it is often not the first choice for performance reasons

- Especially task/actor based models require more asynchrony

Question: can more asynchronous models be added to MPI?

- Example: active messages
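Active messages differ from MPI's two-sided send/receive because the message itself names a handler to run at the receiver. The receiver does not post a matching receive. A toy single-process Python model illustrates the dispatch idea. It has hypothetical class and method names and no networking, and it does not represent how such an extension would look in MPI.

```python
from collections import deque


class ActiveMessageEndpoint:
    """Toy, single-process model of active-message dispatch (hypothetical names).

    A message names a handler registered at the receiver; when the endpoint
    makes progress, the handler runs with the payload. No matching receive
    is posted by the application.
    """

    def __init__(self):
        self.handlers = {}
        self.inbox = deque()

    def register(self, handler_id, fn):
        self.handlers[handler_id] = fn

    def deliver(self, handler_id, payload):
        # In a real system the message would arrive over the network.
        self.inbox.append((handler_id, payload))

    def progress(self):
        """Run handlers for all pending messages; return how many ran."""
        count = 0
        while self.inbox:
            handler_id, payload = self.inbox.popleft()
            self.handlers[handler_id](payload)
            count += 1
        return count


if __name__ == "__main__":
    ep = ActiveMessageEndpoint()
    ep.register("print", print)
    ep.deliver("print", "hello from a remote task")
    ep.progress()
```

Who drives `progress()` (the application, a helper thread, or the network hardware) is exactly the kind of design question an MPI extension for task- or actor-based models would have to settle.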

Tasks:

- Understand communication modes in an asynchronous model

- Charm++: actor based (UIUC)

- Legion: task based (Stanford, LANL)

- Propose extensions to MPI that capture this model better

- Implement prototype in Open MPI or MVAPICH

- Evaluation and Documentation

Possible collaboration with LLNL and/or BSC

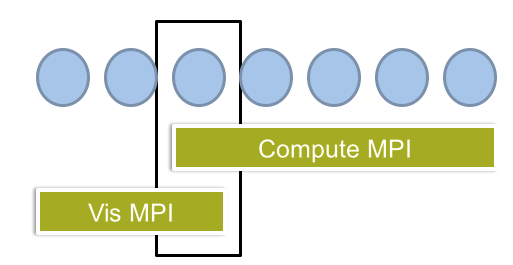

MPI can and should be used for more than computation

- Could be runtime system for any communication

- Example: traffic to visualization / desktops

Problem:

- Different network requirements and layers

- May require different MPI implementations

- A common protocol is unlikely to be accepted

Idea: can we use a bridge node with two MPIs linked to it?

- The user should see only two communicators, but the same API

Tasks:

- Implement this concept coupling two MPIs

- Open MPI on compute cluster and TCP MPICH to desktop

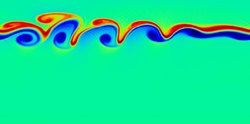

- Demonstrate the approach using online visualization streaming to the front-end

- Document and provide evaluation

- Warning: likely requires good understanding of linkers and loaders

Field-Programmable Gate Arrays

Field Programmable Gate Arrays (FPGAs) are considered to be the next generation of accelerators. Their advantages range from improved energy efficiency for machine learning to faster routing decisions in network controllers. If you are interested in one of the following topics, please send your CV and transcript of records to the specified email address.

Our chair offers various topics available in this area:

- Machine Learning: Your task will be to implement different ML algorithms on FPGAs; our main focus is data distillation. (dirk.stober@tum.de)

- Open-Source EDA tools: If you are interested in open-source EDA tools, especially High-Level Synthesis, you can explore the available tools. (dirk.stober@tum.de)

- Memory on FPGA: Exploration of memory on FPGAs and development of profiling tools for AXI interconnects. (dirk.stober@tum.de)

- Quantum Computing: Your task will be to explore architectures that harness traditional computer architecture to control quantum operations and flows. Currently, we focus on the control of superconducting qubits and neutral atoms. (xiaorang.guo@tum.de)

- Direct network operations: Here, FPGAs are wired closer to the networking hardware itself, which allows them to bypass the network stack that regular CPU-style communication would be exposed to. Your task would be to investigate FPGAs that can interact with the network more directly than CPU-based approaches. (martin.schreiber@tum.de)

- Linear algebra: Your task would be to explore strategies to accelerate existing linear algebra routines on FPGA systems, taking application requirements into account. (martin.schreiber@tum.de)

- Varying accuracy of computations: Current floating-point computations typically use 16-, 32-, or 64-bit precision. Your work would be on tailoring the accuracy of computations to what is really required. (martin.schreiber@tum.de)

- ODE solver: You would work on an automatic toolchain for solving ODEs originating from computational biology. (martin.schreiber@tum.de)
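For the "varying accuracy of computations" topic, the following small Python sketch makes the 16/32/64-bit granularity concrete. It uses only the standard library. It rounds one value to each IEEE 754 format and reports the rounding error. The helper `round_to` is hypothetical and is not part of any toolchain used at the chair.

```python
import struct

# IEEE 754 binary16 ("e"), binary32 ("f") and binary64 ("d"), little-endian.
FORMATS = {16: "<e", 32: "<f", 64: "<d"}


def round_to(x, bits):
    """Round a Python float to a 16-, 32- or 64-bit IEEE 754 value and back."""
    fmt = FORMATS[bits]
    return struct.unpack(fmt, struct.pack(fmt, x))[0]


for bits in (16, 32, 64):
    y = round_to(0.1, bits)
    print(f"{bits}-bit: {y!r}  abs. error {abs(y - 0.1):.3e}")
```

The error shrinks by several orders of magnitude with each step. Tailored-precision hardware would aim for the smallest format whose error the application can still tolerate, possibly between these fixed sizes.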

Background

The qubit control system bridges the quantum software stack and the physical backend. Typically, it includes a quantum control processor, the required memory, and a signal generator (with QICK, for example). So far, qubits are mainly controlled by radio-frequency waveforms, but current control technologies based on commercial AWGs (arbitrary waveform generators) or FPGA-based DACs/ADCs lack scalability and efficiency. With the newly released RFSoC FPGAs, we can develop control logic and waveform generators on a single board. However, building a new system that integrates all of these components demands additional effort.

Task

1. Study the current system architecture of qubit control and identify which part/interface we need and can improve.

2. Optimize the current control processor in terms of frequency and ISA development.

3. Integrate the control processor with signal generators; additional memory design may be necessary.

As this task is complex, we will split this general focus into multiple sub-tasks. They can be adjusted to the student's education status (BSc/MSc) and level of expertise in the area.

Topics in this area may involve collaboration with Fraunhofer or MPQ.

Requirement

Experience in programming with VHDL/Verilog.

Contact:

In case of interest or any questions, please contact Xiaorang Guo (xiaorang.guo@tum.de) at the Chair for Computer Architecture and Parallel Systems (Prof. Schulz) and attach your CV & transcript of records.

Background: Neutral atoms show great promise as qubit candidates for fully controllable and scalable quantum computers. Yet, creating a two-dimensional, defect-free atomic array (sorting) within a short time frame remains a significant challenge. This thesis explores FPGA-based acceleration designs to reduce the time overhead of the sorting process and thereby release the full potential of quantum advantage.

Thesis Goal: The objective of this thesis is to design a sorting unit on an FPGA board using Verilog or High-Level Synthesis (HLS) to achieve significant acceleration when compared to CPU/GPU implementations. The design should prioritize low latency and high parallelization to enhance the overall efficiency of the sorting process.
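The following Python sketch handles only the simplest case of the sorting problem: compacting the atoms of a single 1D row into a contiguous target block. It is a hypothetical CPU reference for intuition. It is neither the thesis's intended design nor a full 2D rearrangement algorithm. It matches the k-th atom from the left to the k-th target site. In 1D, this choice minimizes the total travel distance and preserves the atoms' order, so no two atoms have to pass each other.

```python
def compaction_moves(occupied, target_start):
    """Plan moves that compact the atoms of one row into a contiguous block.

    occupied: site indices currently holding an atom (any order).
    target_start: first site of the defect-free target block; the block is
    assumed to have exactly one site per atom.
    Returns (source, destination) pairs, matching sorted atoms to sorted
    target sites, which is order-preserving and minimizes total distance.
    """
    atoms = sorted(occupied)
    return [(src, target_start + k) for k, src in enumerate(atoms)]


def total_distance(moves):
    """Sum of absolute displacements, a simple proxy for transport time."""
    return sum(abs(dst - src) for src, dst in moves)


if __name__ == "__main__":
    moves = compaction_moves([9, 1, 5, 4], target_start=3)
    print(moves, total_distance(moves))
```

The per-atom assignments are independent once the atoms are sorted, which hints at why a parallel hardware implementation can outperform a sequential CPU loop.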

Contact: Xiaorang Guo (xiaorang.guo@tum.de), Jonas Winklmann (jonas.winklmann@tum.de), Prof. Martin Schulz

Various Thesis Topics in Collaboration with Leibniz Supercomputing Centre

We have a variety of open topics. Please get in contact with Josef Weidendorfer or Amir Raoofy.

Description:

The communication framework employed within MPI runtime environments uses various communication modes for message transmission, such as fully asynchronous, eager, or synchronous. Switching between these modes can be represented by a piecewise linear model with a flexible number of pieces. However, while this modeling approach is suitable for remote communication across distinct nodes, it does not cover local communication, where processes use shared memory rather than network cards. In such cases, significantly different performance and slightly different behaviour are common because different communication protocols are used.
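The piecewise linear model can be written as T(m) = α_i + β_i · m, where m is the message size. Here i is the interval between protocol switch points that contains m. A minimal Python sketch follows. The class name is hypothetical, and the breakpoints and (α, β) values in the usage lines are placeholders, not measurements. By the convention chosen here, a size exactly at a breakpoint belongs to the upper segment.

```python
from bisect import bisect_right


class PiecewiseLinearModel:
    """Message transfer time T(m) = alpha_i + beta_i * m on segment i.

    breakpoints: strictly increasing message sizes (bytes) where the protocol
    changes, e.g. an eager/rendezvous threshold.
    segments: one (alpha, beta) pair per interval, so
    len(segments) == len(breakpoints) + 1.
    """

    def __init__(self, breakpoints, segments):
        if len(segments) != len(breakpoints) + 1:
            raise ValueError("need exactly one segment per interval")
        if any(b2 <= b1 for b1, b2 in zip(breakpoints, breakpoints[1:])):
            raise ValueError("breakpoints must be strictly increasing")
        self.breakpoints = list(breakpoints)
        self.segments = list(segments)

    def predict(self, size):
        # Index of the interval containing `size`; a size equal to a
        # breakpoint falls into the segment above it.
        i = bisect_right(self.breakpoints, size)
        alpha, beta = self.segments[i]
        return alpha + beta * size


if __name__ == "__main__":
    # Placeholder parameters: alpha in microseconds, beta in microseconds/byte.
    model = PiecewiseLinearModel([4096, 65536], [(0.5, 0.001), (2.0, 0.0005), (8.0, 0.0002)])
    for size in (64, 8192, 1 << 20):
        print(size, model.predict(size))
```

For shared-memory communication, the (α, β) pairs would be fitted separately per segment from benchmark data. The number of segments would stay a free parameter, as the description suggests.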

This open thesis gives the students the opportunity to employ modeling and simulation methodologies to analyze, anticipate, and enhance the efficiency of MPI communication within shared memory.

Tasks:

- Literature review: Conduct a literature review on high-performance computing (HPC) simulators, particularly those focused on studying the performance of MPI communication on complex platforms, and identify the most suitable simulator based on this review.

- Communication Analysis: Analyze MPI communication in shared memory architectures using benchmarking tools and performance profiling techniques.

- Model Development: Develop mathematical models to represent the performance characteristics of MPI shared memory communication.

- Validation and Evaluation: Validate the proposed models through simulation experiments and empirical studies on HPC clusters with shared memory configurations.

Requirements:

- Good background in parallel computing and HPC systems.

- Proficiency in C/C++ programming languages.

- Experience with parallel computing frameworks (MPI).

Contact:

ehab.saleh@lrz.de

Benchmarking of (Industrial-) IoT & Message-Oriented Middleware

DDS (Data Distribution Service) is a message-oriented middleware standard that is being evaluated at the chair. We develop and maintain DDS-Perf, a cross-vendor benchmarking tool. As part of this work, several open theses regarding DDS and/or benchmarking in general are currently available. This work is part of an industry cooperation with Siemens.

Please see the following page for currently open positions: here.

Note: If you are conducting industry or academic research on DDS and are interested in collaborations, please check the open positions above or contact Vincent Bode directly.

Applied mathematics & high-performance computing

There are various topics available in the area bridging applied mathematics and high-performance computing. Please note that this will be supervised externally by Prof. Dr. Martin Schreiber (a former member of this chair, now at Université Grenoble Alpes).

This is just a selection of some topics to give some inspiration:

(MA=Master in Math/CS, CSE=Comput. Sc. and Engin.)

- HPC tools:

  - Automated Application Performance Characteristics Extraction

  - Portable performance assessment for programs with flat performance profile, BA, MA, CSE

- Projects targeting weather (and climate) forecasting:

  - Implementation and performance assessment of ML-SDC/PFASST in OpenIFS (collaboration with the European Centre for Medium-Range Weather Forecasts), CSE, MA

  - Efficient realization of fast Associated Legendre transformations on GPUs (collaboration with the European Centre for Medium-Range Weather Forecasts), CSE, MA

  - Fast exponential and implicit time integration, BA, MA, CSE

  - MPI parallelization for the SWEET research software, MA, CSE

  - Semi-Lagrangian methods with Parareal, CSE, MA

  - Non-interpolating Semi-Lagrangian Schemes, CSE, MA

  - Time-splitting methods for exponential integrators, CSE, MA

  - Machine learning for non-linear time integration, CSE, MA

  - Exponential integrators and higher-order Semi-Lagrangian methods

- Ocean simulations:

  - Porting the NEMO ocean simulation framework to GPUs with a source-to-source compiler

  - Porting the Croco ocean simulation framework to GPUs with a source-to-source compiler

- Health science project: Biological parameter optimization

  - Extending a domain-specific language with time integration methods

  - Performance assessment and improvements for different hardware backends (GPUs / FPGAs / CPUs)

If you're interested in any of these projects or are looking for projects in this area, please drop me an email for further information.

AI or Deep Learning Related Topics

The thesis details can be found here.

Requirement:

- Hands-on experience with deep learning, e.g., from related courses, projects, or Hiwi positions.

- Interest in AI applications on different edge devices.

- Familiarity with Python frameworks such as PyTorch.

- Familiarity with system profiling tools such as perf.

Contact: dai.liu@tum.de

Background:

Many projects and research efforts face problems when training on huge amounts of data. Dataset distillation can significantly reduce the amount of training data while aiming to achieve the same training performance. However, implementing dataset distillation on edge devices such as the Jetson Nano or Jetson Xavier without a GPU poses challenges. In this thesis, we aim to implement dataset distillation on memory-constrained edge devices.

Requirement:

- Hands-on experience with deep learning, e.g., from related courses, projects, or Hiwi positions.

- Interest in dataset compression and openness to new, advanced research.

- Familiarity with Python frameworks such as PyTorch or Keras.

Details will be explained further on request; please contact dai.liu@tum.de.

If you are interested in the following topics and would like to learn how to implement efficient AI, or how to implement AI on different hardware setups, such as:

- DL Application on Heterogeneous Systems

- Network Compression

- ML and Architecture

- AI for Quantum

- AI on Hardware (e.g. restricted edge devices, Cerebras)

Please feel free to contact dai.liu@tum.de for MA, BA, GR.