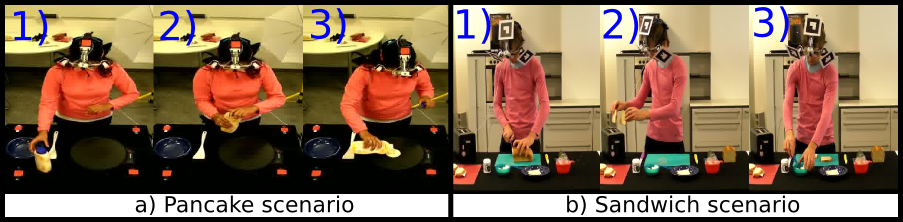

Cooking data set

Welcome to our cooking data sets, which explore more realistic scenarios such as making pancakes or making sandwiches. In these scenarios, several goal-directed movements can be observed, of the kind typically perceived in such natural environments.

If you use this data set, we would be very grateful if you could cite one of our papers:

If you are using the external cameras in combination with the gaze cameras, please cite the paper:

"Added Value of Gaze-Exploiting Semantic Representation to Allow Robots Inferring Human Behaviors". Karinne Ramirez-Amaro, Humera Noor Minhas, Michael Zehetleitner, Michael Beetz, Gordon Cheng. ACM Transactions on Interactive Intelligent Systems (TiiS), Volume 7, Issue 1, March 2017, Article No. 5.

If you are using the three external cameras, please cite the paper:

"Enhancing Human Action Recognition through Spatio-temporal Feature Learning and Semantic Rules". Karinne Ramirez-Amaro, Eun-Sol Kim, Jiseob Kim, Byoung-Tak Zhang, Michael Beetz, Gordon Cheng. 13th IEEE-RAS International Conference on Humanoid Robots, October 2013.

If you are using one external camera, please cite the paper:

"Understanding Human Activities from Observation via Semantic Reasoning for Humanoid Robots". Karinne Ramirez-Amaro, Michael Beetz, Gordon Cheng. 2014 IROS Workshop on AI and Robotics, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2014), 14-18 Sept. 2014, Chicago, Illinois, USA.

All details and the data are available for images and videos.

Acknowledgments

The presented data set was obtained in collaboration with:

Dr. Humera Noor Minhas PD