Career and Jobs

Student Projects & Jobs

Multifingered Robot Hand Simulation and Control

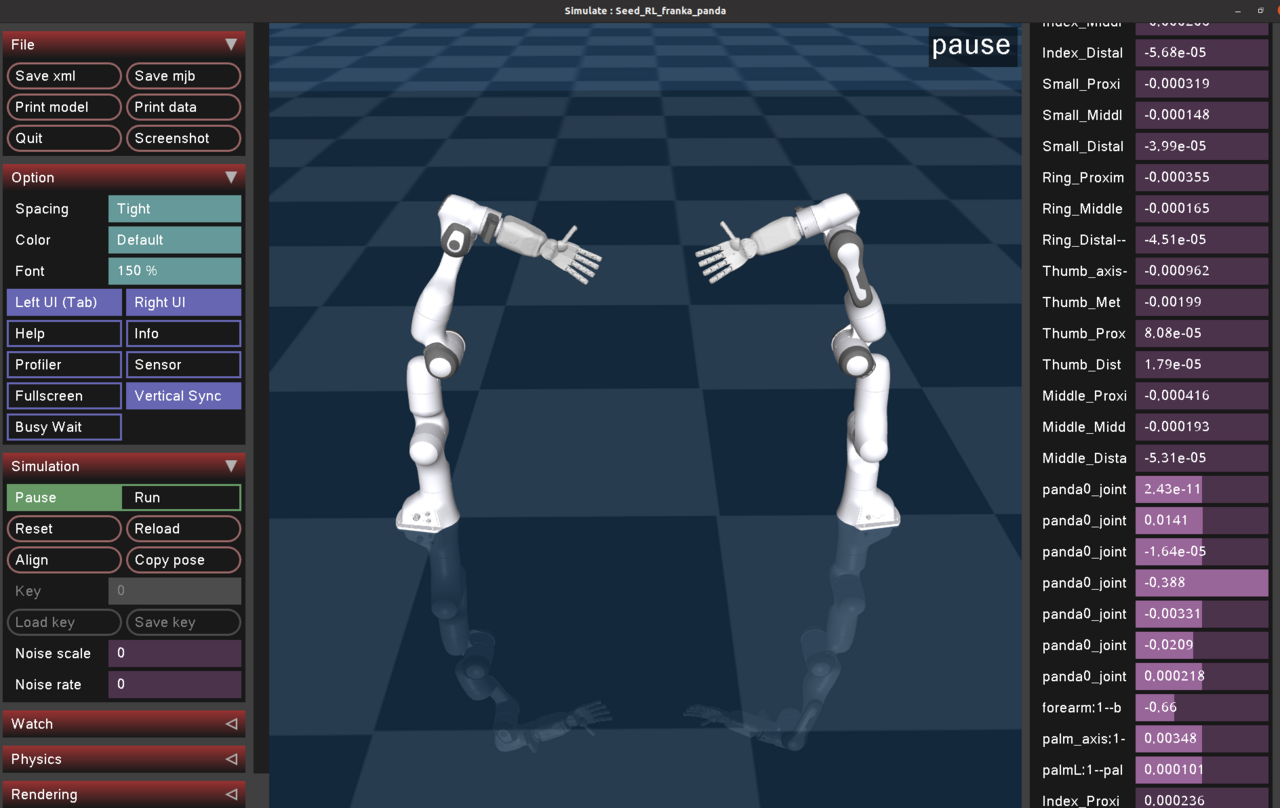

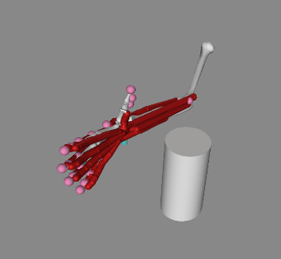

Achieving human-like dexterity in hand manipulation is one of the core aims in robotics. Therefore, the development and control of robot hands has become one of the main research topics in recent years. To expedite progress in robot hand manipulation, researchers have benefited from simulated environments, mainly because building and controlling real-world robot hands is an arduous task.

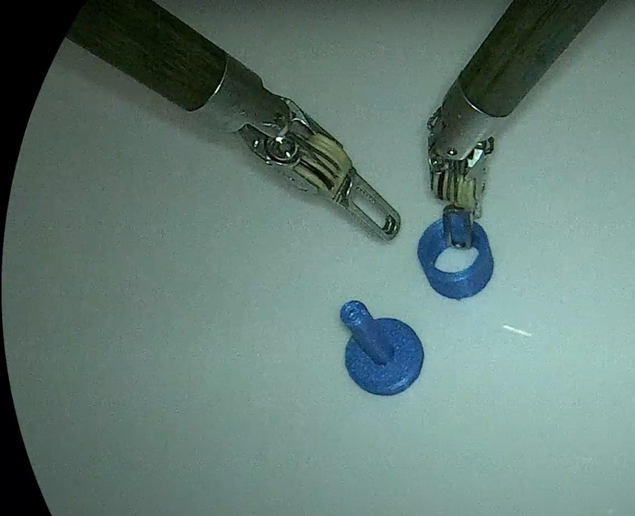

Following this line of thought, and as part of MIRMI's aims, we would like to analyze the capabilities of a well-known robotics simulation environment, namely MuJoCo. Specifically, we would like to test its capabilities in simulating robot hand motions and dynamic interactions with objects. For this, we plan to simulate different robot hand models, starting with the Seed Robotics RH8D hand. The simulation results will be compared with real-world scenarios using an actual RH8D hand, which is available at our institute.

Type: Forschungspraxis, Student Project

Requirements for Students

- Knowledge of:

  - Basic concepts in robotics

  - Control systems

  - Basic modeling of physical systems

- Programming skills:

  - Python or C++

- Familiarity with Ubuntu

Objectives of Work

- Understand MuJoCo’s environment and functionality

- Understand the provided scripts and adjust them to the testing requirements

- Test MuJoCo’s capabilities extensively in different manipulation scenarios

- Compare simulated results with real-life scenarios and adjust the simulation accordingly

What can you expect from us?

- An environment where your ideas will be welcome and discussed in detail

- Freedom with your work schedule

- Access to our laboratories, machines and materials

- A suitable working space

- The opportunity to get to know our research directions in the field of dexterous manipulation with robot hands

Contact:

If you are interested in the topic and want to know more, feel free to contact us:

Diego Hidalgo (diego.hidalgo-carvajal@tum.de)

Chair of Robotics Science and Systems Intelligence, Munich Institute of Robotics and Machine Intelligence (MIRMI)

Human-Robot Interaction

Research Internship + Master Thesis (2 offers)

Research description

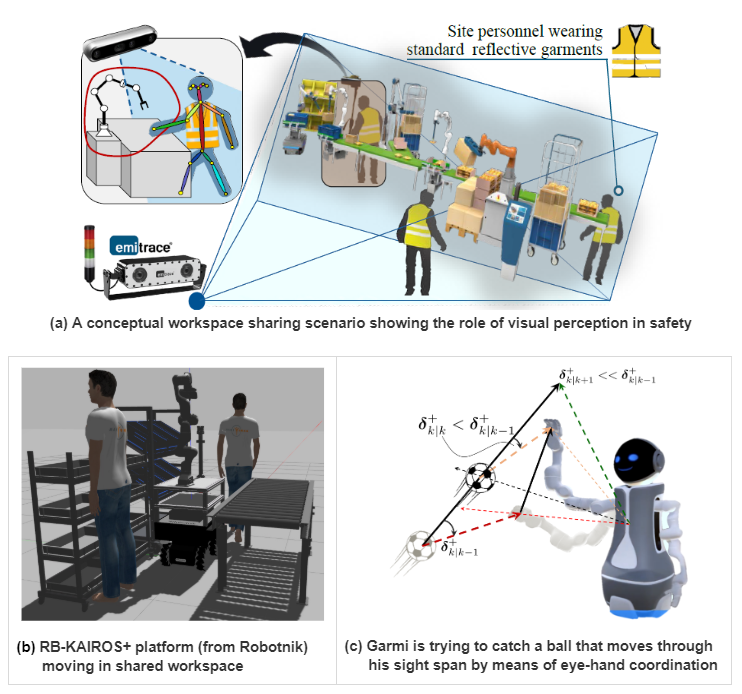

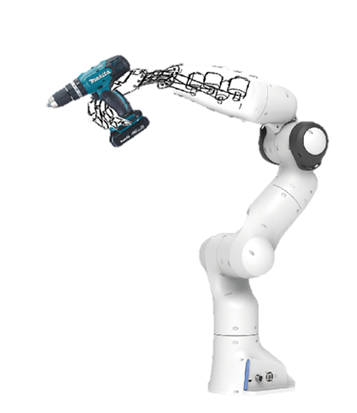

A critical factor in High-Mix Low-Volume (HMLV) manufacturing is the precise flow of material, together with the ability to manufacture unique, more complex, tailored products of specified quality at maximum efficiency while meeting safety requirements. Although very promising as a flexible component in intralogistics chains, robotics has not yet found its way into agile HMLV production. Especially for operation alongside humans, current robots lack the required high degree of flexibility, capability, cost-effectiveness and, above all, safety certification. Robotic solutions must meet many objectives in terms of manipulation efficiency and human-robot co-production, including the following:

- Risk-aware task scheduling and safe motion planning

- Dynamic whole-body control/manipulation capabilities

- Autonomous operation with human-like performance

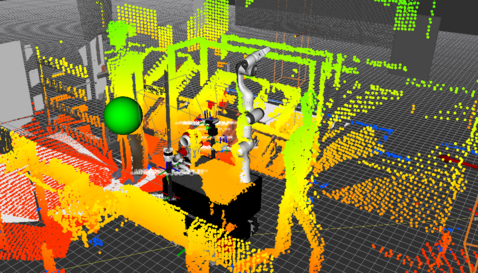

In future collaborative manufacturing workcells, robots are expected to work alongside humans as coworkers, combining their individual competences in one team to complete different tasks. To develop and implement such collaborative applications, human safety is the top priority: avoiding undesired robot-to-human collisions and coordinating intentional contact interactions is a key requirement. For this, the location of humans, ideally of their limbs, as well as their speed of movement must be reliably known in real time. Locating a human coworker collaborating with robots in dynamic, largely unpredictable environments, and ensuring their safety, usually relies on exteroceptive sensors. Specifically, state-of-the-art vision-based solutions for safe physical human-robot interaction (pHRI) rely at least on a measure of the distance between robot and human.

Possible topics to be addressed within the scope of the offered internship+thesis:

- Sensor fusion techniques for workspace monitoring and collaborative operation

- Continuous hand-eye calibration for collaborative manipulation

- Human skeleton tracking and pose estimation for mobile manipulation systems
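Because the description above requires the human-robot distance and its rate of change to be known reliably in real time, and the topics center on sensor fusion and Kalman filtering, here is a minimal sketch of what fusing two distance measurements could look like. It assumes a constant-velocity model for the separation distance and two hypothetical vision sensors with known, independent Gaussian noise. The class name `DistanceKF` and all noise values are illustrative assumptions, not part of an existing MIRMI pipeline.

```python
import numpy as np


class DistanceKF:
    """Constant-velocity Kalman filter for the human-robot distance d and its rate d'.

    State x = [d, d']. Process model: white acceleration noise with std accel_std
    (an assumption chosen for illustration).
    """

    def __init__(self, dt, accel_std=0.1):
        self.F = np.array([[1.0, dt], [0.0, 1.0]])
        self.Q = accel_std ** 2 * np.array([[dt ** 4 / 4, dt ** 3 / 2],
                                            [dt ** 3 / 2, dt ** 2]])
        self.H = np.array([[1.0, 0.0]])  # each sensor measures the distance only
        self.x = np.zeros(2)
        self.P = np.eye(2) * 10.0        # large initial uncertainty

    def predict(self):
        """Propagate state and covariance one time step."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q

    def update(self, z, meas_std):
        """Fuse one scalar distance measurement with standard deviation meas_std."""
        R = np.array([[meas_std ** 2]])
        innovation = np.array([z]) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(2) - K @ self.H) @ self.P
```

Calling `update` once per sensor in each cycle is the simplest, sequential form of fusion. A real workcell would additionally need time synchronization and extrinsic (hand-eye) calibration between the sensors.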

Prerequisites:

- Master-level studies in Electrical Engineering, Electronics, or Computer Science

- Experience with OpenCV, Kalman filtering, and sensor fusion techniques

- Practical experience with ROS (previous projects with the Franka Emika robot arm are a plus)

- Excellent C++ programming skills

- Proficiency with Matlab/Simulink

- Ability to work in a well-structured and organized manner

Application Deadline: 15.04.2023

Contact:

- Mazin Hamad, M.Sc. (mazin.hamad@tum.de)

- Dr. Saeed Abdolshah (saeed.abdolshah@tum.de)

Chair of Robotics Science and Systems Intelligence, Munich Institute of Robotics and Machine Intelligence (MIRMI)

Robot Control

The objective of this 6-month master's thesis is to develop a novel framework for calibrating the joint torque sensors of serial manipulators. The (re-)calibration of joint-side torque sensors is crucial for safety, particularly as robots and humans increasingly operate in shared environments. Recalibrating an assembled robotic manipulator requires precise knowledge of its kinematic and dynamic model, a problem already addressed in previous research on inertial parameter identification of serial manipulators. The final goal is to introduce an "off-calibration" score that indicates the current calibration status of the arm and whether recalibration is needed. If recalibration is necessary, the novel framework can then be used to update the sensor parameters.
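The listing leaves the calibration model open. As a minimal sketch only, assume that each joint torque sensor reading follows a linear gain/offset model with respect to the torque predicted by the identified dynamic model (for example, from the existing inertial identification framework). The gain and offset can then be estimated by least squares, and a hypothetical off-calibration score can be defined as the relative RMS mismatch between measured and predicted torque. The function names and the score definition are illustrative assumptions, not the intended framework.

```python
import numpy as np


def fit_gain_offset(tau_model, tau_meas):
    """Least-squares fit of tau_meas ≈ k * tau_model + b for one joint (assumed linear sensor model)."""
    A = np.column_stack([tau_model, np.ones_like(tau_model)])
    (k, b), *_ = np.linalg.lstsq(A, tau_meas, rcond=None)
    return float(k), float(b)


def off_calibration_score(tau_model, tau_meas):
    """Hypothetical score: RMS torque mismatch relative to the RMS model-predicted torque."""
    err = tau_meas - tau_model
    return float(np.sqrt(np.mean(err ** 2)) / np.sqrt(np.mean(tau_model ** 2)))


def recalibrate(tau_meas, k, b):
    """Invert the fitted linear sensor model to correct raw torque readings."""
    return (tau_meas - b) / k
```

In practice, the predicted torque would come from the identified model evaluated along excitation trajectories, and the score threshold that triggers recalibration would still have to be chosen experimentally.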

The main tasks are:

- Understanding the existing inertial identification framework;

- Literature research on calibration frameworks for robotic manipulators;

- Developing and prototyping the novel framework in simulation;

- Designing scenarios to test the overall system;

- Implementation on a real robotic system.

Pre-requisites:

- Experience with prototyping in Matlab, Python and C++;

- Basics of Manipulator Kinematics and Dynamics;

- Basic working knowledge of Manipulator Control;

Helpful but not required:

- Knowledge of inertial parameter identification, sensor calibration and running simple controllers on robots

For more information, please contact:

Mario Tröbinger (mario.troebinger@tum.de)

Project type: Forschungspraxis/Ingenieurspraxis (possible thesis extension)

New application deadline: March 31, 2024

Efficient and fast manipulation is still a major challenge in the robotics community. Traditionally, generating fast motion requires scaling up actuator power. Recently, however, more attention has been paid to additional means of storing and releasing mechanical energy, which keep the baseline actuator power requirement lower (just enough to satisfy general manipulation requirements). To enable a “fast mode” of manipulation, energy can be injected from mechanical elements present in the system. This is useful for tasks such as throwing or other explosive maneuvers.

The Bi-Stiffness Actuation (BSA) concept [1] is a physical realization of this idea. It uses a switch-and-hold mechanism for full link decoupling while simultaneously braking the spring element (allowing controlled energy storage and release). Changing modes within the actuator (clutch engagement and disengagement) leads to an impulsive switch of the dynamics.

Students are expected to study and understand the physical and mathematical representations of the developed concepts, and to apply and deepen their understanding of multi-DoF manipulator systems, their control, and their classification. Using state-of-the-art simulation frameworks, they will then develop a codebase that represents the concept. Since the work will be foundational for further research, the student is expected to follow best coding practices and document the work.

The student is expected to work on the simulation of the BSA concept. For simplicity, one of the BSA modes can be modeled as a series elastic actuator (SEA). The first step is to modify the rigid robot representation so that it includes joint elasticity (modeled as SEA). Implementation details can be found in [2] and the project code in [3] (it is not necessary to use the same framework for simulation and dynamics). The dynamics should then be extended to handle the other BSA modes as well as the impulsive switches between them.
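To make the SEA step concrete, the following sketch simulates a single elastic joint using the standard reduced elastic-joint model, in which link and motor are separate inertias coupled by a linear spring. It is not the BSA model from [1]: it includes neither the clutch/brake modes nor impulsive switches. The parameter values and names (`ElasticJoint`, `step`, `energy`) are hypothetical. Pre-loading the spring and releasing it shows the energy-storage idea behind throwing, since elastic energy is converted into kinetic energy.

```python
import math
from dataclasses import dataclass


@dataclass
class ElasticJoint:
    """One link driven by a motor through a linear series spring (SEA-like joint).

    Link:  M * q''     + mgl * sin(q)      = K * (theta - q)
    Motor: B * theta'' + K * (theta - q)   = tau
    All parameter values below are illustrative assumptions.
    """
    M: float = 0.5    # link inertia [kg m^2]
    B: float = 0.1    # motor inertia reflected through the gearbox [kg m^2]
    K: float = 50.0   # joint stiffness [Nm/rad]
    mgl: float = 0.0  # gravity coefficient m*g*l [Nm]; 0 means motion in a horizontal plane

    def step(self, state, tau, dt):
        """Advance (q, dq, theta, dtheta) by one semi-implicit (symplectic) Euler step."""
        q, dq, th, dth = state
        spring = self.K * (th - q)                       # torque transmitted by the spring
        ddq = (spring - self.mgl * math.sin(q)) / self.M  # link acceleration
        ddth = (tau - spring) / self.B                    # motor acceleration
        dq += ddq * dt                                    # update velocities first ...
        dth += ddth * dt
        return (q + dq * dt, dq, th + dth * dt, dth)      # ... then positions with the new velocities

    def energy(self, state):
        """Kinetic + elastic + gravitational energy (q = 0 is the gravity rest position)."""
        q, dq, th, dth = state
        return (0.5 * self.M * dq ** 2 + 0.5 * self.B * dth ** 2
                + 0.5 * self.K * (th - q) ** 2 + self.mgl * (1.0 - math.cos(q)))
```

For example, starting from `(0.0, 0.0, 0.2, 0.0)` (spring deflected by 0.2 rad, everything at rest) with `tau=0`, the stored 1 J of elastic energy flows into the link and motor inertias while the total energy stays approximately constant. Semi-implicit Euler is used because it keeps the energy error bounded for this oscillatory system, whereas explicit Euler lets it grow.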

The result should be a usable codebase with a minimal reproducible example of a manipulator executing a throwing maneuver that exploits elastic elements and impulsive mode switches. The simulation will be verified against an existing Matlab implementation.

Requirements for candidates:

- Knowledge of Matlab, C++, and Python

- Working skills in the Ubuntu operating system

- Familiarity with ROS

- Robotics (forward and inverse dynamics and kinematics)

- English proficiency (C1), including reading academic papers

- Pluses:

  - Knowledge of working with Gazebo/MuJoCo

  - Familiarity with Git

  - Design patterns for coding

  - Familiarity with Docker

  - GoogleTest (or another testing framework)

Alternatively, the work can be divided between two students: one will work in MuJoCo or a similar simulator (to be decided), and the other will develop a GPU-accelerated simulation using Isaac Gym [4] or a similar engine.

[1] Ossadnik, Dennis, et al. "BSA-Bi-Stiffness Actuation for optimally exploiting intrinsic compliance and inertial coupling effects in elastic joint robots." 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022.

[2] Mengacci, R., Zambella, G., Grioli, G., Caporale, D., Catalano, M., & Bicchi, A. (2021). An Open-Source ROS-Gazebo Toolbox for Simulating Robots With Compliant Actuators. Frontiers in Robotics and AI, 8.

[3] ROS-Gazebo-compliant-actuators-plugin https://github.com/NMMI/ROS-Gazebo-compliant-actuators-plugin/tree/master

[4] NVIDIA Isaac Sim https://developer.nvidia.com/isaac-sim

To apply, send your CV and a short motivation letter to the supervisor (with the senior supervisor in cc).

Supervisor M.Sc. Vasilije Rakcevic

Senior Supervisor Dr.-Ing. Abdalla Swikir

Project type: Forschungspraxis/Ingenieurspraxis (possible thesis extension)

Background:

In this task, you will investigate the effects of packet loss on robotic control performance. Robotic control involves the use of computers to control the movements of robots. Packet loss occurs when data packets are lost or dropped during transmission over a network, which can have a significant impact on the performance of robotic control systems.

Possible Workpackages:

- Migrating the existing robot control software stack to QNX (a hard real-time operating system) to establish baseline performance.

- Simulating packet loss, establishing performance metrics, and implementing various packet loss mitigation measures.

- Experiments on physical robotic systems including Franka Emika robots and joints.
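A natural first step for the packet-loss work package is a loss model combined with a baseline mitigation. The sketch below assumes independent (Bernoulli) packet losses and uses a zero-order hold at the receiver (reusing the last received command) as the baseline mitigation. It does not model QNX, the fieldbus, or transmission delays, and the function names are hypothetical.

```python
import random


def transmit(commands, loss_prob, seed=0, initial=0.0):
    """Simulate a lossy link with a zero-order-hold receiver.

    Each packet is dropped independently with probability loss_prob (Bernoulli
    loss model, an assumption). When a packet is lost, the receiver keeps
    applying the last successfully received command.
    Returns the commands actually applied and the number of dropped packets.
    """
    rng = random.Random(seed)
    last = initial
    received, dropped = [], 0
    for cmd in commands:
        if rng.random() < loss_prob:
            dropped += 1  # packet lost: hold the previous value
        else:
            last = cmd
        received.append(last)
    return received, dropped


def rms_error(applied, sent):
    """Simple candidate performance metric: RMS difference between applied and sent commands."""
    return (sum((a - s) ** 2 for a, s in zip(applied, sent)) / len(sent)) ** 0.5
```

Bursty losses, which are common on real networks, could be modeled by replacing the Bernoulli draw with a two-state Gilbert-Elliott model. Other mitigation measures (e.g., extrapolating the last commands) can be compared against this hold-last-value baseline using the same metric.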

Prerequisites:

- C/C++

- Network Communication

- Fieldbus experience (preferred)

Contact:

Lingyun Chen

Project type: Forschungspraxis/Ingenieurspraxis

Background:

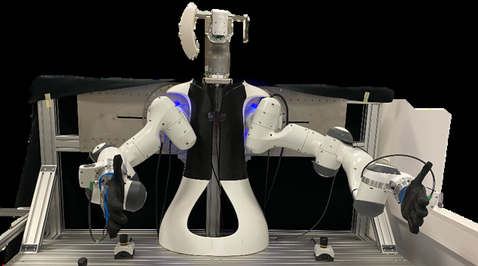

The targeted hand-arm system includes a Panda robot arm and a tendon-driven anthropomorphic hand. A control framework is required to interface with both the robot and the hand. The arm and the hand are controlled separately, with libfranka (a generic network interface) and an EtherCAT software stack (with Matlab Simulink), respectively. To enable sequential or further unified control of the arm and hand, the control framework should be able to handle real-time data exchange between libfranka and the EtherCAT fieldbus. The control framework should also provide APIs and telemetry functions for high-level control algorithms and visualization.

Possible Workpackages:

- Develop a real-time capable data exchange program between libfranka and the EtherCAT software stack.

- Basic forward and inverse kinematics solvers for obtaining Cartesian poses of the hand joints or the corresponding joint positions.

- Develop the APIs and telemetry functions for external communication and visualization.

Prerequisites:

- C++

- Matlab Simulink

- Fieldbus experience (EtherCAT preferred)

Contact:

Lingyun Chen

Type: Forschungspraxis, Master thesis

Motivation:

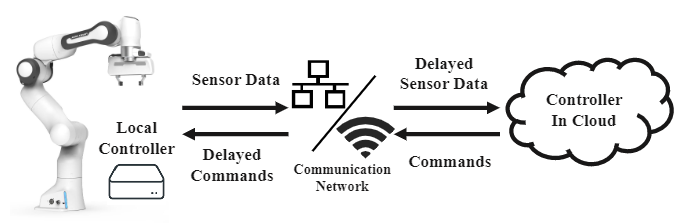

As robots become more and more intelligent, the algorithms behind them are becoming increasingly complex. Since these algorithms demand high computational power from the onboard robot controller, the robot's weight and energy consumption increase. A promising solution is to relocate the expensive computation to the cloud. However, because the communication channel is not passive, relocating the high-frequency controller causes instability of the robot. To passivate such a system over a communication network with variable time delay, we proposed a framework based on the Time Domain Passivity Approach (TDPA) [2].

However, the original TDPA method is very conservative, so the position-tracking performance is unsatisfactory. In this work, we would like to improve the performance of such a system.
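For orientation, the sketch below shows the basic one-port form of TDPA on which improved variants build. A passivity observer (PO) accumulates the energy flowing into a port from sampled force and velocity, and an impedance-type passivity controller (PC) adds variable damping whenever the observed energy would become negative. The sign convention (positive f·v means energy flowing into the port) and the function name are assumptions; this is a generic formulation, not the edge-cloud framework of [2].

```python
def tdpa_one_port(forces, velocities, dt):
    """Passivity observer (PO) + impedance-type passivity controller (PC) for one port.

    Energy flowing into the port counts as positive; the port is passive as long
    as the accumulated energy never becomes negative. Whenever a step would make
    it negative, the PC adds a damping force alpha * v that dissipates exactly
    the energy deficit.
    Returns the (possibly modified) forces, the observed energy after each step,
    and the damping alpha applied at each step.
    """
    energy = 0.0
    out_forces, energies, alphas = [], [], []
    for f, v in zip(forces, velocities):
        candidate = energy + f * v * dt  # PO: energy if f were applied unchanged
        alpha = 0.0
        if candidate < 0.0 and v != 0.0:
            alpha = -candidate / (dt * v * v)  # PC: damping that brings the energy back to 0
        f_out = f + alpha * v
        energy += f_out * v * dt
        out_forces.append(f_out)
        energies.append(energy)
        alphas.append(alpha)
    return out_forces, energies, alphas
```

For example, `tdpa_one_port([1.0, -3.0, 2.0], [1.0, 1.0, 1.0], dt=1.0)` returns forces `[1.0, -1.0, 2.0]`, energies `[1.0, 0.0, 2.0]`, and damping values `[0.0, 2.0, 0.0]`: in the second step, the PC dissipates exactly the 2 J deficit.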

Tasks:

- Literature research on related algorithms

- Implement one of the TDPA variants that reduce conservatism (e.g., [4], [5])

- Propose a new method, if possible

Prerequisites

- Interest in robot programming

- C++ programming skills

- Basic robot control knowledge

- Creative and independent thinker

Reference

[1] Schwarting W, Pierson A, Alonso-Mora J, et al. Social behavior for autonomous vehicles[J]. Proceedings of the National Academy of Sciences, 2019, 116(50): 24972-24978.

[2] X. Chen, H. Sadeghian, L. Chen, M. Tröbinger, A. Swikir, A. Naceri and S. Haddadin, “A Passivity-based Approach on Relocating High-Frequency Robot Controller to the Edge Cloud”, 2023 IEEE International Conference on Robotics and Automation (ICRA)

[3] Ryu J H, Artigas J, Preusche C. A passive bilateral control scheme for a teleoperator with time-varying communication delay[J]. Mechatronics, 2010, 20(7): 812-823.

[4] H. Singh, A. Jafari and J. -H. Ryu, "Enhancing the Force Transparency of Time Domain Passivity Approach: Observer-Based Gradient Controller," 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 2019, pp. 1583-1589, doi: 10.1109/ICRA.2019.8793902.

[5] Panzirsch M, Ryu J H, Ferre M. Reducing the conservatism of the time domain passivity approach through consideration of energy reflection in delayed coupled network systems[J]. Mechatronics, 2019, 58: 58-69.

Contact:

xiaoyu.chen@tum.de

hamid.sadeghian@tum.de

Telepresence

Project type: Forschungspraxis/Ingenieurspraxis

Background:

The targeted hand-arm system includes a Panda robot arm and a tendon-driven anthropomorphic hand. The traditional way of controlling the hand-arm system usually requires trajectory planning on a rather complex hierarchical structure. Motion mapping, which directly uses human experience or teaching to control the hand-arm system, can enable effective and intuitive control and also opens up future applications in telepresence.

Possible Workpackages:

- Interface for receiving and parsing messages from the real-time motion capture system (Mocap suit, Vicon or HTC Vive).

- Motion mapping algorithm for converting captured human motion into high-level control commands for the hand-arm system.

- Develop a digital twin of the human operator and the hand-arm system.

Prerequisites:

- C++

- Network Communication

- Robot Control

Contact:

Lingyun Chen

Type: Forschungspraxis, Master thesis

Motivation:

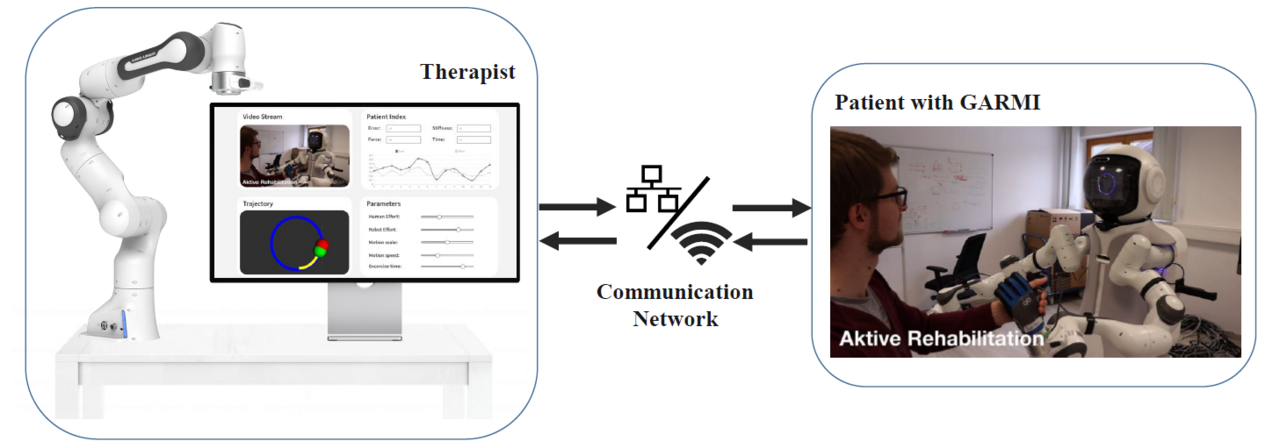

Robots play an important role in future rehabilitation because of their ability to repeat motions and their increasing reachability. The aim of this research is to provide a framework for active tele-rehabilitation of the upper extremity. It is assumed that the robot, the subject, and the remote therapist perform a collaborative exercise toward the same goal [2]. The exercise is displayed on a screen in front of the subject to bring them into the control loop of the local robot. The remote station is used to manage this scenario and to update the system parameters through an interactive tactile sense. Moreover, the motion of the robot can either be programmed by experts or learned from expert demonstrations. We expect remote teaching to be a key direction for future rehabilitation. A promising approach is Dynamical Systems (DS), which have recently emerged as a powerful tool for motion generation and task modeling, thanks to their stability properties, which guarantee convergence to an attractor point or a limit cycle, and because they lend themselves well to machine learning frameworks. In rehabilitation, exercises usually consist of repetitive motions, so Dynamic Movement Primitives (DMP) [2] are well suited to this scenario.

In this work, we will build the tele-rehabilitation system with GARMI, as illustrated in the figure.

Tasks:

- Literature research on related systems

- Implement a GUI on the therapist side

- Implement the trajectory learning using DMP

- Improve the robot controller

Prerequisites:

- Interest in robotics

- Good C++ programming skills

- Knowledge in ROS

- Creative and independent thinker

Reference:

[1] Chen, Xiao; Sadeghian, Hamid; Li, Yanan; Haddadin, Sami, “Tele-rehabilitation of Upper Extremity with GARMI Robot: Concept and Preliminary Results”, 22nd IFAC World Congress, 2023.

[2] Sharifi, Mojtaba, et al. "Cooperative modalities in robotic tele-rehabilitation using nonlinear bilateral impedance control." Control Engineering Practice 67 (2017): 52-63.

[3] S. F. Atashzar, M. Shahbazi, M. Tavakoli and R. V. Patel, "A new passivity-based control technique for safe patient-robot interaction in haptics-enabled rehabilitation systems," 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 2015, pp. 4556-4561, doi: 10.1109/IROS.2015.7354025.

Contact:

xiaoyu.chen@tum.de

hamid.sadeghian@tum.de

Type: Forschungspraxis, Master Thesis

Motivation:

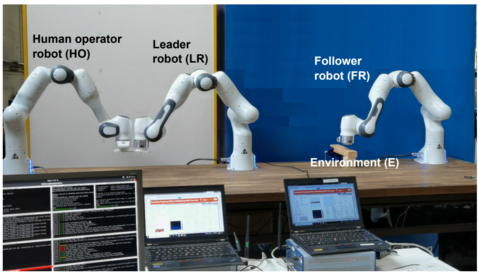

Since the spread of 5G communication technology, telepresence research has attracted more and more attention, a trend further accelerated by the COVID-19 pandemic. Telepresence has many application scenarios, such as telemedicine, where a doctor can diagnose a patient at a remote location. Another possibility is the factory of the future, where such a setup would also allow workers to work from home.

Various telepresence systems exist, based on different algorithms. The telepresence reference platform aims to provide a benchmark for these systems: with a standard platform, system performance can be compared across different communication networks, control algorithms, and environments.

Tasks:

- Literature research on telepresence systems

- Implementation of different teleoperation algorithms

- Compare the system performance under different conditions

Prerequisites:

- Interest in robot programming

- Excellent C++ programming skills

- Basic robot control knowledge

- Creative and independent thinker

Reference

[1] Chen X., Johannsmeier L., Sadeghian H., Shahriari E., Danneberg M., Nicklas A., Wu F., Fettweis G., Haddadin S. On the Communication Channel in Bilateral Teleoperation: An Experimental Study for Ethernet, WiFi, LTE and 5G, 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), in proceeding

[2] Bauer G, Pan Y J. Review of control methods for upper limb telerehabilitation with robotic exoskeletons[J]. IEEE Access, 2020, 8: 203382-203397.

[3] Balachandran R, Artigas J, Mehmood U, et al. Performance comparison of wave variable transformation and time domain passivity approaches for time-delayed teleoperation: Preliminary results[C]//2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2016: 410-417.

Contact

xiaoyu.chen@tum.de

hamid.sadeghian@tum.de

Type: Forschungspraxis, Master thesis

Motivation:

Learning by demonstration is a popular method for teaching robots reaching or repetitive motions. However, demonstrating the motion offline increases robot downtime, so online adaptation or reprogramming of the robot is required. Recent works have already demonstrated on-site tactile adaptation of skill motions. However, when experts cannot be on-site, or when the robot's workspace is not safe for humans, remote teaching is required. In addition, using a teacher robot arm can help the student robot easily distinguish interaction forces with the environment from those with the human.

Dynamical Systems (DS) have recently emerged as a powerful tool for motion generation and task modeling, thanks to their stability properties, which guarantee convergence to an attractor point or a limit cycle, and because they lend themselves well to machine learning frameworks. In rehabilitation, exercises usually consist of repetitive motions, so Dynamic Movement Primitives (DMP) [1] are well suited to this scenario.
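To illustrate the DMP idea, the following sketch implements a one-dimensional discrete DMP in the spirit of [1]: a critically damped spring-damper pulled toward the goal, plus a learned forcing term driven by a decaying phase variable. The forcing-term weights are fitted from a single demonstration with locally weighted regression. The gains, the basis-width heuristic, and the class name are assumptions. A rhythmic DMP (for periodic motions), force-profile encoding, and progressive automation from the task list are not covered.

```python
import numpy as np


class DiscreteDMP:
    """Minimal one-dimensional discrete DMP (transformation system + canonical system)."""

    def __init__(self, n_basis=20, alpha_z=25.0, alpha_x=4.0):
        self.alpha_z, self.beta_z = alpha_z, alpha_z / 4.0  # critically damped spring-damper
        self.alpha_x = alpha_x                              # decay rate of the phase x
        # Basis centres spread evenly in normalised time, mapped to x = exp(-alpha_x * t / tau)
        self.c = np.exp(-alpha_x * np.linspace(0.0, 1.0, n_basis))
        self.h = n_basis ** 1.5 / self.c / alpha_x          # heuristic basis widths (assumption)
        self.w = np.zeros(n_basis)
        self.y0, self.g, self.tau = 0.0, 1.0, 1.0

    def _forcing(self, x, g):
        """Normalised weighted sum of Gaussian basis functions, scaled by x and (g - y0)."""
        psi = np.exp(-self.h * (x - self.c) ** 2)
        return psi @ self.w / (psi.sum() + 1e-10) * x * (g - self.y0)

    def fit(self, y_demo, dt):
        """Learn forcing-term weights from one demonstration (locally weighted regression)."""
        y_demo = np.asarray(y_demo, dtype=float)
        self.y0, self.g = y_demo[0], y_demo[-1]
        self.tau = (len(y_demo) - 1) * dt
        yd = np.gradient(y_demo, dt)
        ydd = np.gradient(yd, dt)
        x = np.exp(-self.alpha_x * np.arange(len(y_demo)) * dt / self.tau)
        # Invert the transformation system: f = tau^2 y'' - alpha_z (beta_z (g - y) - tau y')
        f_target = self.tau ** 2 * ydd - self.alpha_z * (
            self.beta_z * (self.g - y_demo) - self.tau * yd)
        s = x * (self.g - self.y0)
        for i in range(len(self.w)):
            psi = np.exp(-self.h[i] * (x - self.c[i]) ** 2)
            self.w[i] = np.sum(s * psi * f_target) / (np.sum(s * s * psi) + 1e-10)
        return self

    def rollout(self, dt, duration=None, goal=None):
        """Integrate the DMP with explicit Euler; a new goal can be given for generalisation."""
        g = self.g if goal is None else goal
        steps = int(round((self.tau if duration is None else duration) / dt))
        y, z, x = self.y0, 0.0, 1.0
        traj = [y]
        for _ in range(steps):
            zdot = (self.alpha_z * (self.beta_z * (g - y) - z) + self._forcing(x, g)) / self.tau
            ydot = z / self.tau
            z += zdot * dt
            y += ydot * dt
            x += -self.alpha_x * x / self.tau * dt  # canonical system: tau x' = -alpha_x x
            traj.append(y)
        return np.array(traj)
```

Because the forcing term is scaled by `(g - y0)` and fades as the phase `x` decays, the rollout converges to the goal, and calling `rollout(dt, goal=...)` with a new goal reuses the learned motion shape for a different target.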

In this work, we will extend the state-of-the-art work to a tele-teaching framework.

Tasks:

- Literature research on remote teaching using tactile information

- Implement trajectory teaching with DMP

- Encode force profile using DMP

- Implement the progressive automation method to adapt the DMP online

- Extend the above work to a tele-teaching framework

Prerequisites

- Interest in robot programming

- Excellent C++ programming skills

- Basic robot control knowledge

- Creative and independent thinker

Reference

[1] Ijspeert A J, Nakanishi J, Hoffmann H, et al. Dynamical movement primitives: learning attractor models for motor behaviors[J]. Neural computation, 2013, 25(2): 328-373.

[2] Khoramshahi M, Billard A. A dynamical system approach to task-adaptation in physical human–robot interaction[J]. Autonomous Robots, 2019, 43(4): 927-946.

[3] Karacan K., Sadeghian H., Kirschner R. J., Haddadin S. Passivity-Based Skill Motion Learning in Stiffness-Adaptive Unified Force-Impedance Control, 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), in proceeding

[4] Dimeas F, Kastritsi T, Papageorgiou D, et al. Progressive automation of periodic movements[C]//Human-Friendly Robotics 2019: 12th International Workshop. Springer International Publishing, 2020: 58-72.

Contact

xiaoyu.chen@tum.de

hamid.sadeghian@tum.de

Human modeling

Forschungspraxis/Semester Thesis

Getting more insight into the dexterity and manipulability of the human hand facilitates the development of research fields such as robotic hands and prosthetics. Biomechanical musculoskeletal modeling, a rapidly growing and promising research topic, aims to capture the essential characteristics of the human hand mathematically. However, several challenges remain in creating an accurate subject-specific model of the human hand.

In this project, you will become familiar with the fundamental concepts of the musculoskeletal hand model and learn how to develop the model to analyze the experimental data.

Tasks:

● Summarizing existing state-of-the-art OpenSim hand-wrist models

● Developing an object-oriented software framework in Matlab using the OpenSim API for scaling, forward/inverse dynamics, etc.

● Developing interfaces for experimental data, such as surface EMG, and for other robotic toolboxes, e.g., for visualization

Prerequisites:

● Knowledge of rigid-body dynamics

● Basic knowledge of biomechanical modeling

● Matlab (C++ is a plus)

● Object-oriented programming

● Experience with OpenSim is a huge plus

Contact:

Junnan Li

junnan.li@tum.de

Deadline:

15.06.2023

Robot Learning

Intern / master’s thesis

Who we are

The TUM Munich Institute of Robotics and Machine Intelligence (MIRMI) is a globally visible interdisciplinary research centre for machine intelligence, which is the integration of robotics, artificial intelligence and perception. Its four central innovation sectors are the future of health, the future of work, the future of the environment, and the future of mobility. More than 60 professors from various TUM faculties cooperate within the framework of MIRMI.

The Robot Learning Lab at MIRMI is part of the chair of Robotics and System Intelligence (Lehrstuhl für Robotik und Systemintelligenz) led by Prof. Sami Haddadin.

What we do

We aim at developing novel methods to make robots capable of learning generalizable and shareable skills in the real world. Our research topics span robot manipulation, physics-informed machine learning, optimal control, and reinforcement learning. Our main research projects at the moment are aligned with the innovation sector of the Future of Work. We focus on developing novel methods and solutions to address open questions in real-world applications, typically in AI-enabled and robotised factories.

Student projects available (winter semester 2023-24)

Topic 1: Visuo-Tactile Robot Manipulation for Tight Clearance Assembly.

In this work, you will research 6D pose estimation algorithms and contact-rich manipulation. More specifically:

- Evaluating the performance of some of the latest 6D pose estimation algorithms in various scenarios;

- Integrating visual perception into our original tactile insertion skill framework and software architecture;

- Verifying your algorithm with real robot experiments;

- Assisting with research activities, including experiments and publications;

- Possibility to extend the internship to a master’s thesis, aiming at publishing papers in top-tier robotics conferences.

Contact: Yansong Wu (Yansong.wu@tum.de), Dr. Fan Wu (f.wu@tum.de)

Topic 2: Synthesize Behavior Trees from Human Demonstrations for Industrial Assembly Tasks

Student projects available (winter semester 2022-23)

Intern + Thesis

Topic 1: Improving sample efficiency of Evolution Strategies in robot learning

Key word: robot learning, reinforcement learning, evolution strategies

Main work-packages:

- Compare and analyse the performance of different existing methods for generating candidate policies in the sampling stage, e.g., Covariance Matrix Adaptation (CMA) and Gaussian Mixture Models (GMM)

- Evaluate the use of a critic/discriminator, using a classifier (e.g., SVM) or other methods, to filter samples

- Establish a benchmarking program for evaluation using the robosuite framework.

- (Optional) research and develop novel technique inspired by counterfactual RL to further improve exploration efficiency and convergence speed

Expected distribution of activities:

Research: ⭐⭐⭐⭐⭐

Software development: ⭐⭐⭐

Experiment: ⭐⭐⭐⭐

Difficulty: Hard

Contact: Yansong Wu (Yansong.wu@tum.de), Dr. Fan Wu (f.wu@tum.de)

Topic 2: Learning to sequence skills from human demonstration

Key word: learning by demonstration, imitation learning, skill taxonomy

Main work-packages:

- Analyse a given industrial use case and generate a skill analysis report

- Implement an existing method, for example a Hidden Markov Model, to learn skill sequences that solve the use case, using kinesthetic teaching.

- Develop a new method based on a graph model or behaviour tree representation

- (optional) Develop extension to integrate visual observation

Expected distribution of activities:

Research: ⭐⭐⭐⭐⭐

Software development: ⭐⭐⭐⭐

Experiment: ⭐⭐⭐⭐

Difficulty: Hard

Contact: Yansong Wu (Yansong.wu@tum.de), Dr. Fan Wu (f.wu@tum.de)

Topic 3: Symbolic representation learning

Key word: physics-informed machine learning, computational physics, learning physics laws

Main work-packages:

- Fabricate a double pendulum with varying inertia and motor configuration based on a given design

- Conduct literature survey on recent relevant research works in learning symbolic system equations

- Conduct physical experiments to compare the existing developed method with a selected baseline

- (optional) develop more benchmarking examples in simulation

Expected distribution of activities:

Research: ⭐⭐⭐⭐⭐

Software development: ⭐⭐⭐

Experiment: ⭐⭐⭐⭐

Difficulty: Hard

Contact: Fernando Diaz Ledezma (fernando.diaz@tum.de), Dr. Fan Wu (f.wu@tum.de)

Topic 4: Simulation environment development for benchmarking collective robot learning

Key word: robot simulation, collective robot learning.

Possible work-packages:

- Get familiar with our currently used collective learning algorithm and the MIOS system (a customized proprietary robot operating system designed for collective learning).

- Integrate the MuJoCo simulation engine and bridge the control interface within the software framework.

- Develop a benchmarking environment for collective robot learning tasks, such as peg-in-hole insertion, pushing objects, or obstacle avoidance.

- (optional) algorithm analysis and comparison.

Expected distribution of activities:

Research: ⭐⭐⭐⭐⭐

Software development: ⭐⭐⭐⭐⭐

Experiment: ⭐⭐⭐⭐⭐

Difficulty: Very Hard

Contact: Samuel Schneider (samuel.schneider@tum.de), Yansong Wu (Yansong.wu@tum.de), Dr. Fan Wu (f.wu@tum.de)

Intern (Forschungspraxis)

Topic 1: Body exploration based on chaotic oscillators and intrinsic motivation

Key word: machine learning, system identification

Tasks:

- Implement a simulation of the real system in Gazebo/MuJoCo

- Survey and implement state-of-the-art model learning algorithms on the simulated system

- Implement the selected candidate algorithms on the real planar manipulator for experimental evaluation

Expected outcome: a review of several applicable model learning methods with variable parameters including experimental work on simulated and real systems.

Contact: Fernando Diaz Ledezma (fernando.diaz@tum.de)

Topic 2: Integration of force-impedance control with MIOS

Key words: Robot control

Main work-package:

- Integrate the unified force-impedance controller into our robot control software stack for the collective robot learning system

- Provide well-written documentation for the developed software component

- Create corresponding examples and tutorials

Expected distribution of activities:

Research: ⭐⭐⭐

Software development: ⭐⭐⭐⭐⭐

Experiment: ⭐⭐⭐

Necessary skills:

- C++

- Matlab Simulink

Contact: Yansong Wu (Yansong.wu@tum.de), Dr. Fan Wu (f.wu@tum.de)

Topic 3: Optimal sequential motion generation for compliant robot throwing tasks

Key words: soft/compliant robots, optimal control, motion primitives

Main work-packages:

- Assist in the development and fabrication of a robot arm driven by variable stiffness actuators

- Develop a novel method for sequential motion generation

Expected distribution of activities:

Research: ⭐⭐⭐⭐⭐

Software development: ⭐⭐⭐

Experiment: ⭐⭐⭐⭐

Contact: Dr. Fan Wu (f.wu@tum.de)

Topic 4: Object detection and robot grasping

Key words: Robot vision, grasping

more details later ...

Topic 5: Tool changing mechanism and/or multifunctional gripper design

Key words: AI Factory, tool changer, multifunctional gripper, mechanical design

more details later ...

Topic 6: 3D printing postprocessing robotic automation

Key words: additive manufacturing, robot manipulation, automation

more details later ...

Mechatronics System Development

About us

The TUM Munich Institute of Robotics and Machine Intelligence (MIRMI) is a globally visible interdisciplinary research center for machine intelligence, which is the integration of robotics, artificial intelligence, and perception. Its four central innovation sectors are the future of health, the future of work, the future of the environment, and the future of mobility. More than 60 professors from various TUM faculties cooperate within the framework of MIRMI.

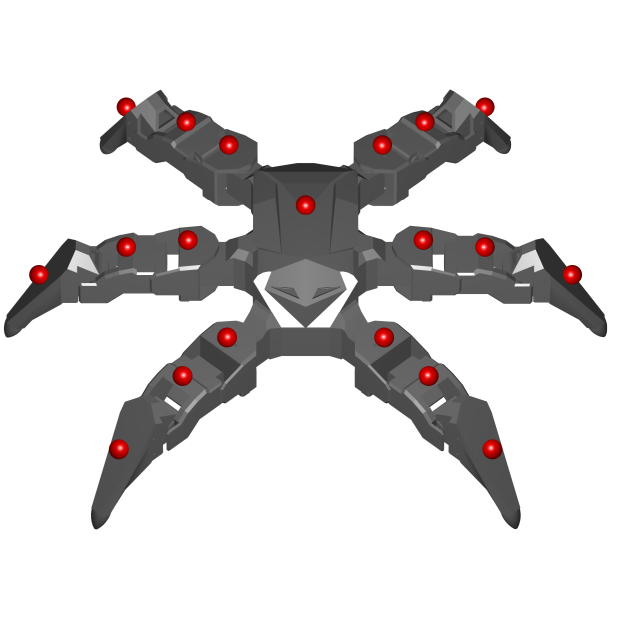

Description

One of the focus areas of our Robot Learning group is self-modeling: we study how robots can autonomously construct and refine their body schema. To complement this work, we need a simulated platform on which different algorithms for body schema learning can be implemented and tested. The Robot Learning Lab will use a simulated hexapod and a simulated humanoid robot for this purpose.

In this research internship, your first objective will be to define a simulated robot with a rich variety of proprioceptive and tactile sensors. Your second objective will be to interface the simulation with ROS to facilitate the implementation of control and learning algorithms.

Expected outcome: a fully working simulated robot that receives a variety of control commands and outputs a broad spectrum of sensory signals.

Tasks and responsibilities

- Defining the robot in a simulation environment such as Gazebo, MuJoCo, or CoppeliaSim

- Adding sensors to the robot to measure joint position, velocity, and torque. Additional force/torque sensors are required

- Adding virtual inertial measurement units to all the bodies

- Adding contact sensors to the end-effector and other areas of the robot’s surface

- Potentially extending the model with compliant joints

- Interfacing the system with ROS, RViz, and MoveIt

- Defining a Gym environment so that the system can be used in, for example, reinforcement learning settings

Requirements

We appreciate applications from robotics enthusiasts and students who want to pursue a research career in robotics and machine intelligence.

The following skills are required or recommended:

- Strong programming skills (C/C++, Python)

- Experience with Gazebo and ROS

- Familiarity with Linux-based systems

Application

Interested applicants should send the following documents via email to fernando.diaz@tum.de.

- A CV

- Academic transcripts

- (Optional) Code sample or GitHub page

- (Optional) Materials of previous projects

The position will be filled as soon as possible, and only shortlisted candidates will be notified. Preference will be given to applications received before 01.04.2024.

Data Protection Information:

When you apply for a position with the Technical University of Munich (TUM), you are submitting personal information. With regard to personal information, please take note of the Datenschutzhinweise gemäß Art. 13 Datenschutz-Grundverordnung (DSGVO) zur Erhebung und Verarbeitung von personenbezogenen Daten im Rahmen Ihrer Bewerbung. (data protection information on collecting and processing personal data contained in your application in accordance with Art. 13 of the General Data Protection Regulation (GDPR)). By submitting your application, you confirm that you have acknowledged the above data protection information of TUM.

Pos-type: Forschungspraxis/Internship, possible thesis extension. New application deadline: March 31, 2024

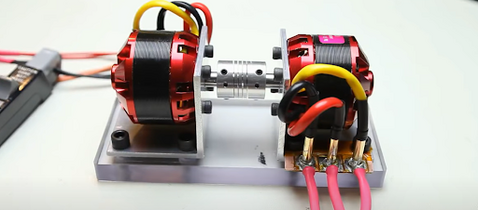

Picture for illustration purposes only. Source: Dummy load for BLDC controller testing, https://youtu.be/n16nrkDgMSA?si=UvVKYjV67vnbA-1a

Brushless (BLDC) motors are growing in popularity for robotics applications. In particular, thanks to their high power density, these motors can be combined with smaller gear ratios while still meeting the torque and speed requirements. For example, a key to the success of the MIT Mini Cheetah was the adoption of BLDC motors within the proprioceptive actuator concept [1].

The task is to understand the physical properties of BLDC actuators and to describe them mathematically. You will design test scenarios and program the control for them, including the absorber side (i.e., what the load applied to the motor under test will look like). You will collect, visualise, and analyse data from the experiments (e.g., plotting power and efficiency) and draw conclusions about the relation between the physical properties of different actuators (high-level design choices such as inrunner vs. outrunner, number of poles, control algorithm, etc.) and the collected results. Note that you will not start from scratch: you will be supported by in-house solutions for BLDC control, an existing testbed, and more.
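As an illustration of the kind of analysis meant by "plotting power, efficiency", the sketch below computes electrical input power, mechanical output power, and efficiency from synchronised samples of one test run. It assumes the testbed logs DC-bus voltage, phase-independent supply current, shaft torque, and shaft speed; the function and signal names are hypothetical and not part of the in-house tooling.

```python
import numpy as np


def power_and_efficiency(voltage_v, current_a, torque_nm, speed_rad_s):
    """Return electrical input power, mechanical output power and efficiency.

    Inputs are equal-length sequences of synchronised samples from one test run.
    The signal names are hypothetical; a real testbed log may differ.
    """
    v = np.asarray(voltage_v, dtype=float)
    i = np.asarray(current_a, dtype=float)
    tau = np.asarray(torque_nm, dtype=float)
    w = np.asarray(speed_rad_s, dtype=float)

    p_elec = v * i    # DC-bus input power [W]
    p_mech = tau * w  # shaft output power [W]

    # Efficiency is only defined here while the motor draws power (motoring);
    # samples with zero or negative input power are marked as NaN.
    eff = np.full_like(p_elec, np.nan)
    np.divide(p_mech, p_elec, out=eff, where=p_elec > 0)
    return p_elec, p_mech, eff


# Example with two made-up operating points on a 24 V supply.
p_elec, p_mech, eff = power_and_efficiency(
    voltage_v=[24.0, 24.0],
    current_a=[1.0, 2.0],
    torque_nm=[0.1, 0.3],
    speed_rad_s=[200.0, 100.0],
)
print(eff)  # roughly [0.833, 0.625]
```

Sweeping the absorber load and plotting `eff` against torque and speed gives the familiar efficiency map; regenerative samples are deliberately excluded because their efficiency has to be defined the other way round.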

Please see the attached PDF for a more detailed description (click on the picture above to open it).

What you will gain:

- Hands-on experience with, and an in-depth understanding of, brushless motors and their control

- Visualising and analysing data

- Best practices for embedded software development

- Experience in building, prototyping, and 3D printing

- Working with datasheets and documentation of various devices

- Hacking electronic signals (via oscilloscope, etc.)

- Insights in our System Development and access to our community

Requirements from candidates:

- Knowledge of C and MATLAB

- Working skills in Ubuntu operating system

- Understanding how Motors work

- Basics in Electronics and Mechanics

- English proficiency at C1 level, including reading academic papers

- A plus:

- Familiarity with Git

- Embedded software development

- Robotics

To apply, send your CV and a short motivation letter to the supervisors (with the senior supervisor in cc).

Supervisors

| M.Sc. Vasilije Rakcevic vasilije.rakcevic@tum.de | M.Sc. Edmundo Pozo Fortunić edmundo.pozo@tum.de |

Senior Supervisor

Dr.-Ing. Abdalla Swikir

abdalla.swikir@tum.de

[1] P. M. Wensing, A. Wang, S. Seok, D. Otten, J. Lang and S. Kim, "Proprioceptive Actuator Design in the MIT Cheetah: Impact Mitigation and High-Bandwidth Physical Interaction for Dynamic Legged Robots," in IEEE Transactions on Robotics, vol. 33, no. 3, pp. 509-522, June 2017, doi: 10.1109/TRO.2016.2640183.

Pos-type: Forschungspraxis/Internship, possible thesis extension. New application deadline: March 31, 2024

Picture for illustration purposes only. Source: https://youtu.be/n16nrkDgMSA?si=UvVKYjV67vnbA-1a

Brushless (BLDC) motors are growing in popularity for robotics applications. They are particularly interesting because of their power density and availability. A good example of their capabilities is the success of the MIT Mini Cheetah with the proprioceptive actuator concept [1]. There, by leveraging a low gear ratio, back-drivability, and high torque (power) density, the developers built an actuator that is powerful and stable enough even for acrobatic maneuvers.
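To see why a low gear ratio helps back-drivability, consider an ideal, lossless gearbox with ratio $N$ between a motor (rotor inertia $J_m$, torque $\tau_m$, speed $\omega_m$) and the joint output. These are standard textbook relations, stated here as a simplified illustration rather than a model of the actuators in [1] or [2]:

$$
\tau_{\text{out}} = N\,\tau_m, \qquad
\omega_{\text{out}} = \frac{\omega_m}{N}, \qquad
J_{\text{refl}} = N^2 J_m .
$$

Because the rotor inertia felt at the output grows with $N^2$, going from, say, $N = 100$ to $N = 6$ reduces the reflected inertia by a factor of $(100/6)^2 \approx 278$, which makes the joint much easier to back-drive and more tolerant of impacts. The price is that the motor itself must deliver more torque, which is where the high torque density of BLDC motors matters.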

We are working on our own solutions for BLDC actuation. For this purpose, we have developed controllers that are at the heart of all our recent hardware developments [2]. We are looking to enhance them and integrate them better with other projects.

Please see the attached PDF for a more detailed description (click on the picture above to open it).

What you will gain:

- Hands-on experience with, and an in-depth understanding of, IMUs

- Understanding motor control and various aspects of DC motors

- Best practices for embedded software development

- Working with datasheets and documentation of various devices

- Hacking electronic signals (via oscilloscope, etc.)

- Insights in our System Development and access to our community

Requirements from candidates:

- Knowledge of C

- Basics of microcontroller programming

- Basics in Electronics and Mechanics

- English proficiency at C1 level, including reading academic papers

- A plus:

- Arduino programming

- Familiarity with Git

We welcome initiative and always aim to support new ideas. This internship is a great opportunity to get familiar with our work and to gain extensive hands-on knowledge of embedded system development.

To apply, send your CV and a short motivation letter to the supervisors (with the senior supervisor in cc).

Supervisors

| M.Sc. Vasilije Rakcevic vasilije.rakcevic@tum.de | M.Sc. Edmundo Pozo Fortunić edmundo.pozo@tum.de |

Senior Supervisor

Dr.-Ing. Abdalla Swikir

abdalla.swikir@tum.de

[1] P. M. Wensing, A. Wang, S. Seok, D. Otten, J. Lang and S. Kim, "Proprioceptive Actuator Design in the MIT Cheetah: Impact Mitigation and High-Bandwidth Physical Interaction for Dynamic Legged Robots," in IEEE Transactions on Robotics, vol. 33, no. 3, pp. 509-522, June 2017, doi: 10.1109/TRO.2016.2640183.

[2] Fortunić, E. P., Yildirim, M. C., Ossadnik, D., Swikir, A., Abdolshah, S., & Haddadin, S. (2023). Optimally Controlling the Timing of Energy Transfer in Elastic Joints: Experimental Validation of the Bi-Stiffness Actuation Concept. arXiv [Eess.SY]. Retrieved from http://arxiv.org/abs/2309.07873

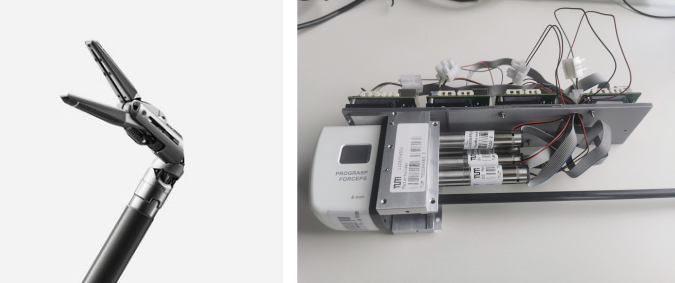

Developing tactile and dexterous end effectors for the surgical robotic system requires expertise in designing and integrating sensing interfaces in a highly compact space. It is also important to oversee the overall development of the robotic testbed, including the control and sensing architecture, so that the systems integrate seamlessly from the bottom up. The operator needs the crucial sense of touch, which requires sensing low-magnitude normal and shear forces as well as multiple contact points. Additionally, the end effectors need to be modular to handle different surgical tools. Furthermore, this sensory information needs to be conveyed to the operator side and integrated into the overall control and sensing architecture of the robotic system.

Our institute has already developed an actuated EndoWrist. It comprises four motors connected to pulleys, which in turn drive the joints of the EndoWrist through a cable mechanism.

The research in this master's thesis focuses primarily on kinematic modeling of the available EndoWrist in order to obtain the coupled transformation between motor space and joint space. Once this is implemented, the thesis will progress to dynamic identification (with emphasis on friction modeling in the EndoWrist) and to external torque estimation from motor current measurements. Finally, the proposed model will be validated on a testbed.
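For a cable-driven wrist, the motor-to-joint relation is often written as a constant coupling matrix. The sketch below shows that idea under loud assumptions: a hypothetical 4x4 matrix `C` with `theta = C @ q` (motor angles from joint angles), an assumed joint order of roll, wrist pitch, and two jaws, and made-up coupling values. Identifying the real `C` for the EndoWrist is exactly what the thesis is about; the torque mapping follows from a power balance over ideal, friction-free cables.

```python
import numpy as np

# Hypothetical coupling matrix C with theta = C @ q (q: joint angles,
# theta: motor angles; pulley radius ratios are folded into C).
# Assumed joint order: [roll, wrist pitch, jaw 1, jaw 2]. The jaw cables are
# assumed to run over the pitch pulleys, so pitch motion also moves the jaw
# motors. The numbers are illustrative, not identified EndoWrist values.
C = np.array([
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, -0.6, 1.0, 0.0],
    [0.0, 0.6, 0.0, 1.0],
])


def joints_to_motors(q):
    """Motor angles needed to reach joint angles q."""
    return C @ np.asarray(q, dtype=float)


def motors_to_joints(theta):
    """Joint angles from measured motor angles (solves C q = theta)."""
    return np.linalg.solve(C, np.asarray(theta, dtype=float))


def motor_to_joint_torque(tau_m):
    """Map motor torques to joint torques.

    Equating power, tau_m . theta_dot = tau_q . q_dot with theta_dot = C q_dot,
    gives tau_q = C^T tau_m (ideal, friction-free cables).
    """
    return C.T @ np.asarray(tau_m, dtype=float)


def estimate_motor_torque(current_a, k_t):
    """Motor torque from measured current, tau_m = k_t * i (k_t hypothetical)."""
    return k_t * np.asarray(current_a, dtype=float)
```

In practice, friction and the instrument's own dynamics must be subtracted from the current-based torque before the remainder can be read as an external torque, which is what the dynamic identification step targets.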

Prerequisites

- Good MATLAB skills

- Good understanding of coordinate frames and 3D transformations

- Excellent mathematical knowledge, specifically linear algebra

- Creative and independent thinker

Helpful but not required

- Experience with ROS

- Experience with Maxon motor controller

- Python, C++

Related Literature:

- Lee, C., Park, Y. H., Yoon, C., Noh, S., Lee, C., Kim, Y., ... & Kim, S. (2015). A grip force model for the da Vinci end-effector to predict a compensation force. Medical & biological engineering & computing, 53, 253-261.

- S. Kim and D. Y. Lee, "Friction-model-based estimation of interaction force of a surgical robot," 2015 15th International Conference on Control, Automation and Systems (ICCAS), Busan, Korea (South), 2015, pp. 1503-1507, doi: 10.1109/ICCAS.2015.7364591.

- Longmore, S. K., Naik, G., & Gargiulo, G. D. (2020). Laparoscopic robotic surgery: Current perspective and future directions. Robotics, 9(2), 42.

- Guadagni, S., Di Franco, G., Gianardi, D., Palmeri, M., Ceccarelli, C., Bianchini, M., ... & Morelli, L. (2018). Control comparison of the new EndoWrist and traditional laparoscopic staplers for anterior rectal resection with the Da Vinci Xi: a case study. Journal of Laparoendoscopic & Advanced Surgical Techniques, 28(12), 1422-1427.

- Abeywardena, S., Yuan, Q., Tzemanaki, A., Psomopoulou, E., Droukas, L., Melhuish, C., & Dogramadzi, S. (2019). Estimation of tool-tissue forces in robot-assisted minimally invasive surgery using neural networks. Frontiers in Robotics and AI, 6, 56.

For more information, please contact:

Mario Tröbinger (mario.troebinger@tum.de)

Dr. Hamid Sadeghian (hamid.sadeghian@tum.de)

Location:

Forschungszentrum Geriatronik, MIRMI, TUM, Bahnhofstraße 37, 82467 Garmisch-Partenkirchen.

MIRMI, TUM, Georg-Brauchle-Ring 60-62, 80992 München.

Collaborative robots (cobots) are robots designed to work alongside humans and share tasks with them. This cooperation enables applications that go beyond those of traditional caged-off industrial robots, whose only purpose is to perform specific, repetitive tasks in a predefined sequence. Cobots, by contrast, can be used in diverse scenarios; in domestic environments, for instance, they could be used for tasks such as cooking and cleaning, as well as many other basic activities of daily living. Before cobots can be employed in domestic environments, the following objectives, O1 and O2, must be attained:

O1 End-users should be able to teach new tasks to the cobot and interact with it without the need for extensive programming knowledge.

O2 Cobots should be able to navigate through obstacles and avoid collisions with objects to perform their tasks efficiently and safely.

One way to accomplish the first objective is the Learning from Demonstration (LfD) paradigm, which aims to teach robots the meaning of a task by generalizing from several observed demonstrations rather than simply recording and replaying them. The fundamental principle of this paradigm is that end-users can teach robots new tasks by providing examples of the task being performed, eliminating the need for coding on their part. An established method to implement LfD in robots is to encode demonstrations in stable dynamical systems (DS). More precisely, the position and velocity of the end-effector (or of the joints) are recorded for each demonstration, and optimization algorithms are used to find a stable dynamical system that is as close as possible to the vector field associated with these demonstrations. Moreover, by guaranteeing that the endpoint of the demonstrated trajectory is a globally asymptotically stable equilibrium point of the DS, one can guarantee that the robot always moves to the goal, even if it starts from different initial conditions or is pushed away in the middle of the trajectory.
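The stability argument above can be made concrete with the simplest possible DS, a linear one, `x_dot = A (x - x_goal)`. The sketch below is a deliberately simplified stand-in for the nonlinear, stability-constrained methods in the literature (such as SEDS): it uses synthetic demonstrations generated from a known system, fits `A` by plain least squares, and only afterwards checks stability (all eigenvalues of `A` with negative real part), whereas proper methods enforce stability during the optimization. All function names are hypothetical.

```python
import numpy as np


def fit_linear_ds(X, X_dot, x_goal):
    """Least-squares fit of x_dot = A (x - x_goal) to demonstration samples.

    X, X_dot: arrays of shape (n_samples, dim) with positions and velocities.
    Plain least squares does NOT enforce stability; it is checked separately.
    """
    E = np.asarray(X) - x_goal
    # Solve E @ A.T ~= X_dot for A.T (one column per output dimension).
    A_T, *_ = np.linalg.lstsq(E, np.asarray(X_dot), rcond=None)
    return A_T.T


def is_globally_asymptotically_stable(A):
    """x_dot = A (x - x*) is GAS at x* iff all eigenvalues of A have Re < 0."""
    return bool(np.all(np.linalg.eigvals(A).real < 0.0))


def rollout(A, x0, x_goal, dt=0.01, steps=2000, push=None, push_step=500):
    """Integrate the learned DS with explicit Euler, optionally applying a push."""
    x = np.array(x0, dtype=float)
    for k in range(steps):
        if push is not None and k == push_step:
            x = x + push  # external perturbation in the middle of the motion
        x = x + dt * (A @ (x - x_goal))
    return x


def make_demos(A_true, x_goal, starts, dt=0.01, steps=300, noise=0.01, seed=0):
    """Synthetic demonstrations from a known stable system, standing in for
    recorded end-effector positions and (noisy) velocities."""
    rng = np.random.default_rng(seed)
    X, X_dot = [], []
    for x0 in starts:
        x = np.array(x0, dtype=float)
        for _ in range(steps):
            v = A_true @ (x - x_goal)
            X.append(x.copy())
            X_dot.append(v + noise * rng.standard_normal(x.shape))
            x = x + dt * v
    return np.array(X), np.array(X_dot)


x_goal = np.array([0.5, 0.2])
A_true = np.array([[-1.0, 0.5], [-0.5, -1.0]])
X, X_dot = make_demos(A_true, x_goal, starts=[[1.5, 1.0], [-0.5, 1.2], [0.0, -0.8]])
A = fit_linear_ds(X, X_dot, x_goal)
print(is_globally_asymptotically_stable(A))  # True
# A start never seen in the demos, plus a mid-motion push, still ends at the goal.
x_end = rollout(A, [-1.0, -1.0], x_goal, push=np.array([0.3, -0.3]))
```

A linear DS can only reproduce straight or spiralling motions toward the goal, which is why practical LfD methods use nonlinear models; the guarantee being illustrated, convergence from any start and after any push, is the same.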

At the same time, cobots must learn to perform these demonstrated tasks while avoiding obstacles, to prevent collisions with furniture, walls, and other objects in the home, which could cause damage or injure people or pets. While learning stable DSs achieves objective O1, the vanilla techniques do not solve O2 by themselves: most techniques that learn stable dynamical systems must be adapted to account for the presence of static and/or dynamic obstacles [1, 2].

By integrating techniques for learning stable dynamical systems with state-of-the-art obstacle avoidance methods, such as recent techniques from the literature on control barrier functions and linear temporal logic, this thesis will investigate innovative methods for learning stable DSs with obstacle avoidance, addressing objectives O1 and O2 and enabling cobots to learn to perform tasks robustly and safely.
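One way to see how a control barrier function (CBF) can be layered on a stable DS is a minimal safety filter. The sketch assumes a point robot with single-integrator dynamics `x_dot = u`, one circular obstacle, and the linear DS `u_nom = A (x - x_goal)` as the nominal command; it is a generic textbook CBF filter, not the method this thesis will develop. With the barrier `h(x) = ||x - c||^2 - r^2`, the condition `dh/dt >= -alpha * h` is a single linear constraint on `u`, so the usual quadratic program has a closed-form solution.

```python
import numpy as np


def cbf_filter(x, u_nom, center, radius, alpha):
    """Minimally change u_nom so that the barrier h(x) = ||x - c||^2 - r^2
    satisfies dh/dt >= -alpha * h for the single integrator x_dot = u."""
    d = x - center
    a = 2.0 * d                          # gradient of h
    b = -alpha * (d @ d - radius**2)     # required lower bound on a . u
    slack = a @ u_nom - b
    if slack >= 0.0:                     # nominal command is already safe
        return u_nom
    # Closed-form solution of: min ||u - u_nom||^2  s.t.  a . u >= b
    return u_nom - (slack / (a @ a)) * a


def simulate(x0, x_goal, A, center, radius, alpha=2.0, dt=0.01, steps=2000,
             use_filter=True):
    """Roll out the stable linear DS u_nom = A (x - x_goal), optionally filtered.

    Returns the final state and the smallest barrier value seen along the way.
    """
    x = np.array(x0, dtype=float)
    min_h = np.inf
    for _ in range(steps):
        u = A @ (x - x_goal)
        if use_filter:
            u = cbf_filter(x, u, center, radius, alpha)
        x = x + dt * u
        d = x - center
        min_h = min(min_h, float(d @ d - radius**2))
    return x, min_h


A = -np.eye(2)                           # stable DS with its equilibrium at x_goal
x_goal = np.zeros(2)
center, radius = np.array([-1.0, 0.0]), 0.4
x_safe, h_safe = simulate([-2.0, 0.3], x_goal, A, center, radius)
x_raw, h_raw = simulate([-2.0, 0.3], x_goal, A, center, radius, use_filter=False)
```

Here the unfiltered DS cuts straight through the obstacle (`h_raw < 0`), while the filtered one slides around it and still converges. The example also hints at the open problems: a start lying exactly on the line through the obstacle centre and the goal would stall at a saddle point, and stability of the filtered system is no longer guaranteed in general.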

References

[1] S. M. Khansari-Zadeh and A. Billard, “A dynamical system approach to real-time obstacle avoidance,” Autonomous Robots, vol. 32, pp. 433–454, 2012.

[2] L. Huber, J.-J. Slotine, and A. Billard, “Fast obstacle avoidance based on real-time sensing,” IEEE Robotics and Automation Letters, 2022.

Contact:

Dr.-Ing. Abdalla Swikir abdalla.swikir@tum.de

Dr. Hugo Kussaba hugo.kussaba@tum.de

Location: RSI, MIRMI, TUM, Georg-Brauchle-Ring 60-62, 80992 München.

Forschungspraxis / Internship / Bachelor Thesis / Master Thesis

Background

Accurate pose estimation of surgical tools is paramount in robotic surgery, as it increases precision and ultimately improves patient outcomes. Forward kinematics alone often falls short because it cannot account for uncertainties such as the cable compliance of EndoWrists, motor backlash, and environmental noise.

In our lab, we have developed a cutting-edge surgical testbed with three Franka Emika robots equipped with a professional endoscope and EndoWrists. This setup provides a unique platform for tackling real-world challenges in robotic surgery.

To enhance autonomy in robotic surgery, we aim to develop an innovative pose estimation algorithm based on endoscopic images. This thesis tackles this challenge and could make a significant contribution to the future of robotic surgery.

Tasks

- Perform camera calibration for the endoscopes

- Develop a pose estimation algorithm for EndoWrists

- Evaluate the methods on our robotic surgery setup

Prerequisites

- Good Python and C++ programming skills; experience with ROS 2

- Good understanding of state estimation algorithms, e.g., Kalman filters or particle filters

- Good knowledge of computer vision, e.g., camera calibration and feature extraction

- Goal-oriented mindset and motivation for the topic
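To illustrate how a Kalman filter could combine forward kinematics with endoscopic measurements, the sketch below estimates a slowly drifting offset between the tool-tip position predicted by forward kinematics and the position detected in the endoscope image, then uses it to correct the kinematic estimate. It is a deliberately simple illustration, not taken from the references: the random-walk offset model, the noise levels, the class name, and the simulated data are all assumptions, and a real solution would estimate the full 6-DoF pose and handle partial visibility.

```python
import numpy as np


class OffsetKalmanFilter:
    """Kalman filter for a slowly drifting offset b between the tool-tip position
    predicted by forward kinematics and the position seen by the endoscope.

    Assumed model (hypothetical, for illustration):
        b_k = b_{k-1} + w_k,                 w_k ~ N(0, Q)  (random walk)
        z_k = p_cam_k - p_fk_k = b_k + v_k,  v_k ~ N(0, R)
    """

    def __init__(self, dim=3, q=1e-9, r=4e-6, p0=1e-2):
        self.b = np.zeros(dim)        # offset estimate [m]
        self.P = np.eye(dim) * p0     # estimate covariance [m^2]
        self.Q = np.eye(dim) * q      # drift per step (e.g. cable stretch)
        self.R = np.eye(dim) * r      # endoscope measurement noise

    def predict(self):
        # Random walk: the mean is unchanged, the uncertainty grows by Q.
        self.P = self.P + self.Q

    def update(self, p_fk, p_cam):
        z = np.asarray(p_cam, dtype=float) - np.asarray(p_fk, dtype=float)
        S = self.P + self.R                   # innovation covariance (H = I)
        K = np.linalg.solve(S, self.P).T      # K = P S^-1 (P, S symmetric)
        self.b = self.b + K @ (z - self.b)
        self.P = (np.eye(self.b.size) - K) @ self.P

    def corrected(self, p_fk):
        """Forward-kinematics position corrected by the estimated offset."""
        return np.asarray(p_fk, dtype=float) + self.b


# Simulated data: a constant 4/-2/3 mm offset and 2 mm camera noise (made up).
rng = np.random.default_rng(1)
true_offset = np.array([0.004, -0.002, 0.003])
kf = OffsetKalmanFilter()
for _ in range(500):
    p_fk = rng.uniform(-0.05, 0.05, size=3)
    p_cam = p_fk + true_offset + 0.002 * rng.standard_normal(3)
    kf.predict()
    kf.update(p_fk, p_cam)
```

The ratio of `q` to `r` sets how quickly the filter trusts new image measurements over its current estimate; a particle filter would replace this linear-Gaussian model when the measurement model becomes strongly nonlinear.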

References

[1] Moccia, Rocco, et al. "Vision-based dynamic virtual fixtures for tools collision avoidance in robotic surgery." IEEE Robotics and Automation Letters 5.2 (2020): 1650-1655.

[2] Staub, Christoph. Micro Endoscope based Fine Manipulation in Robotic Surgery. Diss. Technische Universität München, 2013.

[3] Richter, F., Lu, J., Orosco, R. K., et al. "Robotic tool tracking under partially visible kinematic chain: A unified approach." IEEE Transactions on Robotics 38.3 (2021): 1653-1670.

Contact

Zheng Shen (zheng.shen@tum.de)

Fabian Jacob (fabian.jakob@tum.de)

Chair of Robotics Science and Systems Intelligence, Munich Institute of Robotics and Machine Intelligence (MIRMI)

Development and Evaluation of low level microcontroller firmware and PCB Design

At MIRMI, we aim to develop the next generation of artificial hands for robotics and prosthetics. To achieve this, we need to develop the components that bring cutting-edge, state-of-the-art technology to our designs. Within our project, we design, test, and combine the best tactile sensors, finger designs, actuators, driving mechanisms, control sensors, control strategies, and so on. To do this, we need an electronic platform that provides the necessary resources for each component design and easily adapts to the changes and revisions that have to be made. This includes motor controllers, real-time communication modules, sensor data acquisition, etc.

We are currently looking for a student to work with us part-time within our multidisciplinary team of mechanical, electronic, mechatronic, and control engineers and students.

Type: Wissenschaftliche Hilfskraft (HiWi position), 10-20 h/week

Short-term Tasks:

1. Design, implementation, and testing of the microcontroller firmware for the multimodal sensor data acquisition PCB of our hand palm.

Long-term Tasks:

- Maintenance of our firmware database and development of new firmware for electronic components based on the Microchip ATSAM (Arm) family of microcontrollers.

- Design, implementation, and testing of new PCBs for our new prototypes.

Requirements:

- C programming skills (preferably in microcontroller programming). Knowledge of and experience with Atmel/Microchip Studio is preferred.

- Knowledge of and experience in PCB design using Autodesk EAGLE (or Fusion).

- Good experience using Git.

- Knowledge of Simulink/MATLAB.

What we expect from you:

- Motivation to work in an outstanding team of researchers, contribute to our scientific work, and help build up our laboratory infrastructure

- Autonomous working habits

What we can offer:

- Highly dynamic scientific environment

- Working at the interface between human and technology

- Investigation of real world problems

- Interdisciplinary topics

We currently also offer various topics in the field of mechanical engineering, electrical engineering, informatics and comparable backgrounds. Please contact us for further information on current topics.

Contact:

Please send me an email including:

- CV

- Overview of courses with grades (only students with a grade average of 2.5 or better)

- Short statement of motivation and the topics you are interested in
