Spatial perception with hearing aids

Spatial perception of sounds comprises aspects such as the distance, azimuth, elevation and width of a sound source. Normal-hearing listeners are very good at localizing a sound in space. Numerous studies have shown a resolution as fine as about 1 degree for horizontal localization, based mainly on the processing of interaural time differences (ITDs) and interaural level differences (ILDs). Other aspects of spatial perception rely on further cues: elevation and front-back discrimination depend on the spectral filtering of incoming sounds by the outer ear, distance perception on the sound’s intensity and the amount of reverberation, and the perceived width of a source on the similarity of the sound at the two ears. When using hearing aids, many of these cues are affected by the microphone location (behind the ear or at the entrance of the ear canal), the bandwidth and frequency response of the hearing aid, and the algorithms used in the devices. These “technical” aspects interact with the degradation of the auditory system caused by hearing impairment, making it hard for many hearing aid users to spatially separate competing sound sources.
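To give a feeling for the size of the time differences involved, the following minimal sketch estimates the ITD for a source in the horizontal plane. It uses Woodworth's classic spherical-head approximation, which is an assumption introduced here for illustration and not a model taken from our studies. The function name `woodworth_itd`, the head radius of 8.75 cm and the speed of sound of 343 m/s are likewise illustrative choices.

```python
import math

# Illustrative assumptions, not values from the text above:
HEAD_RADIUS_M = 0.0875       # typical adult head radius in metres
SPEED_OF_SOUND_M_S = 343.0   # speed of sound in air at roughly 20 degrees Celsius


def woodworth_itd(azimuth_deg, head_radius_m=HEAD_RADIUS_M,
                  speed_of_sound=SPEED_OF_SOUND_M_S):
    """Approximate ITD in seconds for a distant source in the horizontal plane.

    Woodworth's spherical-head formula: ITD = (a / c) * (theta + sin(theta)),
    with theta the azimuth in radians (0 = straight ahead, 90 = to the side).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must lie between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return head_radius_m / speed_of_sound * (theta + math.sin(theta))


if __name__ == "__main__":
    # Print the approximate ITD in microseconds for a few source directions.
    for azimuth in (1, 10, 45, 90):
        itd_us = woodworth_itd(azimuth) * 1e6
        print(f"{azimuth:3d} deg -> ITD of about {itd_us:6.1f} microseconds")
```

Under these assumptions, a source 1 degree off the midline produces an ITD of roughly 9 microseconds, and a source directly to the side roughly 0.65 milliseconds. The simple model ignores frequency dependence, head shape and the outer ear, so it should be read as an order-of-magnitude illustration only.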

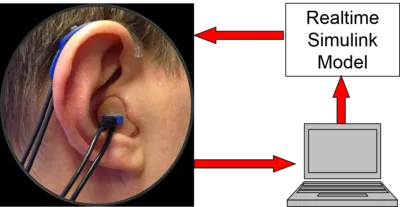

Many of these aspects are not well understood. Our aim is to study and model the problems of auditory scene analysis and spatial perception that arise from hearing loss and the use of hearing aids. In experiments with listeners we often use a real-time system to study new hearing aid algorithms (see Figure 1). The participant sits in a ring of loudspeakers, our Simulated Open Field Environment (SOFE), and listens to the sound while wearing hearing device dummies, as in everyday life. We test, e.g., perceived distance and other spatial aspects for different hearing aid parameters. For example, for distance perception we found differences between sounds presented from the front and from the back, and between sounds picked up by microphones behind the ear and in the ear (Gomez & Seeber 2015, 2016). We also investigate sound quality, comparing different algorithms and microphone positions by means of the “semantic differential” method.

We thank Phonak AG for supporting our research.

The SOFE lab is funded by BMBF 01 GQ 1004B.

Staff

Current:

Norbert Kolotzek

Past:

Gabriel Gomez

Ian Wiggins

Selected Publications

Gomez, G.; Seeber, B.U.: Distanzwahrnehmung in virtuellen Räumen für Schalle von vorne und hinten. Fortschritte der Akustik -- DAGA '15, Dt. Ges. f. Akustik e.V. (DEGA), pp. 1628-1629, 2015.

Gomez, G.; Seeber, B.U.: Influence of the Hearing Aid Microphone Position on Distance Perception and Front-Back Confusions with a Static Head. Proc. 18. Jahrestagung Deutsche Gesellschaft für Audiologie e.V. (DGA), Dt. Ges. f. Audiologie, pp. 1-5, 2015.

Wiggins, I.M.; Seeber, B.U.: Linking dynamic-range compression across the ears can improve speech intelligibility in spatially separated noise. J. Acoust. Soc. Am. 133 (1), pp. 1004-1016, 2013.