The TUM-IITKGP Gait Database

Introduction to Gait Recognition

Person identification by biometric features is a well-established research area. The main focus has so far been on physiological features such as face, iris and fingerprint. In addition, behavior-based features such as voice, signature and gait can be used for person identification. The main advantage of using gait over physiological features is that people can be identified from large distances and without their direct cooperation. For example, a person's gait signature can be extracted from low-resolution images in which the face is not even visible. Also, no direct interaction with a sensing device is necessary, which allows for covert identification. Thus gait recognition has great potential in video surveillance, tracking and monitoring.

Studies suggest that if all gait movements are considered, gait is unique to each person. These findings are the basis of the assumption that recognition using only gait must also be possible for a computer system. Over the last decade, the field of recognizing people using gait features has received remarkable attention. A multitude of methods and techniques for feature extraction as well as for classification have been developed, and experimental results are promising.

Why do we need a new database?

While good datasets for training and evaluation are available, we find that all of them fail to address one important challenge: occlusions. Occlusions are a nuisance, but they are omnipresent in practice and occur especially frequently in real-world surveillance scenarios. Typical gait recognition algorithms require a full gait cycle for recognition. Under occlusion, however, extracting a full gait cycle becomes a challenging problem. In heavy occlusion, parts of the gait cycle might be visible, while other parts are obscured by another person walking in front. The challenge then lies in stitching together parts of different gait cycles in order to obtain one complete gait cycle. Alternatively, gait recognition algorithms could be developed for which parts of the gait cycle are sufficient. To date, no algorithm is capable of handling partially observable gait cycles. We therefore present the TUM-IITKGP gait dataset, which can be used to specifically address occlusions.

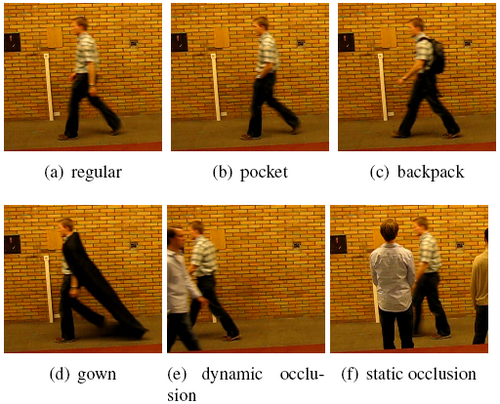

To this end, the presented database includes recordings with two kinds of occlusions: dynamic occlusions, caused by people walking through the camera's line of sight, and static occlusions, caused by people standing in the scene in front of the person of interest. In addition to specifically addressing the occlusion challenge, the TUM-IITKGP dataset also features three new configuration variations, which allow algorithms to be tested for their ability to handle changes in appearance.

Description of the database

Human gait is an important biometric feature for the identification of people. We present a new dataset for gait recognition that overcomes a crucial limitation of other state-of-the-art gait recognition databases. More specifically, this database addresses the problem of dynamic and static inter-object occlusion. Furthermore, this dataset offers three new kinds of gait variations, which allow for challenging evaluation of recognition algorithms.

For details on the database please see our WSCG paper, which also presents baseline algorithms.

Download

The database is provided as one compressed zip file.

File Format

The files are named "IDxxx_y.avi", where xxx is the ID of the person and y is the recording configuration (1=regular, 2=handinpocket, 3=backpack, 4=gown, 5=dynamicOcclusion, 6=staticOcclusion).
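As an illustration of the naming scheme, the following minimal Python sketch splits a file name into person ID and recording configuration. It is not part of the database distribution: the names `parse_recording_name`, `Recording` and `CONFIGURATIONS` are hypothetical, and the sketch assumes that xxx consists of decimal digits only (for example "ID001_5.avi").

```python
import re
from typing import NamedTuple

# Configuration codes as listed in the file format description.
CONFIGURATIONS = {
    1: "regular",
    2: "handinpocket",
    3: "backpack",
    4: "gown",
    5: "dynamicOcclusion",
    6: "staticOcclusion",
}

# Assumption: the person ID (xxx) is written with decimal digits only.
_FILENAME_PATTERN = re.compile(r"ID(\d+)_([1-6])\.avi")


class Recording(NamedTuple):
    person_id: int
    config_code: int
    config_name: str


def parse_recording_name(filename: str) -> Recording:
    """Split a name of the form IDxxx_y.avi into person ID and configuration."""
    match = _FILENAME_PATTERN.fullmatch(filename)
    if match is None:
        raise ValueError(f"not a valid recording name: {filename!r}")
    person_id = int(match.group(1))
    config_code = int(match.group(2))
    return Recording(person_id, config_code, CONFIGURATIONS[config_code])
```

For example, `parse_recording_name("ID001_5.avi")` yields person ID 1 with the configuration name "dynamicOcclusion", which makes it straightforward to group recordings by person or to select only the occlusion sequences for evaluation.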

Download

Download TUM-IITKGP Gait Database

Acknowledgement

If you use this database in your research, please cite the following paper:

- Martin Hofmann, Shamik Sural, Gerhard Rigoll: "Gait Recognition in the Presence of Occlusion: A New Dataset and Baseline Algorithms", In: 19th International Conference on Computer Graphics, Visualization and Computer Vision (WSCG), Plzen, Czech Republic, Jan 31 - Feb 3, 2011

The recording of this data has been partially funded by the European Project FP-214901 (PROMETHEUS) as well as by an Alexander von Humboldt Fellowship for Experienced Researchers.