Scaled Hand Gesture Dataset

Talking with Your Hands: Scaling Hand Gestures and Recognition with CNNs

The use of hand gestures provides a natural alternative to cumbersome interface devices for Human-Computer Interaction (HCI) systems. As technology advances and communication between humans and machines becomes more complex, HCI systems should be scaled accordingly to accommodate the added complexity. In this paper, we propose a methodology to scale hand gestures by forming them from predefined gesture-phonemes. The total number of possible hand gestures can be increased exponentially by increasing the number of gesture-phonemes used. To this end, we introduce a new benchmark dataset named Scaled Hand Gestures Dataset (SHGD), with only gesture-phonemes in its training set and 3-tuple gestures in the test set.

SHGD contains 15 single hand gestures, each recorded in infrared (IR) and depth modalities using the Infineon® IRS1125C REAL3™ 3D Image Sensor. There are in total 324 recordings from 27 distinct subjects in the dataset. Recordings of 8 subjects are reserved for testing, which makes up about 30% of the dataset. Each recording contains 15 gesture samples, one for each class. Every subject makes 12 video recordings using two hands under 6 different environments, which are designed to increase network robustness against different lighting conditions and background disturbances. These environments are (1) indoors under normal daylight, (2) indoors under daylight and with an extra person in the background, (3) indoors at night under artificial lighting, (4) indoors in total darkness, (5) outdoors under intense sunlight and (6) outdoors under normal sunlight. We simulated the outdoor environments with bright lights: two lights for “intense sunlight” and one light for “normal sunlight”.
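The figures in the paragraph above can be cross-checked with a few lines of arithmetic. This is only a sanity-check sketch: the variable names are illustrative, and the sample count ignores the fact that each gesture is available in both IR and depth.

```python
# Numbers taken from the dataset description above.
subjects = 27
hands = 2
environments = 6
samples_per_recording = 15  # one sample per gesture class
test_subjects = 8

recordings_per_subject = hands * environments           # 12
total_recordings = subjects * recordings_per_subject    # 324
total_samples = total_recordings * samples_per_recording  # 4860 (per modality, an assumption)
test_fraction = test_subjects / subjects                # roughly 0.30

print(total_recordings, total_samples, round(test_fraction, 3))
```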

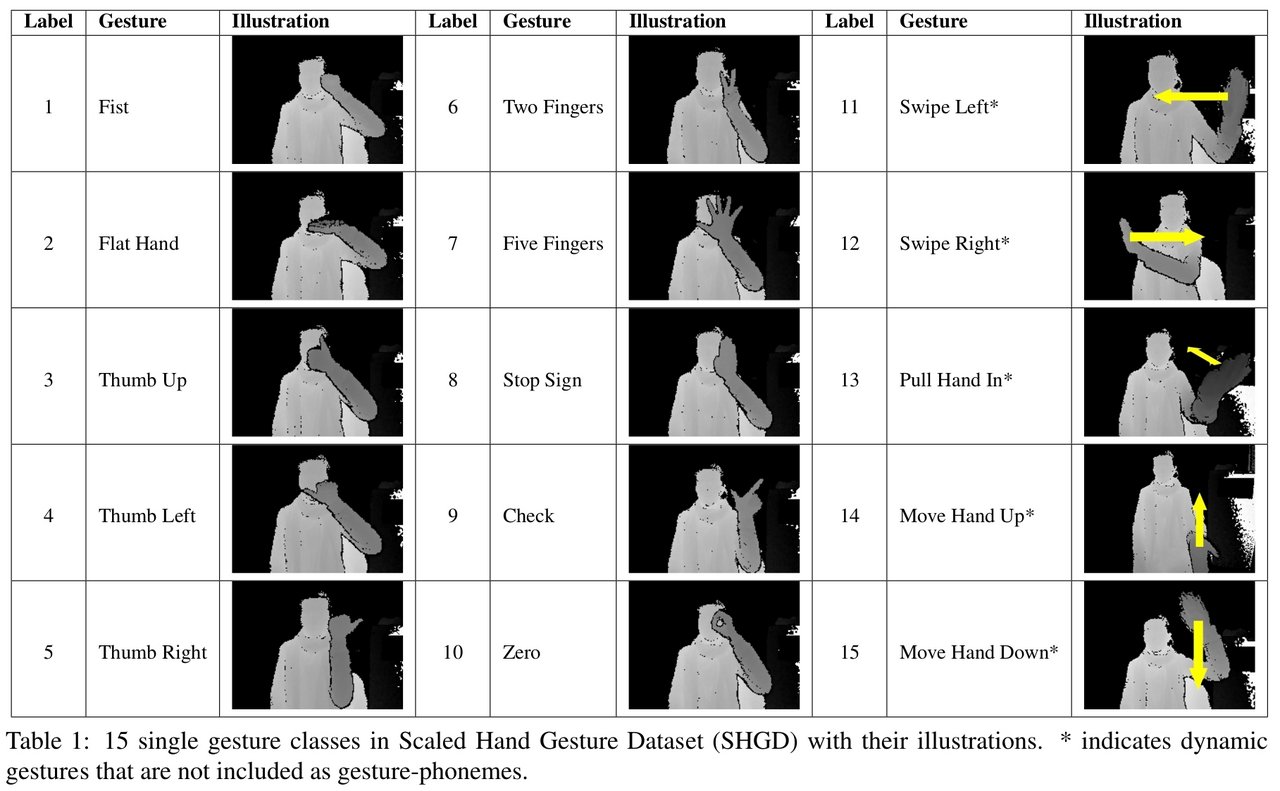

- Single Gestures: In its training set, SHGD contains only single gestures from 15 classes, which are shown in Table 1. Recordings in the dataset are continuous video streams, meaning that each recording contains both no-gesture and gesture parts. Moreover, each gesture contains preparation, nucleus and retraction phases, which are critical for real-time gesture recognition. Among the single gesture classes listed in Table 1, the static gestures are selected as gesture-phonemes, since it is more convenient to perform different static gestures sequentially.

- Gesture Tuples: A gesture tuple is a hand gesture that consists of sequentially performed phonemes. There are in total 10 different phonemes. When constructing gesture tuples, we exclude consecutive identical phonemes to avoid confusion about the sequence length. Therefore, the total number of different tuples is $N = m(m-1)^{s-1}$, where $m$ is the number of different phonemes and $s$ is the number of phonemes that the gesture tuple contains. Besides the test set for single gestures, SHGD also has a test set for gesture tuples containing 3 phonemes. Five subjects perform gesture tuples under 5 different lighting conditions (all environments except (2)). There are in total $10 \times (10-1)^{3-1} = 810$ permutations, that is, 810 different classes for 3-tuple gestures. Recordings are not segmented for this case. Therefore, each recording consists of a no-gesture part, a 3-tuple gesture and another no-gesture part, with no annotation of where exactly the 3-tuple gesture occurs. Since gestures are performed at different speeds in real-life scenarios, we have also collected 3-tuple gestures at three different speeds: slow, medium and fast. The subjects should finish 3-tuple gestures within 300 frames (6.7 sec), 240 frames (5.3 sec) and 180 frames (4 sec) for slow, medium and fast speed, respectively.
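The tuple count can be verified by brute force. The sketch below enumerates all sequences of `s` phonemes drawn from `m` phonemes, discards those with identical consecutive phonemes, and compares the result with the closed form m(m − 1)^(s − 1). The function names and the use of integer phoneme IDs are illustrative assumptions, not part of the SHGD tooling.

```python
from itertools import product


def count_tuples(m: int, s: int) -> int:
    """Closed form: m choices for the first phoneme, m - 1 for each later one."""
    return m * (m - 1) ** (s - 1)


def enumerate_tuples(m: int, s: int) -> list[tuple[int, ...]]:
    """All length-s sequences over m phonemes with no identical neighbours."""
    return [
        t
        for t in product(range(m), repeat=s)
        if all(a != b for a, b in zip(t, t[1:]))
    ]


tuples_3 = enumerate_tuples(m=10, s=3)
print(len(tuples_3), count_tuples(10, 3))
```

Note that a phoneme may reappear within a tuple as long as it is not repeated back to back, so `(0, 1, 0)` is a valid 3-tuple while `(0, 0, 1)` is not.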

SHGD-15 and SHGD-13

SHGD-15 refers to the standard dataset where all single gestures in Table 1 are included. SHGD-13, on the other hand, is specifically designed for 3-tuple gesture recognition. Besides the 10 phonemes, SHGD-13 also contains preparation (raising hand), retraction (lowering hand) and no-gesture classes. As there is no indication of when a gesture starts and ends in the video, we use the preparation and retraction classes to detect Start-of-Gesture (SoG) and End-of-Gesture (EoG). We use the no-gesture class to reduce the number of false alarms since, most of the time, no gesture is performed in real-time gesture recognition applications. SHGD-15 is a balanced dataset with 96 samples in each class. However, SHGD-13 is an imbalanced dataset, where the preparation and retraction classes contain 10 times more samples than the phonemes, whereas no-gesture contains around 20 times more samples than the phonemes. Therefore, training on SHGD-13 requires special attention.
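One common way to handle this kind of imbalance is to weight each class inversely to its frequency in the training loss. The sketch below is a generic illustration and not necessarily the approach used by the authors; the class names and the relative counts (1 : 10 : 20, taken from the approximate ratios stated above) are assumptions.

```python
def inverse_frequency_weights(counts: dict[str, float]) -> dict[str, float]:
    """Weight each class by total / (num_classes * class_count).

    A perfectly balanced dataset yields a weight of 1.0 for every class.
    """
    total = sum(counts.values())
    num_classes = len(counts)
    return {name: total / (num_classes * n) for name, n in counts.items()}


# Relative sample counts per class (hypothetical units, not real counts).
relative_counts = {f"phoneme_{i}": 1 for i in range(10)}
relative_counts.update({"preparation": 10, "retraction": 10, "no_gesture": 20})

weights = inverse_frequency_weights(relative_counts)
for name, w in weights.items():
    print(f"{name}: {w:.3f}")
```

With these ratios a phoneme sample is weighted 10 times as heavily as a preparation or retraction sample and 20 times as heavily as a no-gesture sample. Such weights could then be passed to a weighted cross-entropy loss in the training framework of choice.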

Download

The database is made freely available for research purposes.

You can download the dataset in 5 parts below. By downloading the dataset, you automatically agree to the license terms stated in SHGD_License.pdf.

Implementation

The code is available at: https://github.com/yaorong0921/GeScale

Citation & Contact

All documents and papers that report on research using SHGD must acknowledge the use of the dataset by including an appropriate citation to the following:

@inproceedings{kopuklu2019talking,
  title={Talking with your hands: Scaling hand gestures and recognition with cnns},
  author={Kopuklu, Okan and Rong, Yao and Rigoll, Gerhard},
  booktitle={Proceedings of the IEEE International Conference on Computer Vision Workshops},
  year={2019}
}

Contact:

Okan Köpüklü, M.Sc.

okan.kopuklu@tum.de

Yao Rong, M.Sc.

yao.rong@uni-tuebingen.de