Driver Micro Hand Gestures (DriverMHG) Dataset

Many vision-based datasets are publicly available, but none targets the specific task of classifying driver micro hand gestures on a steering wheel. We therefore recorded the Driver Micro Hand Gesture (DriverMHG) dataset, which fulfills the following criteria:

- Large enough to train a Deep Neural Network

- Contains the desired labeled gestures

- Has a balanced distribution of labeled gestures

- Has 'none' and 'other' action classes to enable continuous classification

- Allows benchmarking

DriverMHG Dataset Collection

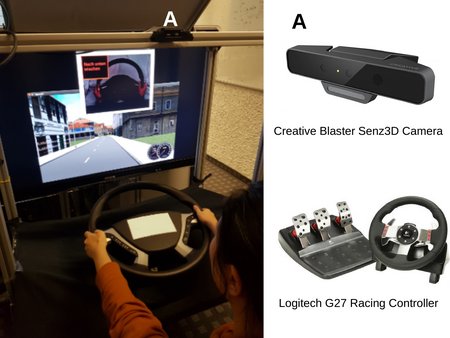

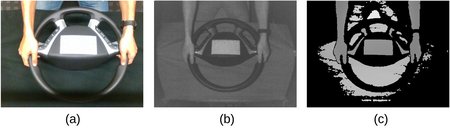

To record this dataset, we set up a driving simulator as shown in the figure above. The simulator consists of a monitor, a Creative Blaster Senz3D camera featuring Intel RealSense SR300 technology, a Logitech G27 racing controller whose wheel was replaced with a truck steering wheel, and the OpenDS driving simulator software. The dataset was recorded with the help of 25 volunteers (13 male and 12 female) in synchronized RGB, infrared and depth modalities at a resolution of 320 x 240 pixels and a frame rate of 30 fps. Example recordings for the three modalities are shown in the figure below.

For each subject, there are 5 recordings in total, each containing 42 gesture samples: the 5 micro gestures together with the 'other' and 'none' gestures, for each hand. Each recording of a subject was made under a different lighting condition: room lights, darkness, an external light source from the left, an external light source from the right, and intense lighting from both sides. We randomly shuffled the order of the subjects and split the dataset by subject into training (72%) and testing (28%) sets. Recordings from subjects 1 to 18 (inclusive) belong to the training set, and recordings from subjects 19 to 25 (inclusive) belong to the test set.
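The numbers above can be checked with a few lines of Python. This is only a sketch: the function and constant names are hypothetical and not part of the released code, and the value of three repetitions per class and hand is inferred from 42 samples divided over 7 classes and 2 hands.

```python
# Subject-wise split as described in the text: subjects 1-18 train, 19-25 test.
TRAIN_SUBJECTS = range(1, 19)
TEST_SUBJECTS = range(19, 26)
NUM_SUBJECTS = 25


def split_of(subject_id: int) -> str:
    """Return the split a subject belongs to (hypothetical helper)."""
    if subject_id in TRAIN_SUBJECTS:
        return "train"
    if subject_id in TEST_SUBJECTS:
        return "test"
    raise ValueError(f"unknown subject id: {subject_id}")


# Per-recording arithmetic.
NUM_MICRO_GESTURES = 5
CLASSES_PER_HAND = NUM_MICRO_GESTURES + 2   # plus 'other' and 'none'
HANDS = 2
SAMPLES_PER_RECORDING = 42
RECORDINGS_PER_SUBJECT = 5

# Inferred, not stated explicitly for every class: 42 / (7 * 2) = 3.
repetitions = SAMPLES_PER_RECORDING // (CLASSES_PER_HAND * HANDS)
samples_per_subject = RECORDINGS_PER_SUBJECT * SAMPLES_PER_RECORDING
train_fraction = len(TRAIN_SUBJECTS) / NUM_SUBJECTS
test_fraction = len(TEST_SUBJECTS) / NUM_SUBJECTS
```

Splitting by subject rather than by recording keeps every person entirely in one set, so test results reflect performance on unseen drivers.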

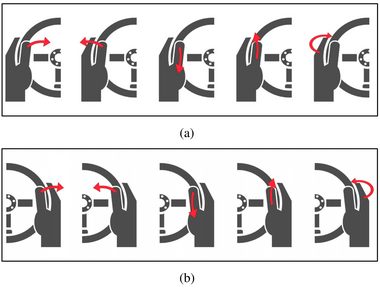

The micro gestures should be natural and quick to execute, and should neither distract the subjects nor require them to take their hands off the wheel. Five micro gestures were therefore selected: 'swipe right', 'swipe left', 'flick down', 'flick up' and 'tap'. The first four require movement of the thumb only, while 'tap' is performed by slightly patting the side of the wheel with four fingers. The figure below illustrates the five selected micro gestures for the left and right hands.

Additionally, we introduce the 'other' and 'none' gestures. For each recording, three 'none' and 'other' gestures were specifically selected from the recorded data. With the 'other' label, the network learns the drivers' other movements when no gesture is performed, whereas with the 'none' label it learns that the drivers' hands are steady (i.e. there is no movement or gesture). Including the 'none' and 'other' action classes enables robust online recognition, because the video stream can be analyzed continuously. The gestures were annotated by hand with their starting and ending frame numbers.
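As an illustration of how start/end frame annotations can feed continuous classification, the sketch below expands segment annotations into one label per frame. The `(label, start_frame, end_frame)` tuple layout, the zero-based frame indexing and the inclusive end frame are assumptions made for this example; the actual annotation file format is described with the dataset itself.

```python
from typing import List, Optional, Tuple

# Hypothetical annotation layout: (label, start_frame, end_frame), end inclusive.
Annotation = Tuple[str, int, int]


def frame_labels(annotations: List[Annotation], num_frames: int) -> List[Optional[str]]:
    """Expand segment annotations into a per-frame label list.

    Frames not covered by any annotation stay None (unlabeled).
    """
    labels: List[Optional[str]] = [None] * num_frames
    for label, start, end in annotations:
        if not 0 <= start <= end < num_frames:
            raise ValueError(f"segment out of range: {(label, start, end)}")
        for frame in range(start, end + 1):
            labels[frame] = label
    return labels


# Usage with made-up frame numbers:
example = frame_labels([("tap", 2, 3), ("none", 5, 6)], num_frames=8)
```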

DriverMHG Dataset Statistics

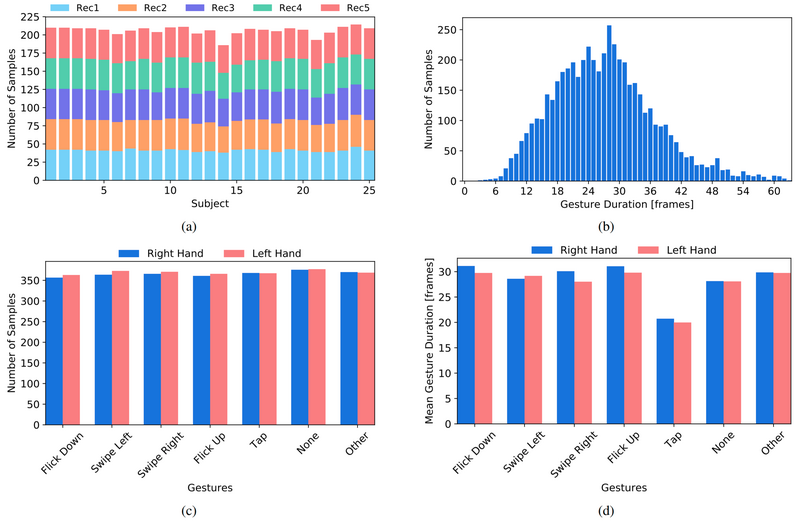

The figure above shows the statistics of the collected dataset: (a) shows the number of samples from the 5 recordings of each subject, which is around 210; (b) shows the histogram of gesture durations (in frames); (c) shows that the number of samples per class is balanced for both the right and left hands; (d) shows the mean gesture duration for each action class. The figure shows that the 'tap' action can be executed very quickly compared to the other action classes. Mean gesture durations for the 'none' and 'other' action classes are kept similar to those of the 'flick down/up' and 'swipe left/right' action classes.
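Statistics of this kind (sample count and mean duration per class) can be recomputed from the start/end frame annotations. The following is a minimal sketch, assuming a hypothetical `(label, start_frame, end_frame)` tuple per sample with an inclusive end frame; only the 30 fps frame rate is taken from the dataset description.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

FPS = 30  # frame rate stated in the dataset description

# Hypothetical annotation layout: (label, start_frame, end_frame), end inclusive.
Annotation = Tuple[str, int, int]


def class_statistics(annotations: List[Annotation]) -> Dict[str, Tuple[int, float]]:
    """Return {label: (number of samples, mean duration in frames)}."""
    durations = defaultdict(list)
    for label, start, end in annotations:
        durations[label].append(end - start + 1)
    return {label: (len(d), mean(d)) for label, d in durations.items()}


# Usage with made-up frame numbers:
stats = class_statistics([("tap", 0, 9), ("tap", 20, 31), ("none", 40, 79)])
tap_mean_seconds = stats["tap"][1] / FPS
```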

Download

The database is made freely available for research purposes.

You can download the dataset in 4 parts below. By downloading the dataset, you automatically agree to the license terms stated in DriverMHG_License.pdf.

Implementation

We provide the code used to train and evaluate our methodology. It was developed using the Efficient-3D-CNNs and Real-time-GesRec projects. Please read the README in the zip file to run the code successfully.

Citation & Contact

All documents and papers that report on research that uses the DriverMHG dataset will acknowledge the use of the dataset by including an appropriate citation to the following:

@article{kopuklu2020drivermhg,
  title={DriverMHG: A Multi-Modal Dataset for Dynamic Recognition of Driver Micro Hand Gestures and a Real-Time Recognition Framework},
  author={K{\"o}p{\"u}kl{\"u}, Okan and Ledwon, Thomas and Rong, Yao and Kose, Neslihan and Rigoll, Gerhard},
  journal={arXiv preprint arXiv:2003.00951},
  year={2020}
}

Contact:

Okan Köpüklü, M.Sc.

okan.kopuklu@tum.de