Driver Anomaly Detection (DAD) Dataset

Several vision-based driver monitoring datasets are publicly available, but none of them targets open set recognition, in which normal driving must still be distinguished from previously unseen anomalous actions. To fill this research gap, we have recorded the Driver Anomaly Detection (DAD) dataset, which has the following properties:

- The DAD dataset is large enough to train deep neural network architectures from scratch.

- The DAD dataset is multi-modal, containing depth and infrared modalities, so that the system is operable under different lighting conditions.

- The DAD dataset is multi-view containing front and top views. These two views are recorded synchronously and complement each other.

- The videos are recorded at 45 frames per second, providing high temporal resolution.

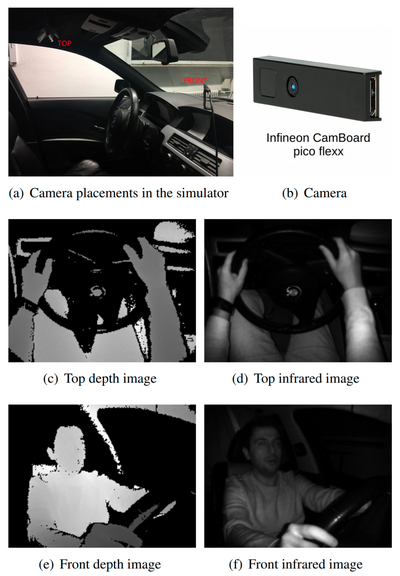

We have recorded the DAD dataset using the driving simulator shown in Fig. 1. The simulator contains a real BMW car cockpit, and the subjects are instructed to drive in a computer game that is projected in front of the car. Two Infineon CamBoard pico flexx cameras are placed on top of and in front of the driver. The front camera records the driver's head, body and the visible part of the hands (the left hand is mostly obscured by the steering wheel), while the top camera focuses on the driver's hand movements. The dataset is recorded in synchronized depth and infrared modalities with a resolution of 224 × 171 pixels and a frame rate of 45 fps. Example recordings for the two views and two modalities are shown in Fig. 1.
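To give a sense of what these recording parameters imply, the sketch below computes the array shape of a clip for one view and one modality. The helper name `clip_shape` and the 30-second example duration are illustrative assumptions; they are not part of any tooling shipped with the dataset.

```python
# Recording parameters stated in the text above.
FRAME_WIDTH = 224   # pixels
FRAME_HEIGHT = 171  # pixels
FPS = 45            # frames per second


def clip_shape(duration_s: float) -> tuple[int, int, int]:
    """Return (frames, height, width) for one view and one modality.

    Hypothetical helper for illustration only.
    """
    n_frames = round(duration_s * FPS)
    return (n_frames, FRAME_HEIGHT, FRAME_WIDTH)


if __name__ == "__main__":
    frames, height, width = clip_shape(30.0)  # 30 s is an example duration
    print("frames:", frames)
    print("values per clip:", frames * height * width)
```

With two views and two modalities recorded synchronously, each time step corresponds to four such frames.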

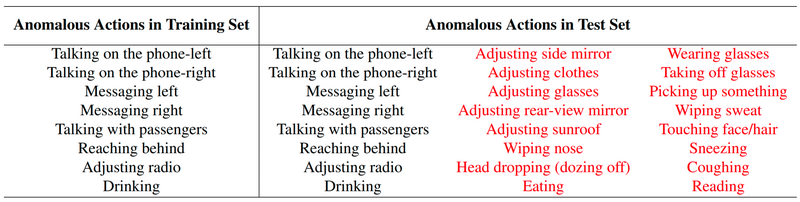

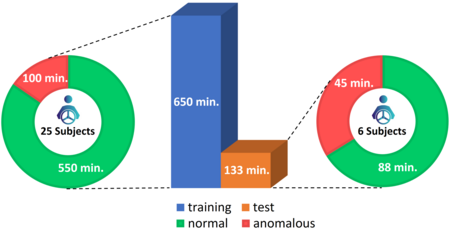

For the dataset recording, 31 subjects are asked to drive in a computer game, performing either normal driving or anomalous driving. The training set contains recordings of 25 subjects, and each subject has 6 normal driving and 8 anomalous driving video recordings. Each normal driving video lasts about 3.5 minutes, and each anomalous driving video lasts about 30 seconds and contains a different distracting action. The list of distracting actions recorded in the training set can be found in Table 1. In total, the training set contains around 550 minutes of normal driving recordings and 100 minutes of anomalous driving recordings.
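As a quick sanity check, the training set totals can be reproduced from the per-subject figures. This is a minimal sketch that treats the approximate durations ("about 3.5 minutes", "about 30 seconds") as exact, so it yields nominal values; the function name is an assumption made for illustration.

```python
# Per-subject figures stated in the text for the training set.
TRAIN_SUBJECTS = 25
NORMAL_VIDEOS_PER_SUBJECT = 6
ANOMALOUS_VIDEOS_PER_SUBJECT = 8
NORMAL_VIDEO_MIN = 3.5     # "about 3.5 minutes", treated as exact here
ANOMALOUS_VIDEO_MIN = 0.5  # "about 30 seconds", treated as exact here


def nominal_training_minutes() -> tuple[float, float]:
    """Return nominal (normal, anomalous) recording minutes."""
    normal = TRAIN_SUBJECTS * NORMAL_VIDEOS_PER_SUBJECT * NORMAL_VIDEO_MIN
    anomalous = (
        TRAIN_SUBJECTS * ANOMALOUS_VIDEOS_PER_SUBJECT * ANOMALOUS_VIDEO_MIN
    )
    return normal, anomalous


if __name__ == "__main__":
    normal, anomalous = nominal_training_minutes()
    print(f"normal: {normal} min, anomalous: {anomalous} min")
```

The nominal result is 525 minutes of normal driving and 100 minutes of anomalous driving. The gap to the reported total of around 550 minutes is consistent with the per-video durations being approximate.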

The test set contains 6 subjects, and each subject has 6 video recordings lasting around 3.5 minutes. Anomalous actions occur randomly during the videos. Most importantly, there are 16 distracting actions in the test set that are not available in the training set; these can be found in Table 1. Because of these additional distracting actions, the networks need to be trained for the open set recognition task and must distinguish normal driving no matter what the distracting action is. The complete test set consists of 88 minutes of normal driving recordings and 45 minutes of anomalous driving recordings. The test set constitutes 17% of the complete DAD dataset, which is around 95 GB. The dataset statistics can be found in Fig. 2.
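The 17% figure follows from the recording durations reported for the two splits. The sketch below recomputes it from the stated totals; it assumes the share is measured in recording minutes, which the text does not state explicitly.

```python
# Recording totals in minutes, as stated in the text.
TRAIN_NORMAL_MIN = 550
TRAIN_ANOMALOUS_MIN = 100
TEST_NORMAL_MIN = 88
TEST_ANOMALOUS_MIN = 45


def test_set_share() -> float:
    """Return the test set's fraction of all recording minutes."""
    train_total = TRAIN_NORMAL_MIN + TRAIN_ANOMALOUS_MIN
    test_total = TEST_NORMAL_MIN + TEST_ANOMALOUS_MIN
    return test_total / (train_total + test_total)


if __name__ == "__main__":
    print(f"test share: {test_set_share():.1%}")
```

The test set totals 133 minutes out of 783 minutes overall, which rounds to 17%.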

Download

The DAD dataset is made freely available for research purposes.

You can download the dataset in 10 parts below. By downloading the dataset, you automatically agree to the license terms stated in DAD_License.pdf.

Annotations of open and closed set samples in the test data can be downloaded via open_closed_set_annotations_for_test.csv.
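Once downloaded, the annotation file can be inspected before committing to a parsing scheme. The sketch below only reports the first row and the number of remaining rows. It assumes the first row is a header, which has not been verified against the actual file, and the helper name `summarize_annotations` is hypothetical.

```python
import csv
from pathlib import Path


def summarize_annotations(path):
    """Return (first_row, remaining_row_count) for a CSV file.

    Assumes the first row is a header; this is an unverified assumption
    about the annotation file.
    """
    with Path(path).open(newline="") as handle:
        reader = csv.reader(handle)
        header = next(reader, [])
        row_count = sum(1 for _ in reader)
    return header, row_count


if __name__ == "__main__":
    csv_path = Path("open_closed_set_annotations_for_test.csv")
    if csv_path.exists():
        columns, rows = summarize_annotations(csv_path)
        print("columns:", columns)
        print("rows:", rows)
    else:
        print("annotation file not found; download it first")
```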

Visual Examples

Using the contrastive learning approach proposed in the article Driver Anomaly Detection: A Dataset and Contrastive Learning Approach, the following results can be obtained:

Citation & Contact

All documents and papers that report on research that uses the DAD dataset will acknowledge the use of the dataset by including an appropriate citation to the following:

    @article{kopuklu2020driver,
      title={Driver Anomaly Detection: A Dataset and Contrastive Learning Approach},
      author={K{\"o}p{\"u}kl{\"u}, Okan and Zheng, Jiapeng and Xu, Hang and Rigoll, Gerhard},
      journal={arXiv preprint arXiv:2009.14660},
      year={2020}
    }

Contact:

Okan Köpüklü, M.Sc.

okan.kopuklu@tum.de